How to project point cloud to depth image? #2190

Comments

|

following! |

|

If you ask me, I do not find that method very helpful. Capturing the float buffers is not very flexible and is hard to debug if you run into difficulties. Therefore, I developed my own algorithm to create a depth map. It is based on projecting the points into the image plane, iterating over all image coordinates, and checking conditions (see here #1912 (comment) ). This gives much more flexibility, especially when you need to include lens distortion. Also, with this method you get the depth map as a numpy array, which lets you display it with any matplotlib colormap. I don't know whether flexible depth map creation is a feature request for the future? |
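For readers who want to try the projection idea described above, here is a minimal sketch. It is not the code from #1912; it assumes the points are already expressed in the camera frame (z pointing forward), uses a plain pinhole model without distortion, and the function name `project_to_depth` is made up for illustration.

```python
import numpy as np

def project_to_depth(points, fx, fy, cx, cy, width, height):
    """Project Nx3 camera-frame points to a (height, width) depth map.

    Hypothetical helper: pixels that receive no point keep depth 0, and
    when several points land on the same pixel the nearest one wins.
    """
    points = np.asarray(points, dtype=np.float64)
    z = points[:, 2]
    valid = z > 0  # keep only points in front of the camera
    x, y, z = points[valid, 0], points[valid, 1], z[valid]

    # Pinhole projection to pixel coordinates, rounded to the nearest pixel.
    u = np.round(fx * x / z + cx).astype(int)
    v = np.round(fy * y / z + cy).astype(int)

    # Discard points that fall outside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    # Simple z-buffer: start at +inf and keep the minimum depth per pixel.
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)
    depth[np.isinf(depth)] = 0.0
    return depth
```

With a point cloud loaded through Open3D, `np.asarray(pcd.points)` could be passed as `points`, provided the cloud has first been transformed into the camera frame. A sparse cloud will leave holes (zeros) between projected points.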

|

That would be great. I think many other people would like this feature, since this question has been asked multiple times in the issues, each time with different answers. |

|

+1 |

|

Hello GnnrMrkr, your code to create a depth map from a point cloud sounds very interesting! Looking forward to seeing it. |

|

Reusing this question. I see there is a new GUI interface using filament. Here is an example: Is there a way to obtain the depth maps (similar to what |

|

@pablospe Not currently but it is on our roadmap for the next release. |

|

@errissa Thanks for the reply! One more question related to this topic. What about the equivalent to Anyways I could use some code similar to: The problem with this function is all those ifs; it doesn't allow arbitrary pinhole camera parameters. For my purposes I modified this function to avoid this limitation and render depth maps with headless rendering. I was wondering whether it makes sense to create a PR with a more flexible version, something like I will create the PR, but I don't know whether this function will be removed. So far I haven't found documentation about the new GUI interface. |

|

@pablospe You are correct about the limitation on configuring the camera in the new rendering pipeline. We are currently working on allowing it to be configured with camera intrinsics. Regarding a PR, we are, of course, always happy to receive contributions. The original visualizer will be removed at some point in the future, but I am not certain how long from now. I will find out. |

Ok, I will make a clean version of this function and also add the python wrapper call. |

|

@pablospe even the dirty version would be helpful! |

|

I'm currently part of a project where we need to create a depth map with lens distortion. Do you have any idea when the next release will be? We noticed that the visualization method didn't work well for our project, mostly because large portions of the environment were not represented, so we would appreciate an ETA. That way we can decide whether to work around the old visualization method and deal with the cons, or wait for the new method. Also, will an example be posted showing how to use this new visualization method in Python?
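As a rough illustration of what "with lens distortion" can mean when projecting points yourself, the sketch below applies a two-coefficient radial distortion model to the normalized coordinates before the intrinsics. The model choice (radial terms `k1`, `k2` only, no tangential terms) and the function name are assumptions for illustration, not something Open3D provides.

```python
import numpy as np

def project_with_radial_distortion(points, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project Nx3 camera-frame points (z > 0) to pixel coordinates.

    Hypothetical helper: applies the radial factor 1 + k1*r^2 + k2*r^4
    to the normalized image coordinates, then the pinhole intrinsics.
    """
    points = np.asarray(points, dtype=np.float64)
    z = points[:, 2]

    # Normalized (undistorted) image coordinates.
    xn = points[:, 0] / z
    yn = points[:, 1] / z

    # Radial distortion factor.
    r2 = xn**2 + yn**2
    factor = 1.0 + k1 * r2 + k2 * r2**2

    # Distorted pixel coordinates (sub-pixel, not rounded).
    u = fx * xn * factor + cx
    v = fy * yn * factor + cy
    return u, v
```

With `k1 = k2 = 0` this reduces to the plain pinhole projection, so the returned `u`, `v` can be rounded and written into a depth buffer in the same way as for an undistorted camera.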

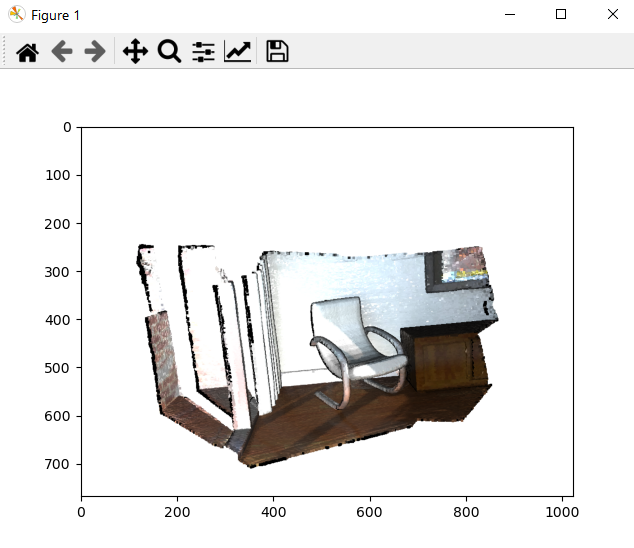

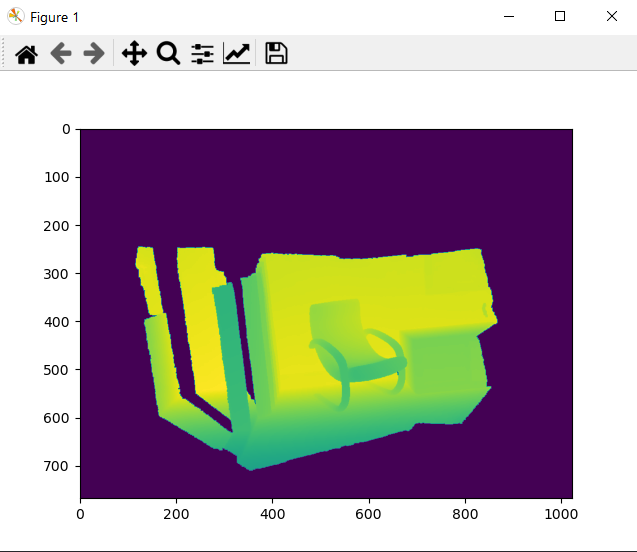

See PR #2564. Do you know who is responsible for the new viewer in Open3D? I would like to try to implement the depth rendering with filament, but I am afraid I would need some directions first. @ghost, @stanleyshly, @zapaishchykova: I created an example that should generate depth maps without restrictions on the FOV, zoom, etc. I tested it mainly on Ubuntu 18.04. See here: https://github.com/pablospe/open3D_depthmap_example If you don't want to compile PR #2564, you can install the .whl file. |

|

Now I believe this solves your problem, and this issue can be closed. |

I currently have this code to load a point cloud in, and I also have code for the pinhole intrinsic.

pcd = o3d.io.read_point_cloud("./pcd.txt", format='xyz')

intrinsics = o3d.camera.PinholeCameraIntrinsic(640, 480, 525.0, 525.0, 319.5, 239.5)

However, how would I take an irregularly spaced point cloud and create a grayscale depth map from it, while using the camera intrinsics?

I tried the code in issue #1073, but I get a heat map, not a grayscale, plus I appear to lose some corners, depending on the imported point cloud, similar to the right image on the 4th row of images in #1073.

Here is my code so far, taken from #1073, but the depth map is zoomed out, and when I reimport it into o3d, the depth map is not scaled properly with the RGB image.

```python
import matplotlib.pyplot as plt
import numpy as np
import open3d as o3d
from PIL import Image

# Open a 640x480 window to match the intrinsics and add the point cloud.
vis = o3d.visualization.Visualizer()
vis.create_window('pcl', 640, 480, 50, 50, True)
vis.add_geometry(pcd)

# Capture depth and colour buffers; do_render=True renders before reading.
depth = vis.capture_depth_float_buffer(True)
image = vis.capture_screen_float_buffer(True)

# Show and save the depth buffer (matplotlib applies its default colormap).
plt.imshow(np.asarray(depth))
plt.imsave("./test_depth.png", np.asarray(depth))

# Convert the saved image to grayscale with alpha.
img = Image.open('./test_depth.png').convert('LA')
img.save('./greyscale.png')
```
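On the heat-map point: `plt.imshow` and `plt.imsave` apply matplotlib's default colormap when none is given, so a float depth array is saved in colour. One possible way to get a true grayscale image is to scale the depth values to 8 bits first. The helper below is a sketch with an assumed convention (0 means "no depth", nearest valid depth maps to 1, farthest to 255); the name `depth_to_gray8` is hypothetical.

```python
import numpy as np

def depth_to_gray8(depth):
    """Scale a float depth map to uint8, reserving 0 for missing depth.

    Hypothetical helper: valid pixels (depth > 0) are mapped linearly
    so that the nearest depth becomes 1 and the farthest becomes 255.
    """
    depth = np.asarray(depth, dtype=np.float64)
    valid = depth > 0
    gray = np.zeros(depth.shape, dtype=np.uint8)
    if not valid.any():
        return gray  # nothing to scale

    d_min = depth[valid].min()
    d_max = depth[valid].max()
    span = max(d_max - d_min, 1e-12)  # avoid division by zero on flat maps

    scaled = (depth[valid] - d_min) / span
    gray[valid] = np.round(1 + 254 * scaled).astype(np.uint8)
    return gray
```

The result can be written with `plt.imsave("./greyscale.png", gray, cmap="gray", vmin=0, vmax=255)` or with `Image.fromarray(gray).save("./greyscale.png")`. Note that this scaling is relative to each image, so the absolute metric depth is lost in the saved file.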
