This is an LSTM implementation based on Caffe.

- Mini-batch update

- Examples for real datasets (e.g., handwriting recognition, speech recognition)

- Peephole connection

Example code is in `/examples/lstm_sequence/`.

In this example, an LSTM network is trained to generate a predefined sequence without any inputs.

This experiment was introduced in the Clockwork RNN paper.
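
To make the "no inputs" setup concrete, here is a minimal pure-Python sketch of an LSTM that emits a sequence driven only by its recurrent state and biases. It is an illustration, not the Caffe layer in this repository: the function names (`lstm_step`, `generate`), the gate ordering, the linear readout, and the random untrained weights are all assumptions made for the example. Training would adjust these weights so that the generated values match the predefined target sequence.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    # Plain matrix-vector product on nested lists.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lstm_step(h_prev, c_prev, W, b):
    """One LSTM step with no external input (hypothetical helper).

    W has shape (4n, n) and b has length 4n, where n is the number of
    hidden units. The gates depend only on the previous hidden state.
    """
    n = len(h_prev)
    z = [r + bias for r, bias in zip(matvec(W, h_prev), b)]
    i = [sigmoid(v) for v in z[0:n]]            # input gate
    f = [sigmoid(v) for v in z[n:2 * n]]        # forget gate
    o = [sigmoid(v) for v in z[2 * n:3 * n]]    # output gate
    g = [math.tanh(v) for v in z[3 * n:4 * n]]  # candidate cell value
    c = [fi * cp + ii * gi for fi, cp, ii, gi in zip(f, c_prev, i, g)]
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return h, c


def generate(length, W, b, w_out, b_out):
    """Unroll the LSTM from a zero state and read out one scalar per step."""
    n = len(w_out)
    h, c = [0.0] * n, [0.0] * n
    outputs = []
    for _ in range(length):
        h, c = lstm_step(h, c, W, b)
        outputs.append(sum(w * x for w, x in zip(w_out, h)) + b_out)
    return outputs


# Untrained example: random weights, 4 hidden units, 10 time steps.
random.seed(0)
n = 4
W = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(4 * n)]
b = [random.uniform(-1, 1) for _ in range(4 * n)]
w_out = [random.uniform(-1, 1) for _ in range(n)]
b_out = 0.0
sequence = generate(10, W, b, w_out, b_out)
print(sequence)
```

Because the network receives nothing at each step, everything it knows about the position in the sequence has to be carried in the hidden and cell states, which is what makes this a useful test of the recurrent memory.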

Four different LSTM networks are provided, along with shell scripts (`.sh`) for training them.

Each script generates a log file containing the predicted sequence and the true sequence.

You can use `plot_result.m` to visualize the results.

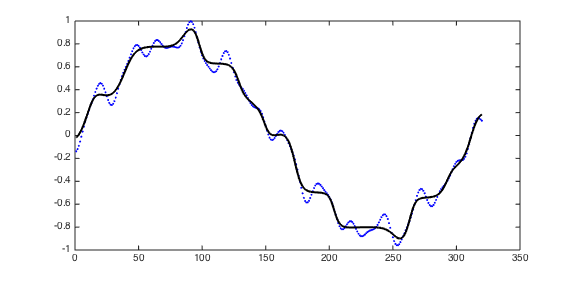

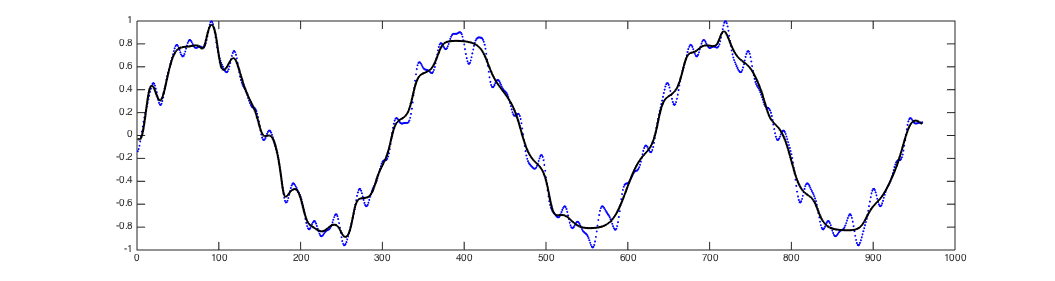

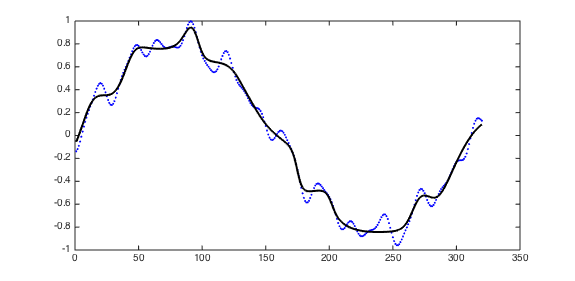

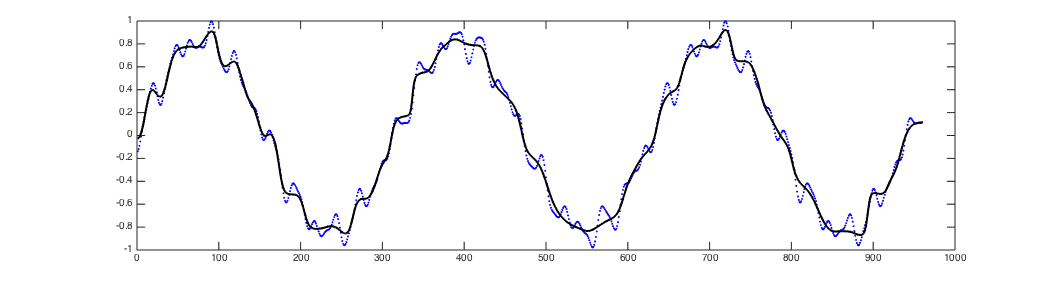

The result of four LSTM networks will be as follows: