Copyright (C) 2012- Joseph T. Lizier; 2014- Ipek Özdemir; 2017- Pedro Mediano; 2019- Emanuele Crosato, Sooraj Sekhar, Oscar Huaigu Xu; 2022- David Shorten

JIDT provides a stand-alone, open-source Java implementation (also usable in Matlab, Octave, Python, R, Julia and Clojure) of information-theoretic measures of distributed computation in complex systems, i.e. information storage, transfer and modification.

JIDT includes implementations:

- principally for the measures transfer entropy, mutual information, and their conditional variants, as well as active information storage, entropy, etc;

- for both discrete and continuous-valued data;

- using various types of estimators (e.g. Kraskov-Stögbauer-Grassberger estimators, box-kernel estimation, linear-Gaussian), as described in full at ImplementedMeasures.
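
To make the central measure concrete, here is a minimal from-scratch sketch of a plug-in (frequency-counting) transfer entropy estimate for discrete data. It is an illustration only and not JIDT's implementation: the function name `transfer_entropy_discrete`, its parameters, and the restriction to a single source lag are assumptions made for this example.

```python
from collections import Counter
from math import log2
import random


def transfer_entropy_discrete(source, dest, k=1):
    """Plug-in transfer entropy (bits) from source to dest.

    Hypothetical helper for illustration: uses a destination history of
    length k and only the immediately preceding source value.
    """
    if len(source) != len(dest):
        raise ValueError("source and dest must have the same length")
    if k < 1 or len(dest) <= k:
        raise ValueError("need k >= 1 and more than k samples")

    # Each sample is (next dest value, dest past of length k, previous source value).
    samples = [
        (dest[t], tuple(dest[t - k:t]), source[t - 1])
        for t in range(k, len(dest))
    ]
    n = len(samples)

    joint = Counter(samples)                               # (next, past, src)
    past_src = Counter((p, s) for _, p, s in samples)      # (past, src)
    next_past = Counter((x, p) for x, p, _ in samples)     # (next, past)
    past = Counter(p for _, p, _ in samples)               # (past)

    te = 0.0
    for (x, p, s), count in joint.items():
        # Ratio p(next | past, src) / p(next | past), from empirical counts.
        cond_with_source = count / past_src[(p, s)]
        cond_without_source = next_past[(x, p)] / past[p]
        te += (count / n) * log2(cond_with_source / cond_without_source)
    return te


if __name__ == "__main__":
    rng = random.Random(0)
    src = [rng.randint(0, 1) for _ in range(10000)]
    copied = [0] + src[:-1]                       # dest copies the source with delay 1
    unrelated = [rng.randint(0, 1) for _ in range(10000)]
    print("copied source   :", transfer_entropy_discrete(src, copied))
    print("unrelated source:", transfer_entropy_discrete(unrelated, copied))
```

For a destination that copies a random binary source with a one-step delay, the estimate should come out close to 1 bit, and close to 0 bits for an unrelated source. Estimators for continuous-valued data (such as the Kraskov-Stögbauer-Grassberger estimators) work differently and are not covered by this sketch.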

JIDT is easy to use:

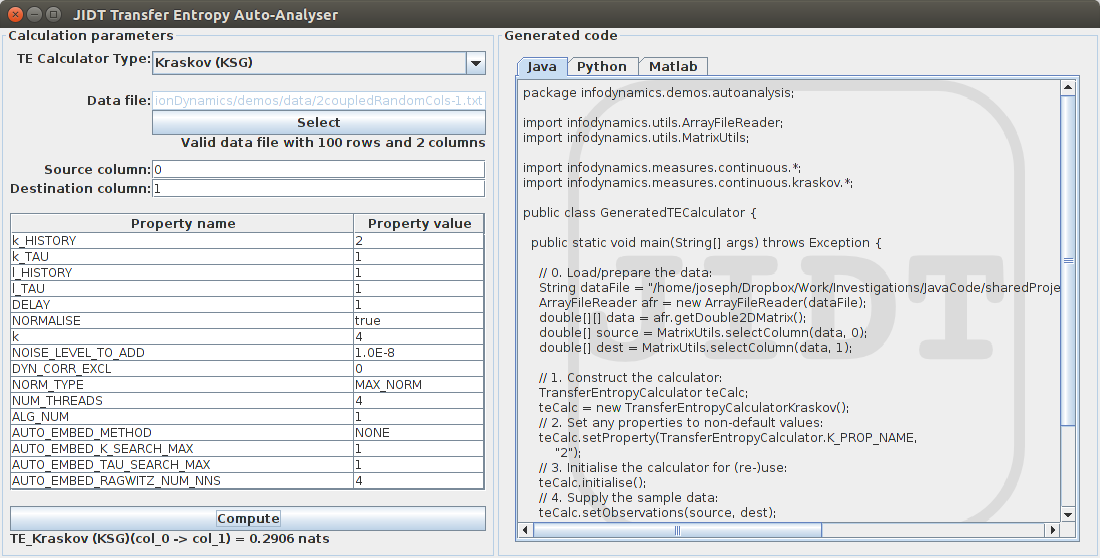

- It ships with a GUI application -- the AutoAnalyser, see picture above -- to facilitate point-and-click analysis, as well as code template generation for more complex analysis.

- We provide short video lectures and corresponding slides in a (beta) Course on understanding how to use information-theoretic tools to analyse complex systems, and how to implement such analysis with JIDT.

JIDT is distributed under the GNU GPL v3 license (or later).

- Download and Installation is very easy!

- Quick start: take a git clone (then build via AntScripts) OR download the latest v1.6.1 full distribution (suitable for all platforms) and see the readme.txt file therein.

- Documentation including: the paper describing JIDT at arXiv:1408.3270 (distributed with the toolkit), a (beta) Course including short video lectures and a shorter Tutorial, and Javadocs (v1.6.1 here);

- Demos are included with the full distribution, including a GUI app for automatic analysis and code generation (see picture above), simple Java demos and cellular automata (CA) demos.

- These Java tools can easily be used in Matlab/Octave, Python, R, Julia and Clojure! (click on each language here for examples)
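
As an illustration of calling the Java classes from Python, the sketch below assumes the third-party JPype package is installed and that you supply the path to `infodynamics.jar` from the distribution. The helper names `make_copy_pair` and `te_via_jidt` and the jar path are hypothetical; the calculator class and its methods are those used for the discrete transfer entropy calculator, but check the Javadocs for your version before relying on them.

```python
import os
import random


def make_copy_pair(n, seed=0):
    """Return (source, dest) where dest copies a random binary source with delay 1."""
    rng = random.Random(seed)
    source = [rng.randint(0, 1) for _ in range(n)]
    dest = [0] + source[:-1]
    return source, dest


def te_via_jidt(jar_path, source, dest, base=2, history=1):
    """Compute discrete transfer entropy (bits) with JIDT through JPype.

    jar_path is an assumption: point it at infodynamics.jar on your system.
    """
    if not os.path.isfile(jar_path):
        raise FileNotFoundError(jar_path)

    import jpype  # third-party dependency, imported lazily

    # Start the JVM once, with the JIDT jar on the classpath.
    if not jpype.isJVMStarted():
        jpype.startJVM(jpype.getDefaultJVMPath(), "-ea",
                       "-Djava.class.path=" + jar_path)

    calc_class = jpype.JPackage(
        "infodynamics.measures.discrete").TransferEntropyCalculatorDiscrete
    calc = calc_class(base, history)   # alphabet size, destination history length
    calc.initialise()

    # Convert the Python lists to Java int[] arrays before passing them in.
    java_source = jpype.JArray(jpype.JInt, 1)(source)
    java_dest = jpype.JArray(jpype.JInt, 1)(dest)
    calc.addObservations(java_source, java_dest)
    return calc.computeAverageLocalOfObservations()
```

A call such as `te_via_jidt("infodynamics.jar", *make_copy_pair(100))` should then return a value close to 1 bit, since the destination is a delayed copy of the source.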

For further information or announcements:

- Join our discussion group: http://groups.google.com/d/forum/jidt-discuss

- See also the FAQs

- Follow @infodynamicstkt on Twitter

Please cite your use of this toolkit as:

Joseph T. Lizier, "JIDT: An information-theoretic toolkit for studying the dynamics of complex systems", Frontiers in Robotics and AI 1:11, 2014; doi:10.3389/frobt.2014.00011 (pre-print: arXiv:1408.3270)

And please let me know about any publications resulting from its use!

See other PublicationsUsingThisToolkit.

22/08/2023 - New full distribution files available for release v1.6.1; Changes for v1.6.1 include: Minor updates to supporting use in Python, including virtual environments; Minor tweaks to fish schooling examples (mostly comments).

5/09/2022 - New full distribution files available for release v1.6; Changes for v1.6 include: Adding Flocking/Schooling/Swarming demo; Included Pedro's code on IIT and O-/S-Information measures; Spiking TE estimator added from David; Fixed up AutoAnalyser to work well for Python3 and numpy; Links to lecture videos included in the beta wiki for the course; Added rudimentary effective network inference (simplified version of the IDTxl full algorithm) in demos/octave/EffectiveNetworkInference.

26/11/2018 - New jar and full distribution files available for release v1.5; Changes for v1.5 include: Added GPU (cuda) capability for KSG Conditional Mutual Information calculator (proper documentation to come), brief wiki page and unit tests included; Added auto-embedding for TE/AIS with multivariate KSG, and univariate and multivariate Gaussian estimator (plus unit tests), for Ragwitz criteria and Maximum bias-corrected AIS, and also added Maximum bias-corrected AIS and TE to handle source embedding as well; Kozachenko entropy estimator adds noise to data by default; Added bias-correction property to Gaussian and Kernel estimators for MI and conditional MI, including with surrogates (only option for kernel); Enabled use of different bases for different variables in MI discrete estimator; All new above features enabled in AutoAnalyser; Added drop-down menus for parameters in AutoAnalyser; Included long-form lecture slides in course folder.

26/11/2017 - New jar and full distribution files available for release v1.4; Changes for v1.4 include: Major expansion of functionality for AutoAnalysers: adding Launcher applet and capability to double click jar to launch, added Entropy, CMI, CTE and AIS AutoAnalysers, also added binned estimator type, added all variables/pairs analysis, added statistical significance analysis, and ensured functionality of generated Python code with Python3; Added GPU (cuda) capability for KSG Mutual Information calculator (proper documentation and wiki page to come), including unit tests; Added fast neighbour search implementations for mixed discrete-continuous KSG MI estimators; Expanded Gaussian estimator for multi-information (integration); Made all demo/data files readable by Matlab.

17/12/2016 - New book out from J. Lizier et al., "An Introduction to Transfer Entropy: Information Flow in Complex Systems" published by Springer, which contains various examples using JIDT (distributed in our releases)

21/10/2016 - New jar and full distribution files available for release v1.3.1; Changes for v1.3.1 include: Major update to TransferEntropyCalculatorDiscrete so as to implement arbitrary source and dest embeddings and source-dest delay; Conditional TE calculators (continuous) handle empty conditional variables; Added new auto-embedding method for AIS and TE which maximises bias-corrected AIS; Added getNumSeparateObservations() method to TE calculators to make reconstructing/separating local values easier after multiple addObservations() calls; Fixed kernel estimator classes to return proper densities, not probabilities; Bug fix in mixed discrete-continuous MI (Kraskov) implementation; Added simple interface for adding joint observations for MultiInfoCalculatorDiscrete; Including compiled class files for the AutoAnalyser demo in distribution; Updated Python demo 1 to show use of numpy arrays with ints; Added Python demo 7 and 9 for TE Kraskov with ensemble method and auto-embedding respectively; Added Matlab/Octave example 10 for conditional TE via Kraskov (KSG) algorithm; Added utilities to prepare for enhancing surrogate calculations with fast nearest neighbour search; Minor bug patch to Python readFloatsFile utility.

19/7/2015 - New jar and full distribution files available for release v1.3; Changes for v1.3 include: Added AutoAnalyser (Code Generator) GUI demo for MI and TE; Added auto-embedding capability via Ragwitz criteria for AIS and TE calculators (KSG estimators); Added Java demo 9 for showcasing use of Ragwitz auto-embedding; Adding small amount of noise to data in all KSG estimators now by default (may be disabled via setProperty()); Added getProperty() methods for all conditional MI and TE calculators; Upgraded Python demos for Python 3 compatibility; Fixed bias correction on mixed discrete-continuous KSG calculators; Updated the tutorial slides to those in use for ECAL 2015 JIDT tutorial.

12/2/2015 - New jar and full distribution files available for release v1.2.1; Changes for v1.2.1 include: Added tutorial slides, description of exercises and sample exercise solutions; Made jar target Java 1.6; Added Schreiber TE heart-breath rate with KSG estimator demo code for Python.

28/1/2015 - New jar and full distribution files available for release v1.2; Changes for v1.2 include: Dynamic correlation exclusion, or Theiler window, added to all Kraskov estimators; Added univariate MI calculation to simple demo 6; Added Java code for Schreiber TE heart-breath rate with KSG estimator, ready for use as a template in Tutorial; Patch for crashes in KSG conditional MI algorithm 2.

20/11/2014 - New jar and full distribution files available for release v1.1; Changes for v1.1 include: Implemented Fast Nearest Neighbour Search for Kraskov-Stögbauer-Grassberger (KSG) estimators for MI, conditional MI, TE, conditional TE, AIS, Predictive info, and multi-information. This includes a general (multivariate) k-d tree implementation; Added multi-threading (using all available processors by default) for the KSG estimators -- code contributed by Ipek Özdemir; Added Predictive information / Excess entropy implementations for KSG, kernel and Gaussian estimators; Added R, Julia, and Clojure demos; Added Windows batch files for the Simple Java Demos; Added property for adding a small amount of noise to data in all KSG estimators.

15/8/2014 - JIDT paper finalised and uploaded to the website and arXiv:1408.3270

14/8/2014 - New jar and full distribution files available for our first official release, v1.0; Changes for v1.0 include: Added the draft of the paper on the toolkit to the release; Javadocs made ready for release; Switched source->destination arguments for discrete TE calculators to be with source first in line with continuous calculators; Renamed all discrete calculators to have Discrete suffix -- TE and conditional TE calculators also renamed to remove "Apparent" prefix and change "Complete" to "Conditional"; Kraskov estimators now using 4 nearest neighbours by default; Unit test for Gaussian TE against ChaLearn Granger causality measurement; Added Schreiber TE demos; Interregional transfer demos; documentation for Interaction lag demos; added examples 7 and 8 to Simple Java demos; Added property to add noise to data for Kraskov MI; Added derivation of Apache Commons Math code for chi square distribution, and included relevant notices in our release; Inserted translation class for arrays between Octave and Java; Added analytic statistical significance calculation to Gaussian calculators and discrete TE; Corrected Kraskov algorithm 2 for conditional MI to follow equation in Wibral et al. 2014.

20/4/2014 - New jar and full distribution files available for v0.2.0; Moved downloads to http://lizier.me/joseph/ since google code has stopped the download facility here :(. Changes for v0.2.0 include: Rearchitected (most) Transfer Entropy and Multivariate TE calculators to use an underlying conditional mutual information calculator, and have arbitrary embedding delay, source-dest delay; this includes moving Kraskov-Grassberger Transfer Entropy calculator to use a single conditional mutual information estimator instead of two mutual information estimators; Rearchitected (most) Active Information Storage calculators to use an underlying mutual information calculator; Added Conditional Transfer Entropy calculators using underlying conditional mutual information calculators; Moved mixed discrete-continuous calculators to a new "mixed" package; bug fixes.

11/9/2013 - New jar and full distribution files available for v0.1.4; added scripts to generate CA figures for 2013 book chapters; added general Java demo code; added Python demo code; made Octave/Matlab demos and CA demos properly compatible for Matlab; added extra Octave/Matlab general demos; added more unit tests for MI and conditional MI calculators, including against results from Wibral's TRENTOOL; bug fixes.

11/9/2013 - New CA demo scripts for several review book chapters we're preparing in 2013 have been uploaded - see CellularAutomataDemos.

4/6/2013 - Added instructions on how to use JIDT in Python and several PythonExamples.

13/01/2013 - New jar and full distribution files available for v0.1.3; existing Octave/Matlab demo code made compatible with Matlab; several bug fixes, including using max norm by default in Kraskov calculator (instead of requiring this to be set explicitly); more unit tests (including against results from Kraskov's own MI implementation)

19/11/2012 - New jar and full distribution files available for v0.1.2, including demo code for two newly submitted papers

31/10/2012 - Jar and full distribution files available for v0.1.1 (first distribution)

7/5/2012 - JIDT project created and code uploaded

This project has been supported by funding through:

- Australian Research Council Discovery Early Career Researcher Award (DECRA) "Relating function of complex networks to structure using information theory", J.T. Lizier, 2016-19 DE160100630

- Universities Australia - Deutscher Akademischer Austauschdienst (German Academic Exchange Service) UA-DAAD Australia-Germany Joint Research Co-operation grant "Measuring neural information synthesis and its impairment", Wibral, Lizier, Priesemann, Wollstadt, Finn, 2016-17

- University of Sydney Research Accelerator (SOAR) Fellowship 2019 Scheme, J.T. Lizier (CI), 2019-2020

- Australian Research Council Discovery Project "Large-scale computational modelling of epidemics in Australia: analysis, prediction and mitigation", M. Prokopenko, P. Pattison, M. Gambhir, J.T. Lizier, M. Piraveenan, 2016-19 DP160102742