Unable to connect to confluent kafka #1241

Comments

|

I'm not a Confluent Kafka expert: does it run inside your cluster, or do they manage it? From the looks of it, the address (kafka.svc:9092) points to a local service (it is also the one from our docs). Is that correct? |

|

Our Confluent Kafka does not run on a Kubernetes cluster. We are using the Confluent Kafka bootstrap servers (pkc-xxxxx.eastus2.azure.confluent.cloud:9092). We tried replacing the address with that value, but we still get the same error. |

|

@harshik9 okay, so that's the value that should be in |

|

@zroubalik, yes. We tried using that value in bootstrapServers. What should the sasl value be when connecting to Confluent Kafka? |

|

Same issue here, running a Kafka cluster locally alongside KEDA installed on an on-premise K8s cluster. As a test, I created a Pod and was able to curl Kafka topics from inside that Pod on my K8s cluster. So the Kafka cluster is reachable from inside my K8s cluster (initially I thought that was the problem), but it looks like it is KEDA that cannot reach the Kafka endpoints. Currently running Kafka without authentication, using KEDA v2 and K8s 1.18! |

The scenario you described here above, is Kafka with or without authentication? |

Without authentication. |
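A minimal sketch of the reachability test described above, for anyone who wants to repeat it from a Pod in the same namespace as the KEDA operator. The function names `can_connect` and `check_brokers` are made up for this example. It only checks that a TCP connection can be opened to each broker address; it says nothing about whether TLS or SASL settings are correct, so a passing check does not rule out an authentication problem.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a plain TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


def check_brokers(bootstrap_servers: str, timeout: float = 3.0) -> dict:
    """Check every 'host:port' entry of a comma-separated broker list."""
    results = {}
    for entry in bootstrap_servers.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, _, port = entry.rpartition(":")
        results[entry] = can_connect(host, int(port), timeout)
    return results


if __name__ == "__main__":
    # The address below is the placeholder used in this thread.
    print(check_brokers("kafka.svc:9092"))
```

If this reports `False` from a Pod in the operator's namespace but `True` from a Pod elsewhere, the broker address or a network policy is the more likely culprit than the scaler itself.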

|

I was able to connect to Confluent Kafka with authentication by downgrading KEDA to version 1.5. https://keda.sh/docs/1.5/scalers/apache-kafka/ |

|

Hm, that's strange; unfortunately I am not able to reproduce that. I am using in-cluster Kafka (installed via Strimzi) and it is working (the same goes for our e2e tests, which are passing). Is there anything non-standard in your Kafka config? |

@harshik9 for that you used the same configuration of ScaledObject and TriggerAuthentication as mentioned above? |

|

@zroubalik Our Confluent Kafka is on Azure. With the KEDA 2.0 beta, the authentication parameters were sasl, username and password; with sasl=plaintext we were unable to connect. When I downgraded to KEDA 1.5, the authentication parameters were authMode, username and password. I set authMode to sasl_ssl_plain and this worked for me. I used the same ScaledObject as here --> https://keda.sh/docs/1.5/scalers/apache-kafka/ . There was no need to provide a ca, cert and key. The username was our Azure Confluent Kafka API key and the password was our Confluent Kafka API key secret. |
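A possible reading of the difference between the two versions: the 1.5 value `sasl_ssl_plain` bundles SASL/PLAIN with TLS, whereas in 2.x TLS appears to be controlled by a separate `tls` parameter, so `sasl=plaintext` on its own would attempt an unencrypted connection. The sketch below builds the `data` section of a Kubernetes Secret under that assumption. The `tls: enable` value is taken from my understanding of the 2.x scaler documentation, not from anything posted in this thread, and the function name is hypothetical.

```python
import base64


def confluent_cloud_auth_secret(api_key: str, api_secret: str) -> dict:
    """Build base64-encoded Secret data for a KEDA 2.x TriggerAuthentication.

    Assumption: sasl=plaintext together with tls=enable is the 2.x
    counterpart of the 1.5 authMode sasl_ssl_plain.
    """
    values = {
        "sasl": "plaintext",   # SASL/PLAIN mechanism
        "tls": "enable",       # assumed 2.x parameter, see lead-in
        "username": api_key,   # Confluent API key
        "password": api_secret,  # Confluent API key secret
    }
    # Kubernetes Secret `data` values must be base64-encoded strings.
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }


if __name__ == "__main__":
    for key, value in confluent_cloud_auth_secret("MY_KEY", "MY_SECRET").items():
        print(f"{key}: {value}")
```

Each key would then be referenced from the TriggerAuthentication through `secretTargetRef`, with the `parameter` name matching the key.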

|

I believe the issue was fixed by #1288; please reopen if the problem still persists. |

|

I was also facing the same issue with a newer version of KEDA, 2.4. |

|

@harshitak27 the following approach might help you: #2101 (comment). Let us know, and we can probably document this if it solves your issue. |

|

@zroubalik Thank you for the solution. So here's what I changed in the scaledobject.

Apart from the above changes and a few template changes, everything else remains the same. Also, I wanted to know what "ACTIVE" and "FALLBACK" mean. They show up when we list the ScaledObjects after deploying them. Thanks again @zroubalik |

|

Glad to hear that it works for you. Active shows whether scaling is active, e.g. there is some traffic in the trigger. As for Fallback, you can read about it in the docs: https://keda.sh/docs/2.4/concepts/scaling-deployments/ |

|

@harshitak27 Is there something that can be improved in the docs of our scaler / FAQ that could have helped you? Otherwise I think we can close this issue. |

|

It worked for me for confluent cloud |

|

Just a question: is the broker hostname relative to the namespace the scaled object is in or the KEDA controller? I'm having to put the full hostname of Kafka.kafkanamespace.svc.cluster.local:9092 to get connectivity despite the scaled object and broker being in the same namespace. I'd have expected Kafka:9092 to have worked like the documentation. |
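As far as I understand it, the Kafka client is created by the KEDA operator (the "error creating kafka client" message in this issue comes from the operator), so a short name like Kafka:9092 would be resolved through the DNS search path of the operator's Pod, i.e. relative to the namespace KEDA runs in, not the namespace of the ScaledObject. That would explain why only the fully qualified name works. A small sketch that builds such a name; the helper `service_fqdn` is hypothetical and `cluster.local` is assumed to be the cluster domain.

```python
def service_fqdn(service: str, namespace: str, port: int,
                 cluster_domain: str = "cluster.local") -> str:
    """Return the namespace-independent address of a Kubernetes Service.

    Follows the <service>.<namespace>.svc.<cluster-domain> naming scheme;
    the cluster domain defaults to "cluster.local", which is an assumption.
    """
    return f"{service}.{namespace}.svc.{cluster_domain}:{port}"


if __name__ == "__main__":
    # Example values taken from the comment above.
    print(service_fqdn("kafka", "kafkanamespace", 9092))
```

Using the fully qualified form in bootstrapServers keeps the ScaledObject working regardless of which namespace the operator is installed in.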

@zroubalik I am having the same issue with my Kafka cluster deployed with Strimzi, and I am not sure what I am doing wrong. I am using KEDA version 2.9.1 and want to authenticate using "scram_sha512". I have the following CRs: Did you configure something specific for Strimzi, or am I missing a parameter for scram_sha512? |

|

What do you see in KEDA operator logs? |

|

I get the following error message: I currently removed tls for my internal listener, but it doesn't change anything. As soon as I specify scram_sha512 for sasl I get this error message. When you test with Strimzi, which kafka version did you use? Did you have authentication set? Also did you specify a specific version in the ScaledObject metadata? |

|

OK, I managed to make it work with scram_sha512 and tls. I will post my CRs in the hope that they help others: ScaledObject TriggerAuthentication Secret |

|

@edubois10 thanks for sharing. So the problem was in missing |

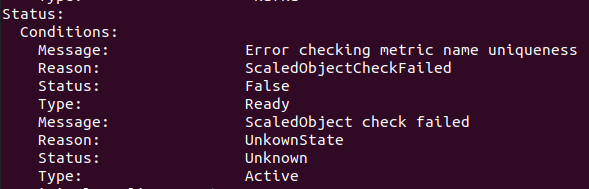

I am trying to set up KEDA for Confluent Kafka. I have tested different scenarios, but the error I get is

I deployed a scaled object as follows.

Scenario 1 without TriggerAuthentication

Scenario 2 with TriggerAuthentication

Expected Behavior

After the scaled object is deployed, an HPA should be created for it.

Actual Behavior

ERROR controllers.ScaledObject Failed to ensure HPA is correctly created for ScaledObject {"ScaledObject.Namespace": "keda", "ScaledObject.Name": "kafka-scaledobject", "error": "error getting scaler for trigger #0: error creating kafka client: kafka: client has run out of available brokers to talk to (Is your cluster reachable?)"}

Steps to Reproduce the Problem

Specifications

The API version of both ScaledObject and TriggerAuthentication is keda.sh/v1alpha1

Scaledobjectsandtriggerauthentications.txt
