-

Notifications

You must be signed in to change notification settings - Fork 38.7k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

add more scheduler benchmark testcases #69898

add more scheduler benchmark testcases #69898

Conversation

| map[string]string{"foo": ""}, | ||

| ) | ||

| testStrategy := testutils.NewCustomCreatePodStrategy(testBasePod) | ||

| nodeStrategy := testutils.NewLabelNodePrepareStrategy(apis.LabelZoneFailureDomain, "zone1") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I just put this simple scenario as a start.

But feel free to comment if we'd like a more typical scenario like "all nodes are split into X regions, Y zones", etc.

| } | ||

| basePod.Spec.Affinity = &v1.Affinity{ | ||

| PodAntiAffinity: &v1.PodAntiAffinity{ | ||

| RequiredDuringSchedulingIgnoredDuringExecution: []v1.PodAffinityTerm{ |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

similar like https://github.com/kubernetes/kubernetes/pull/69898/files#r225712449, it's the most basic case. We can extend it to have multiple terms, like region in X, zone in Y, etc.

|

/retest |

|

/test pull-kubernetes-integration |

| // PodAntiAffinity rules when the cluster has various quantities of nodes and | ||

| // scheduled pods. | ||

| func BenchmarkSchedulingAntiAffinity(b *testing.B) { | ||

| func BenchmarkSchedulingPodAntiAffinity(b *testing.B) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

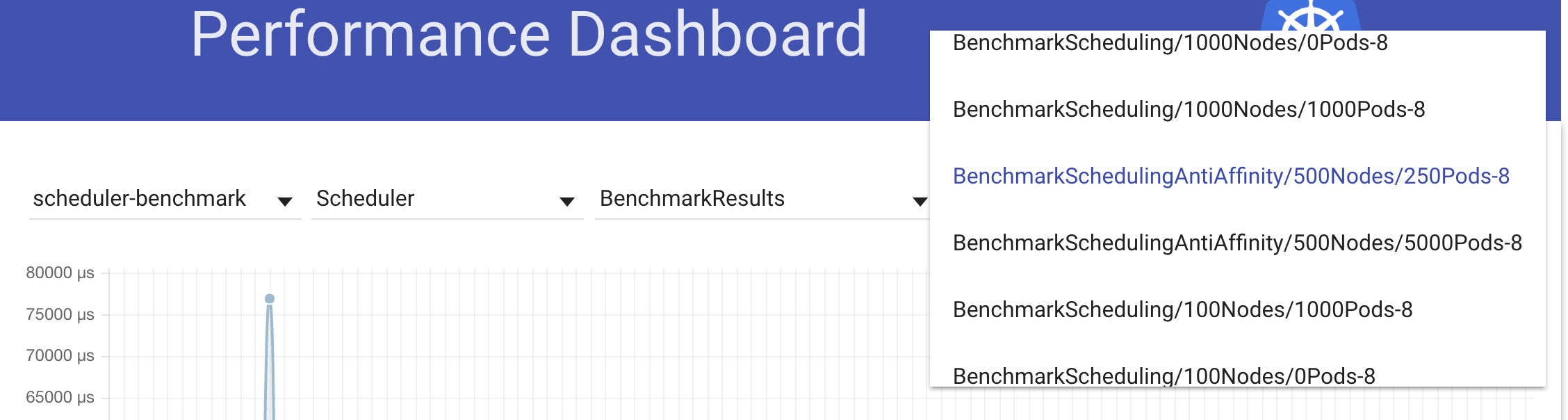

I am not sure where the code for the perf dashboard lives, but it is worth checking to make sure that renaming this function does not affect the dashboard.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure. From below snapshot, it's very likely it's running -bench=. option:

@wojtek-t @shyamjvs If function BenchmarkSchedulingAntiAffinity is renamed to BenchmarkSchedulingPodAntiAffinity, I guess old datapoints would stop growing and become stale? And maybe we need to do some trick (manually rename the local dataset file) to make old data connect with new data, so that the graph is still consistent.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not familiar with perf-dash.

@krzysied - can you please suggest something?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess old datapoints would stop growing and become stale

Yes. The new datapoints will by assigned to new function name. The old one will remain unchanged.

And maybe we need to do some trick (manually rename the local dataset file) to make old data connect with new data, so that the graph is still consistent.

Can be done. The question is whether we need this.

Regarding benchmarks, Perfdash presents 100 newest results. Assuming that there is one test every hour, after a week there will be only results with the new function name available.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| @@ -99,11 +148,66 @@ func makeBasePodWithAntiAffinity(podLabels, affinityLabels map[string]string) *v | |||

| return basePod | |||

| } | |||

|

|

|||

| // makeBasePodWithAffinity creates a Pod object to be used as a template. | |||

| // The Pod has a PodAntiAffinity requirement against pods with the given labels. | |||

| func makeBasePodWithAffinity(podLabels, affinityZoneLabels map[string]string) *v1.Pod { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please consider renaming this function to makeBasePodWithPodAffinity to be consistent.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done.

| for _, test := range tests { | ||

| name := fmt.Sprintf("%vNodes/%vPods", test.nodes, test.existingPods) | ||

| b.Run(name, func(b *testing.B) { | ||

| benchmarkScheduling(test.nodes, test.existingPods, test.minPods, defaultNodeStrategy, setupStrategy, testStrategy, b) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Shouldn't you use LabelNodePrepareStrategy instead of defaultNodeStrategy to make the test more meaningful?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done.

f27f80a

to

681a15e

Compare

|

/retest |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Just left a few review comments, thanks!

| } | ||

| // The setup strategy creates pods with no affinity rules. | ||

| setupStrategy := testutils.NewSimpleWithControllerCreatePodStrategy("setup") | ||

| // The test strategy creates pods with affinity for each other. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'd choose to move this comment to testStrategy := ... line below, ditto for BenchmarkSchedulingNodeAffinity

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done.

| func BenchmarkSchedulingPodAffinity(b *testing.B) { | ||

| tests := []struct{ nodes, existingPods, minPods int }{ | ||

| {nodes: 500, existingPods: 250, minPods: 250}, | ||

| {nodes: 500, existingPods: 5000, minPods: 250}, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I am a bit curious why we had different number of nodes and pods in BenchmarkScheduling VS BenchmarkSchedulingPodAffinity/AntiAffinity from the beginning? It should've been a good chance to do cross comparison.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In the past, we could hardly go beyond 500 nodes for inter-pod affinity/anti-affinity benchmarks without having test timeouts. With the improved performance of the feature, I think we should be able to run the test for 1000 node clusters with no problem.

| Spec: testutils.MakePodSpec(), | ||

| } | ||

| basePod.Spec.Affinity = &v1.Affinity{ | ||

| PodAntiAffinity: &v1.PodAntiAffinity{ |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Wait ... PodAntiAffinity in makeBasePodWithPodAffinity?

| PodAntiAffinity: &v1.PodAntiAffinity{ | |

| PodAffinity: &v1.PodAffinity{ |

Also, ditto the comment of this function :-)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Apologize... A copy/paste error..

Fixed.

681a15e

to

74522a9

Compare

|

/retest |

| // PodAntiAffinity rules when the cluster has various quantities of nodes and | ||

| // scheduled pods. | ||

| func BenchmarkSchedulingAntiAffinity(b *testing.B) { | ||

| func BenchmarkSchedulingPodAntiAffinity(b *testing.B) { | ||

| tests := []struct{ nodes, existingPods, minPods int }{ | ||

| {nodes: 500, existingPods: 250, minPods: 250}, | ||

| {nodes: 500, existingPods: 5000, minPods: 250}, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Now that you are at it, could you add nodes: 1000, existing: 1000, minPods: 500 test here and in the next test?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@bsalamat to confirm, would you like the following tests to be added this 1000-node test entry?

- BenchmarkSchedulingPodAntiAffinity

- BenchmarkSchedulingPodAffinity

- BenchmarkSchedulingNodeAffinity

And after that, our cases would be like this:

-

BenchmarkScheduling (have no affinity)

{nodes: 100, existingPods: 0, minPods: 100}, {nodes: 100, existingPods: 1000, minPods: 100}, {nodes: 1000, existingPods: 0, minPods: 100}, {nodes: 1000, existingPods: 1000, minPods: 100}, -

BenchmarkSchedulingPodAntiAffinity

{nodes: 500, existingPods: 250, minPods: 250}, {nodes: 500, existingPods: 5000, minPods: 250}, {nodes: 1000, existingPods: 1000, minPods: 500}, -

BenchmarkSchedulingPodAffinity

{nodes: 500, existingPods: 250, minPods: 250}, {nodes: 500, existingPods: 5000, minPods: 250}, {nodes: 1000, existingPods: 1000, minPods: 500}, -

BenchmarkSchedulingNodeAffinity

{nodes: 500, existingPods: 250, minPods: 250}, {nodes: 500, existingPods: 5000, minPods: 250}, {nodes: 1000, existingPods: 1000, minPods: 500},

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ping @bsalamat ^^

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Seems reasonable from my side :-)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Huang-Wei Looks good for now. I want to be able to try a larger number of nodes, but it has the risk of timing out in our CI/CD. Let's go with 1000 for now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

/assign |

- add benchmark test for PodAffinity - add benchmark test for NodeAffinity - add 1000-nodes test for PodAntiAffinity/PodAffinity/NodeAffinity

74522a9

to

5259d09

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

/lgtm

/approve

|

[APPROVALNOTIFIER] This PR is APPROVED This pull-request has been approved by: bsalamat, Huang-Wei The full list of commands accepted by this bot can be found here. The pull request process is described here

Needs approval from an approver in each of these files:

Approvers can indicate their approval by writing |

|

/test pull-kubernetes-e2e-gce |

What this PR does / why we need it:

This PR adds more benchmark testcases:

Special notes for your reviewer:

Motivation of this PR is to evaluate performance of implementation of Inter-PodAffinity is calculated on multiple pods. And NodeAffinity test is added as a bonus.

(based on this PR, here is an example of testing result, checkout the "before" sheet)

Release note:

/kind feature

/sig scheduling

/cc @resouer @bsalamat @misterikkit