
VMI error: failed to find a sourceFile in containerDisk........../disk: no such file or directory #5861

Comments

I remember there was a similar issue reported some time ago: #4613.

@vasiliy-ul I installed version 0.42.1, the previous error is now gone but I am seeing a new error:


You can try setting

Maybe we don't check it for all disk types. Definitely sounds like a bug.

I think the issue here is in kubevirt/pkg/virt-launcher/virtwrap/converter/converter.go (lines 258 to 268 in edf8b8f).

So eventually the driver cache mode is not detected for the cloudinit disk.

I tried this and it works. Thanks a lot!

Is this a BUG REPORT or FEATURE REQUEST?:

/kind bug

What happened:

The VMI failed with the error below:

failed to find a sourceFile in containerDisk containerdisk: Failed to check /proc/1/root/home/docker/node003/docker/devicemapper/mnt/d3575d97a42289078ac894d2002ad8e8d90a8d424a16d8d84bd8f7223e0a31c1/disk for disks: open /proc/1/root/home/docker/node003/docker/devicemapper/mnt/d3575d97a42289078ac894d2002ad8e8d90a8d424a16d8d84bd8f7223e0a31c1/disk: no such file or directory

What you expected to happen:

VMI to be in Running state.

How to reproduce it (as minimally and precisely as possible):

I have a CentOS 8 VM which is diskless.

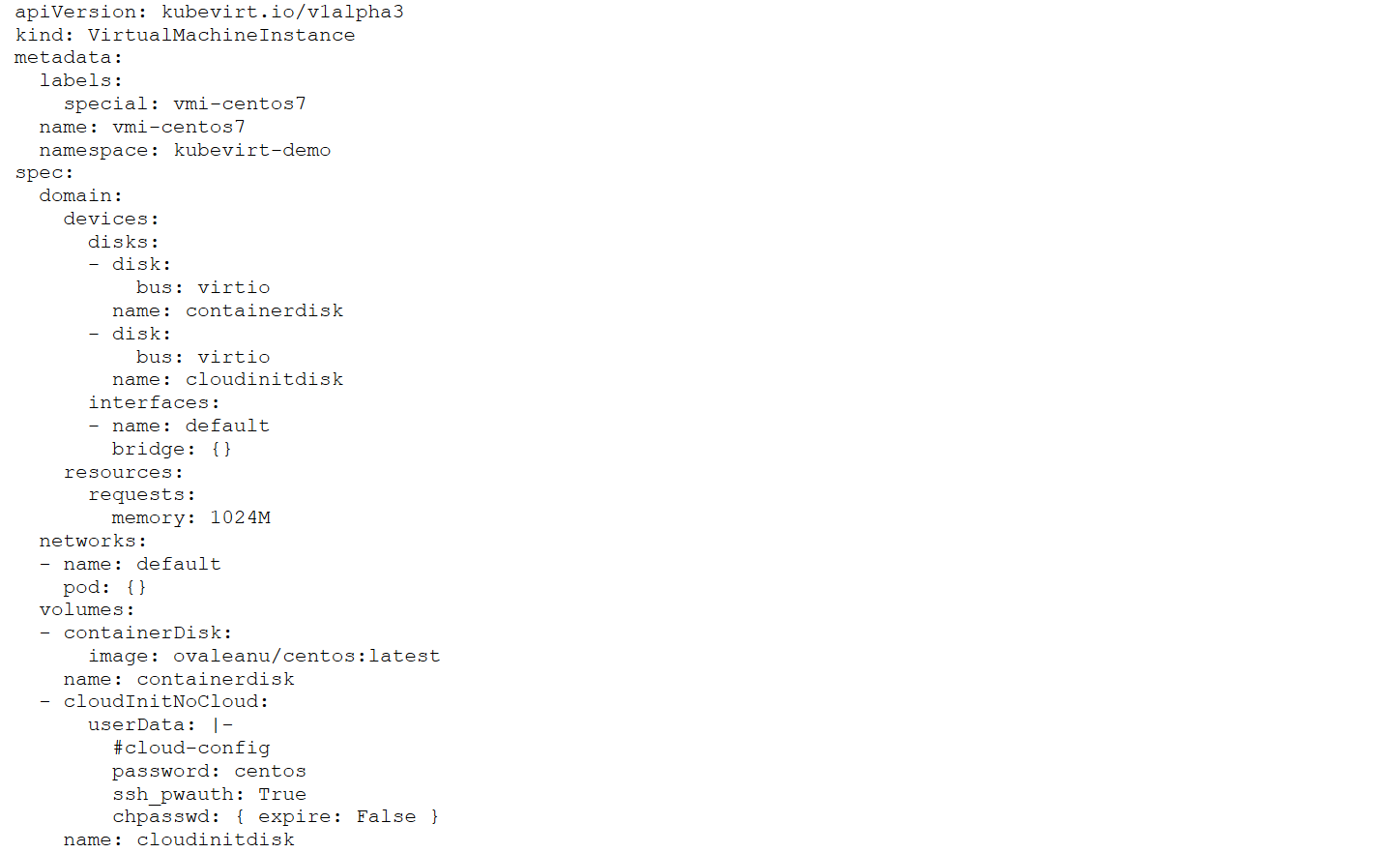

I am trying to execute the attached VMI specification. The VMI is created, but after some time it is in the Failed state.

I execute `kubectl describe vmi vmi_name -n kubevirt-demo` and then I see the above error in 'Events'.

Anything else we need to know?:

The VM on which I am trying to create the VMI is a diskless VM. Let me know if my attached VMI specification needs any changes for this setup.

I am able to run the same VMI specification successfully on another VM which is not diskless.

Environment:

- KubeVirt version (`virtctl version`): 0.35.0
- Kubernetes version (`kubectl version`): 1.21.0
- Kernel (`uname -a`): Linux node003 4.18.0-240.22.1.el8_3.x86_64 #1 SMP Thu Apr 8 19:01:30 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux