[BUG] cudaErrorMemoryAllocation with KOKKOS on Volta GPU #1473

Comments

@danicholson you are running out of memory:

@stanmoore1 Thanks for the reply. In a previous test, nvidia-smi did not report high memory usage. I wrote a script to check it every minute or so, and the usage was ~600 MiB at the last check before the error occurred. I also used the KOKKOS tool to check the GPU memory usage for a shorter simulation, and it was stationary around a similar value. Could this error be due to memory fragmentation?
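One way to interpret periodic memory checks like the ones above is to fit a trend line to all of the samples instead of reading only the last value before the crash. The sketch below assumes the samples have already been collected (for example, by parsing `nvidia-smi` output once a minute) into a vector of MiB values taken at a fixed interval. The function names and the threshold are hypothetical and are not part of LAMMPS or Kokkos.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: least-squares slope of memory samples (in MiB)
// against the sample index. Samples are assumed to be evenly spaced in time,
// so the result is "MiB per sampling interval".
double memory_slope(const std::vector<double>& samples_mib) {
  const std::size_t n = samples_mib.size();
  if (n < 2) return 0.0;  // no trend can be fitted to fewer than two points

  const double mean_x = (n - 1) / 2.0;  // mean of indices 0..n-1
  double mean_y = 0.0;
  for (double y : samples_mib) mean_y += y;
  mean_y /= static_cast<double>(n);

  double num = 0.0;  // sum of (x - mean_x) * (y - mean_y)
  double den = 0.0;  // sum of (x - mean_x)^2
  for (std::size_t i = 0; i < n; ++i) {
    const double dx = static_cast<double>(i) - mean_x;
    num += dx * (samples_mib[i] - mean_y);
    den += dx * dx;
  }
  return num / den;
}

// Hypothetical check: flags steady growth when the fitted slope exceeds a
// user-chosen threshold (MiB per sample). A stationary trace gives ~0.
bool looks_like_growth(const std::vector<double>& samples_mib,
                       double threshold_mib_per_sample) {
  return memory_slope(samples_mib) > threshold_mib_per_sample;
}
```

A slope near zero matches the "stationary around a similar value" observation. Note that `nvidia-smi` reports device memory, so growth in host memory would not appear in these samples.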

Yeah, that seems reasonable for your system size. The error mentions

This issue sounds very similar to #542.

I've checked with Valgrind and Kokkos profiling tools, and I don't see any memory growth over time. Can you describe how you are getting the LAMMPS source code? Are you cloning the Git repo? Just to be absolutely certain, you used either

I guess you are using CMake, so the comment about

For this test I cloned the repo fresh and checked out the unstable branch. I built using CMake (the command is in the issue description), so I did not execute

I can do a test run using global memory rather than texture memory. Would this just require declaring

Yes, though looking back at #542, it also failed with the exact same error, and the root cause was a memory leak, not texture memory, so I'm doubtful that will help.

I'm running this on a V100 GPU to see if I can reproduce the OOM. It will take several hours to reach 14 million timesteps, and it may need to run even longer, since the V100 has 16 GB of memory instead of the 12 GB on the Titan V.

Other than a memory leak, something else in the simulation could be blowing up and leading to a large memory allocation. You could try writing a restart file every 1 million timesteps. Then, after it fails at 14 million timesteps, read back in the latest restart file from before the failure and run it again. If it fails at the same spot as before, then it is probably something wrong with the simulation. If it runs for another 14 million timesteps and then fails, it could be a memory leak.
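The decision rule behind this restart test can be written down explicitly. The sketch below uses hypothetical names and assumes that the first run started at step 0 and that "the same spot" means within a chosen tolerance of steps. The restart files themselves would come from the LAMMPS `restart` command and be read back with `read_restart`.

```cpp
#include <cstdint>

// Hypothetical outcome of the restart test.
enum class RestartVerdict { SimulationProblem, LikelyLeak, Inconclusive };

// Hypothetical helper.
// first_fail:   step at which the original run crashed (assumed to start at step 0).
// restart_step: step stored in the restart file that was read back in.
// rerun_fail:   step at which the restarted run crashed.
// tolerance:    how many steps still count as "the same spot".
RestartVerdict classify_restart_test(std::int64_t first_fail,
                                     std::int64_t restart_step,
                                     std::int64_t rerun_fail,
                                     std::int64_t tolerance) {
  auto close_to = [tolerance](std::int64_t a, std::int64_t b) {
    return a - b <= tolerance && b - a <= tolerance;
  };
  // Crash at the same absolute step: the failure follows the simulation state.
  if (close_to(rerun_fail, first_fail)) return RestartVerdict::SimulationProblem;
  // Crash after about as many steps as the first run, counted from the restart:
  // the failure follows how long the process has been running, which fits a leak.
  if (close_to(rerun_fail - restart_step, first_fail)) return RestartVerdict::LikelyLeak;
  return RestartVerdict::Inconclusive;
}
```

For example, with a first crash near 13.95 million steps and a restart file from step 13 million, a rerun that crashes again near 13.95 million points at the simulation, while one that survives to roughly 27 million points at a leak.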

Thank you very much for your attention to this issue. I will run the test that you suggested. Based on a CPU-only run with the same input, this system should reach a stable equilibrium in a few nanoseconds, but I agree that it is worth checking.

Yes, I mean there may be a bug, triggered by very rare events, that causes an atom to explode out of the box or something like that.

This looks more like a memory leak than that type of bug, though; I just can't find any evidence yet.

I can reproduce this on V100. It failed just before 14 million timesteps.

@crtrott any ideas?

@danicholson I submitted another job on V100 with memory profiling to see if I can reproduce the memory growth you saw.

@stanmoore1 Just to clarify, I did not use the Kokkos profiling tools to monitor the memory. I used

@danicholson understood. Kokkos tools use

|

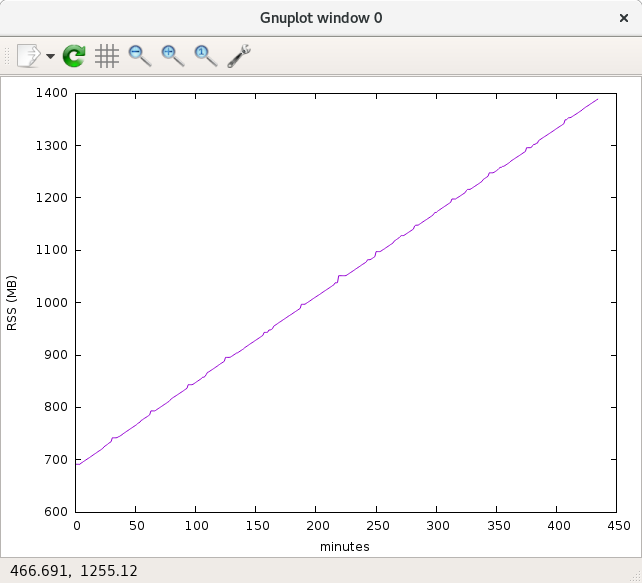

I do see significant host |

@danicholson I can confirm that the leak goes away if I don't use texture memory for

@danicholson my test shows #1474 fixes this issue. It could help performance a little too, since it isn't reallocating as often.
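The remark about "not reallocating as often" refers to a general pattern: grow a buffer only when the required size exceeds its current capacity, with some headroom, instead of reallocating whenever the size changes. The plain C++ sketch below illustrates that idea under stated assumptions. It is not the code from #1474, which is not shown here, and `GrowOnlyBuffer` and its growth factor are hypothetical.

```cpp
#include <cstddef>
#include <memory>

// Hypothetical grow-only buffer. It reallocates only when the requested size
// exceeds the current capacity and over-allocates by a growth factor, so small
// fluctuations in the required size do not trigger new allocations.
// Contents are NOT preserved on reallocation (it acts as a scratch buffer that
// is refilled after every resize).
class GrowOnlyBuffer {
 public:
  explicit GrowOnlyBuffer(double growth_factor = 1.5)
      : growth_factor_(growth_factor) {}

  // Ensure room for at least n elements; returns true if a reallocation happened.
  bool reserve(std::size_t n) {
    if (n <= capacity_) return false;  // existing storage is large enough
    std::size_t new_cap = static_cast<std::size_t>(n * growth_factor_);
    if (new_cap < n) new_cap = n;      // guard against a factor below 1
    data_ = std::make_unique<double[]>(new_cap);  // old storage is freed here
    capacity_ = new_cap;
    ++reallocations_;
    return true;
  }

  std::size_t capacity() const { return capacity_; }
  std::size_t reallocations() const { return reallocations_; }

 private:
  double growth_factor_;
  std::unique_ptr<double[]> data_;
  std::size_t capacity_ = 0;
  std::size_t reallocations_ = 0;
};
```

With the default factor of 1.5, required sizes of 100, 101, 99, 102, and 103 trigger a single allocation, whereas reallocating to the exact size whenever it changes would allocate five times.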

I created a small reproducer and reported this to the Kokkos library developers: kokkos/kokkos#2155. @danicholson thanks for the bug report.

@stanmoore1 Great, thank you for the investigation and the fix. I'm running it now, and the memory usage looks stable.

@danicholson sure, let us know if you see other issues.

Summary

My KOKKOS/CUDA simulation crashes with a cudaErrorMemoryAllocation error after ~14 million steps on a Titan V GPU. It is a molecular system without electrostatics.

LAMMPS Version and Platform

LAMMPS: 15 May 2019

OS: CentOS 7

GCC: 4.8.5

CUDA: 9.1

GPU: Titan V

CPU: Xeon E5-2630 v4

compiled with:

Expected Behavior

The script runs without issue on CPUs.

Actual Behavior

The simulation crashes with the following error:

Steps to Reproduce

Unfortunately, I don't have a small system that reproduces this error quickly. I run the script below with the default Kokkos settings, "-sf kk -k on g 1".

Further Information, Files, and Links

files:

relax.in.txt

relax_440K.data.txt

Call stack from cuda-gdb:
