
Can't connect to DO cluster: http proxy error #699

Comments

You can try to use the full path of …

Using …

@jakolehm, do you know whether there are still any issues related to snap here?

I also tried running the command … It looks like there isn't any problem with it.

@nevalla No known issues. @Ridzu95, does it work if you start Lens from a terminal where kubectl works?
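The question above relies on the fact that a child process inherits the environment of whatever launched it: a terminal where kubectl works has already exported whatever the credential helper needs, while an app started some other way may not have. A minimal Python sketch of that effect, assuming a hypothetical `DEMO_TOKEN` variable as a stand-in; passing an explicit `env` only simulates a launcher that never loaded the shell profile.

```python
import os
import subprocess
import sys
from typing import Mapping, Optional


def child_sees(var: str, env: Optional[Mapping[str, str]] = None) -> bool:
    """Start a child Python process and report whether `var` is non-empty in its environment.

    env=None: the child inherits this process's environment (like launching from a shell).
    An explicit mapping: the child sees only those variables (simulating another launcher).
    """
    code = f"import os, sys; sys.exit(0 if os.environ.get({var!r}) else 1)"
    result = subprocess.run([sys.executable, "-c", code], env=env)
    return result.returncode == 0


if __name__ == "__main__":
    os.environ["DEMO_TOKEN"] = "exported-in-my-shell"  # hypothetical variable
    print(child_sees("DEMO_TOKEN"))  # → True (inherited)
    print(child_sees("DEMO_TOKEN", env={"PATH": os.environ.get("PATH", "")}))  # → False
```

If Lens works from a terminal but not from its icon, the difference is usually something in that inherited environment.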

@jakolehm The logs I mentioned in my first message are the output I get when running Lens from a terminal, so no, unfortunately it doesn't work.

Got this reproduced, and I think I found the reason too. When opening Lens, snap sets … and … The issue is that there is no access token in that config file. I think the workaround is to add …
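The variable names in the comment above were lost from this copy, but a later comment in the thread mentions `XDG_CONFIG_HOME`. A minimal sketch of how a CLI following the XDG Base Directory spec picks its config file, assuming the credential helper behaves this way and keeps its token in `doctl/config.yaml` under the config home (the directory, file name, and snap path below are all assumptions for illustration). If the launcher points `XDG_CONFIG_HOME` somewhere else, the CLI reads a different file, which would match a config with no access token in it.

```python
import os
from pathlib import Path
from typing import Mapping


def config_home(env: Mapping[str, str]) -> Path:
    """Base config directory per the XDG Base Directory spec.

    Use $XDG_CONFIG_HOME when it is set to an absolute path; otherwise
    (unset, empty, or relative) fall back to $HOME/.config.
    """
    xdg = env.get("XDG_CONFIG_HOME", "")
    if xdg and os.path.isabs(xdg):
        return Path(xdg)
    return Path(env["HOME"]) / ".config"


def tool_config(env: Mapping[str, str], tool: str, filename: str) -> Path:
    """Where an XDG-following CLI would look for its config file."""
    return config_home(env) / tool / filename


if __name__ == "__main__":
    shell_env = {"HOME": "/home/alice"}
    # Hypothetical snap-style override: config home moved under the snap's data directory.
    snap_env = {"HOME": "/home/alice",
                "XDG_CONFIG_HOME": "/home/alice/snap/example-app/42/.config"}
    print(tool_config(shell_env, "doctl", "config.yaml"))
    print(tool_config(snap_env, "doctl", "config.yaml"))
```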

@nevalla, could we override …

Maybe. I think …

Update: I did not manage to make it work by adding a … This solution is not ideal, but it works fine, thanks to @nevalla's pointers.

Right... I might have created that directory too while debugging.

@Ridzu95, that solution was a real lifesaver for me today, thank you.

This solution works for me. @nevalla, is it possible to fix this XDG_CONFIG_HOME problem in a future release?

Just to add on, I was having similar issues as well as …

It turns out that Lens, when started by just clicking the Mac icon without sudo, didn't have the permissions to start the auth proxy it needs. It works perfectly now that I open it with sudo from the terminal.

Got the same problem :) Any chance someone could open a PR? :)

Yes, my problem is on AWS. I guess it is because aws-cli is needed to authenticate to the k8s cluster. Is there a more elegant solution to this problem?

I'm having issues with my team accessing clusters through the cluster connect feature in Lens. I'm guessing this has to do with the ~/Kubeconfig file. I'm currently using minikube on M1, and I created a team space and allowed access to specific users. They can see the team space but cannot access the cluster. I'm still doing some research, but here is what I think happens: when you add a cluster, Lens pulls it from your kubeconfig, and when you create a space it seems to keep using your own config rather than a new one shared throughout the space. When my team tries to access the clusters I shared, they get an SSL error that comes directly from that config. I think what needs to happen is that you pull a new config into the space and promote it, so that the users you invite to the space use the same one, which would resolve the proxy error. Please advise.

Hi, any updates?

Did you find a solution for AWS? I'm getting the same thing. kubectl is able to connect to the server, but when configuring Lens I get the error: Unable to locate credentials. You can configure credentials by running "aws configure".

Just a note on the previous replies, since version 110 is quite old now: if you use "current" instead of "110", it will patch the installed version.
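The "current" tip works because snapd keeps `/snap/<name>/current` as a symlink to the active revision, so a path through `current` follows upgrades while a numbered path stays pinned to an old revision. A self-contained sketch that rebuilds that layout in a temporary directory; the snap name and revision numbers are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def resolve_revision(snap_root: Path, name: str, rev: str) -> Path:
    """Real directory behind <snap_root>/<name>/<rev>; 'current' follows its symlink."""
    return (snap_root / name / rev).resolve()


def fake_snap_tree(root: Path, name: str, revisions: list, active: str) -> None:
    """Create numbered revision dirs plus a relative 'current' symlink to the active one."""
    for rev in revisions:
        (root / name / rev).mkdir(parents=True, exist_ok=True)
    os.symlink(active, root / name / "current")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        fake_snap_tree(root, "example-snap", ["110", "215"], active="215")
        print(resolve_revision(root, "example-snap", "current").name)  # → 215
        print(resolve_revision(root, "example-snap", "110").name)      # → 110
```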

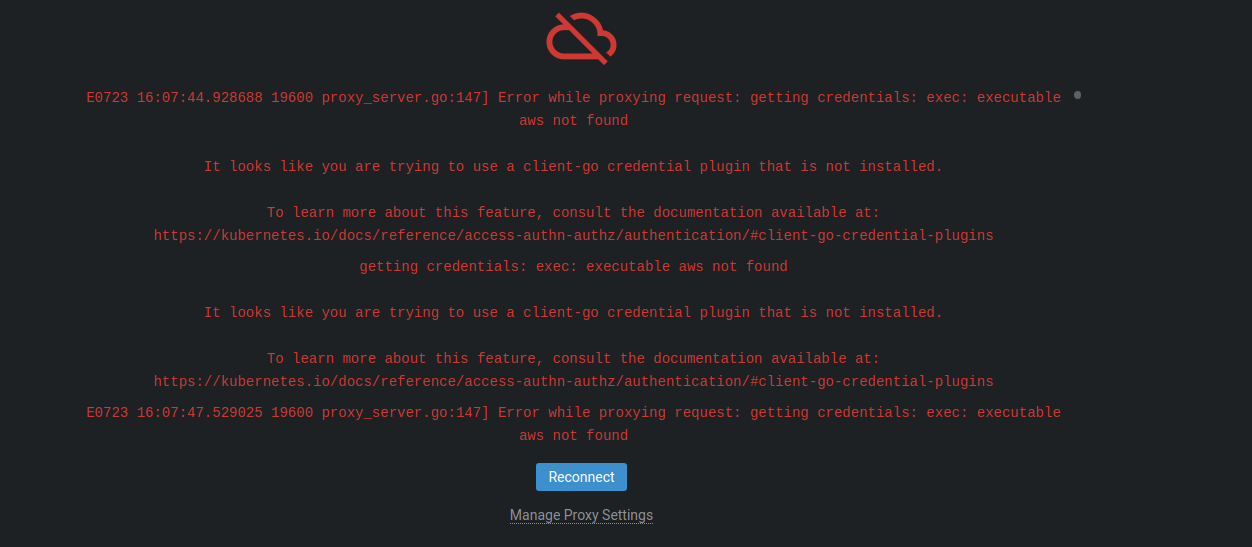

Describe the bug

Hello everyone, I cannot connect to my DigitalOcean cluster. After selecting my kubeconfig and trying to connect to the cluster, I get the error message "http: proxy error: getting credentials: exec: exit status 1".
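This message points at the kubeconfig's exec credential entry: the client runs a command to fetch a token, and a non-zero exit aborts the request. DigitalOcean kubeconfigs saved with `doctl` commonly use such an exec entry. A minimal Python sketch of that pattern; `get_credentials` and `CredentialError` are hypothetical names, and the error wording only mimics the message above rather than reproducing the actual Lens or client-go code.

```python
import subprocess
import sys
from typing import Sequence


class CredentialError(RuntimeError):
    """Raised when the credential command exits non-zero."""


def get_credentials(argv: Sequence[str]) -> str:
    """Run an exec-style credential command and return its stdout (the token payload).

    A non-zero exit is reported with wording modelled on the Lens error message.
    """
    result = subprocess.run(list(argv), capture_output=True, text=True)
    if result.returncode != 0:
        raise CredentialError(f"getting credentials: exec: exit status {result.returncode}")
    return result.stdout


if __name__ == "__main__":
    # Stand-ins for a real helper: one prints a token, one fails as a misconfigured helper might.
    ok = [sys.executable, "-c", "print('token-123')"]
    failing = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(get_credentials(ok).strip())  # → token-123
    try:
        get_credentials(failing)
    except CredentialError as err:
        print(f"http: proxy error: {err}")  # → http: proxy error: getting credentials: exec: exit status 1
```

Running the exec command from the kubeconfig by hand, in the same environment Lens gets, is usually the quickest way to see why it exits with status 1.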

To Reproduce

Expected behavior

As explained in various tutorials, no further configuration is needed, so it should just work.

Screenshots

Environment:

Logs:

Kubeconfig:

Thanks in advance for your help!
