[BUG] Failed replicas not being cleared from node #2461

Comments


@dbpolito Can you check the


I don't remember customizing that, so I guess it's the default: That's not even customizable in the Helm chart: https://github.com/longhorn/charts/blob/master/charts/longhorn/templates/storageclass.yaml#L21 What unit is this? Minutes? Hours? Days?


Could you help check whether there are any orphan replica directories under the host path? Right now, if the node ever went down and came back, Longhorn no longer has any information about which replicas are orphaned (i.e., Longhorn did not scan the
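For anyone who wants to run that check by hand, here is a minimal sketch of one way to do it. It assumes you pass in the replica directory yourself (the `replica_root` argument is a placeholder, not a path taken from this thread) along with the set of replica directory names Longhorn still knows about, which you would have to collect separately. The function names are hypothetical and this is not part of Longhorn.

```python
from __future__ import annotations

import os
from pathlib import Path


def allocated_bytes(path: Path) -> int:
    """Sum the disk space actually allocated to regular files under path.

    Uses st_blocks (512-byte units, Unix only) rather than st_size, because
    sparse files can report an apparent size far larger than what they
    occupy on disk.
    """
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = Path(root) / name
            if file_path.is_symlink() or not file_path.is_file():
                continue
            total += file_path.stat().st_blocks * 512
    return total


def find_orphan_dirs(
    replica_root: Path, known_names: set[str]
) -> list[tuple[str, int]]:
    """Return (name, allocated bytes) for each subdirectory of replica_root
    whose name is not in known_names.

    known_names is an assumption of this sketch: the caller supplies the
    directory names that are still tracked.
    """
    orphans = []
    for entry in sorted(replica_root.iterdir()):
        if entry.is_dir() and entry.name not in known_names:
            orphans.append((entry.name, allocated_bytes(entry)))
    return orphans
```

The sketch only reports candidates and never deletes anything, so each reported directory should still be verified before it is removed manually.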


I left some quick thoughts on how replica deletion could be improved to ensure cleanup of replica data here:

Describe the bug

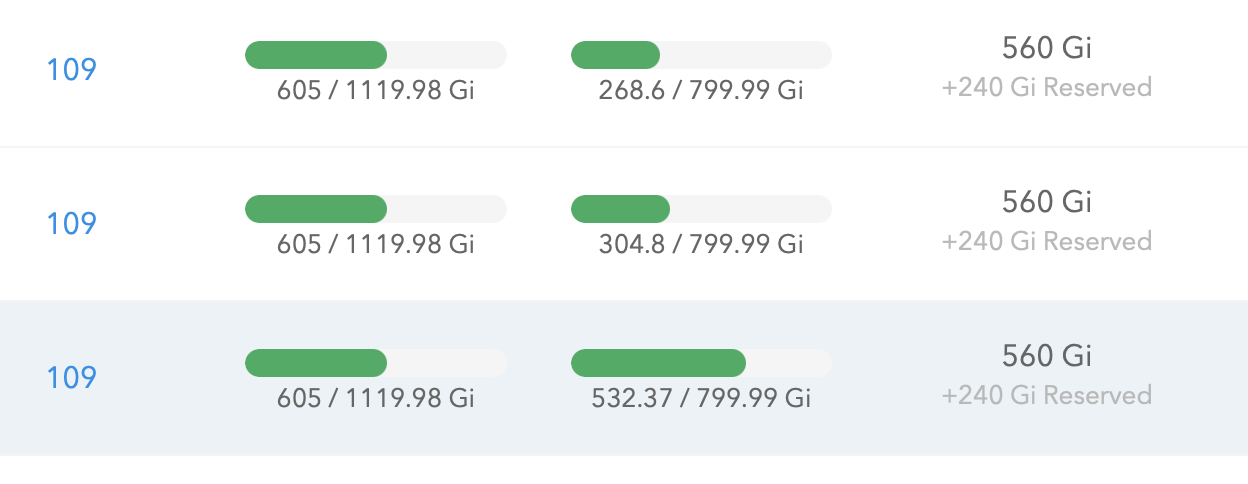

I had some instability on one node that caused some replicas to become corrupted and get rebuilt, but it seems that the disk space used by the failed replicas wasn't freed:

Environment:

I'm not sure that's really the problem, but the only thing I noticed is that this node's disk usage increased after all these replica rebuilds...
