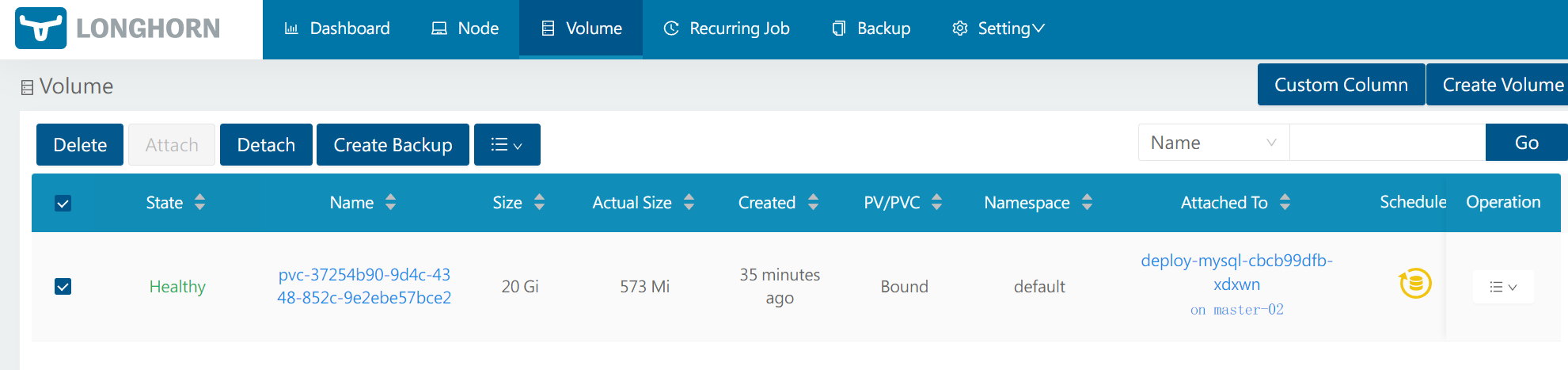

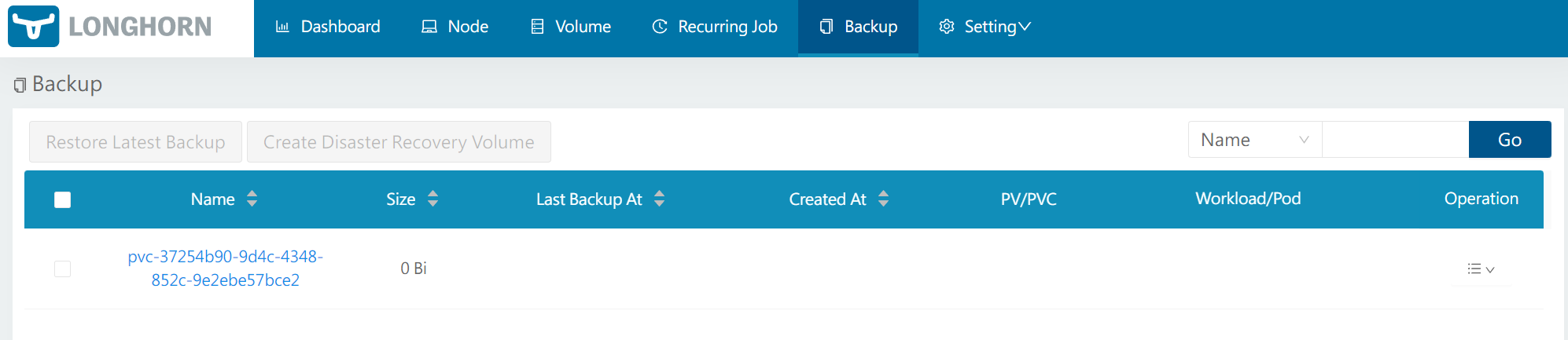

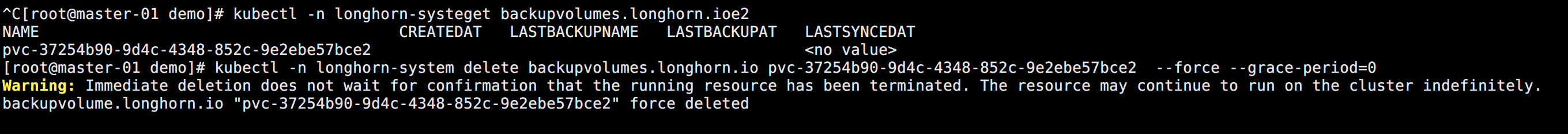

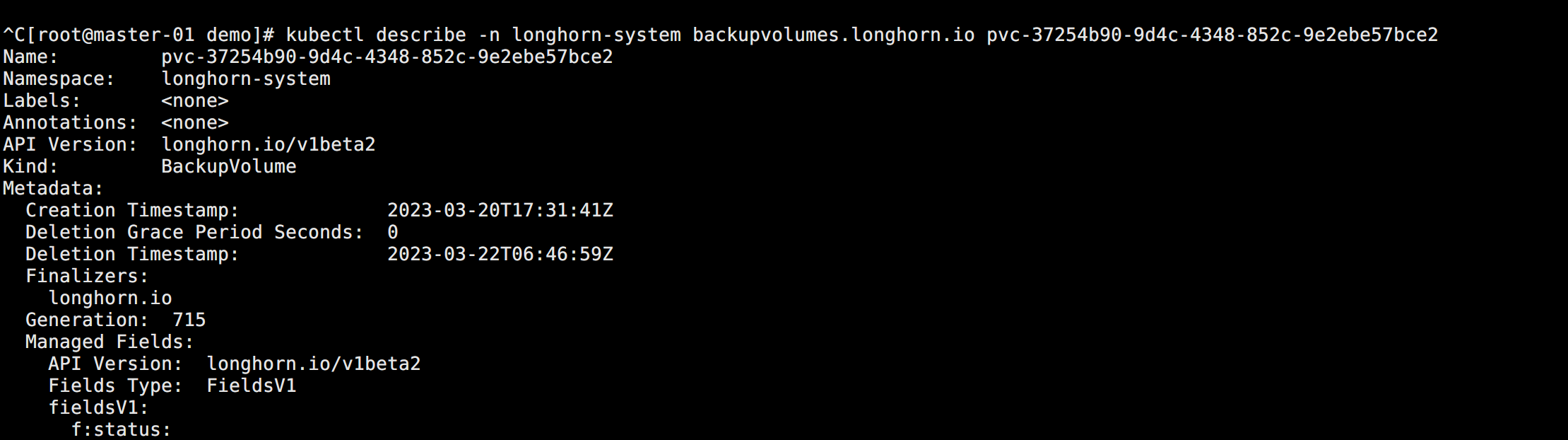

[BUG] This is an empty backup, but I cannot delete it. #5643

Comments

Can you check the logs in the instance-manager-r pods? This is usually caused by a permission issue.

I checked. After I tried the delete again, the instance-manager-r pods logged nothing.

Any clue in the longhorn-manager pods?

The deletion is async. The status Can you provide us with the support bundle?

Just saw a lot of errors like this:

2023-03-23T01:07:46.806906232+08:00 time="2023-03-22T17:07:46Z" level=error msg="Error clean up remote backup volume" backupVolume=pvc-37254b90-9d4c-4348-852c-9e2ebe57bce2 controller=longhorn-backup-volume error="error deleting backup volume: failed to execute: /var/lib/longhorn/engine-binaries/longhornio-longhorn-engine-v1.4.1/longhorn [backup rm --volume pvc-37254b90-9d4c-4348-852c-9e2ebe57bce2 nfs://192.168.2.21:/data/backup], output cannot mount nfs 192.168.2.21:/data/backup: vers=4.0: failed to execute: mount [-t nfs4 -o nfsvers=4.0 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\n, error exit status 32: vers=4.1: failed to execute: mount [-t nfs4 -o nfsvers=4.1 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\n, error exit status 32: vers=4.2: failed to execute: mount [-t nfs4 -o nfsvers=4.2 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\n, error exit status 32: Cannot mount using NFSv4\n, stderr, time=\"2023-03-22T17:07:46Z\" level=error msg=\"cannot mount nfs 192.168.2.21:/data/backup: vers=4.0: failed to execute: mount [-t nfs4 -o nfsvers=4.0 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\\n, error exit status 32: vers=4.1: failed to execute: mount [-t nfs4 -o nfsvers=4.1 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\\n, error exit status 32: vers=4.2: failed to execute: mount [-t nfs4 -o nfsvers=4.2 -o actimeo=1 192.168.2.21:/data/backup /var/lib/longhorn-backupstore-mounts/192_168_2_21/data/backup], output mount.nfs4: access denied by server while mounting 192.168.2.21:/data/backup\\n, error exit status 32: Cannot mount using NFSv4\"\n, error exit status 1" node=master-01@goer3

Could you help us check that the NFS server is running and the ACL is correct (this KB might help)? Then could you try to mount
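A manual mount check of this kind can be sketched in Python. This is only a sketch under assumptions: it reuses the export address and the mount options that appear in the error log (`-t nfs4`, `nfsvers=4.0`/`4.1`/`4.2`, `actimeo=1`), while the helper names `build_mount_cmd` and `try_mounts` and the mount point `/mnt/longhorn-nfs-test` are hypothetical and not part of Longhorn. It defaults to a dry run that only prints the commands; running the real mounts would need root on the affected node and an existing empty mount point.

```python
import subprocess

# Values copied from the error log above.
NFS_EXPORT = "192.168.2.21:/data/backup"
NFS_VERSIONS = ("4.0", "4.1", "4.2")


def build_mount_cmd(version, export, mount_point):
    """Return the mount command shown in the log for one NFS version."""
    return [
        "mount", "-t", "nfs4",
        "-o", f"nfsvers={version}",
        "-o", "actimeo=1",
        export, mount_point,
    ]


def try_mounts(export, mount_point, dry_run=True):
    """Try each NFS version in turn; return {version: (exit_code, stderr)}.

    With dry_run=True nothing is executed and every entry is (None, "").
    """
    results = {}
    for version in NFS_VERSIONS:
        cmd = build_mount_cmd(version, export, mount_point)
        if dry_run:
            print(" ".join(cmd))
            results[version] = (None, "")
            continue
        proc = subprocess.run(cmd, capture_output=True, text=True)
        results[version] = (proc.returncode, proc.stderr.strip())
        if proc.returncode == 0:
            # Mounted successfully: unmount again and stop trying.
            subprocess.run(["umount", mount_point], check=False)
            break
    return results


if __name__ == "__main__":
    # Hypothetical mount point; create it before a real (non-dry) run.
    try_mounts(NFS_EXPORT, "/mnt/longhorn-nfs-test", dry_run=True)
```

If a real run reports `access denied by server` for every version, as in the log, the node is most likely being rejected by the NFS server's export rules rather than by anything inside Longhorn.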

|

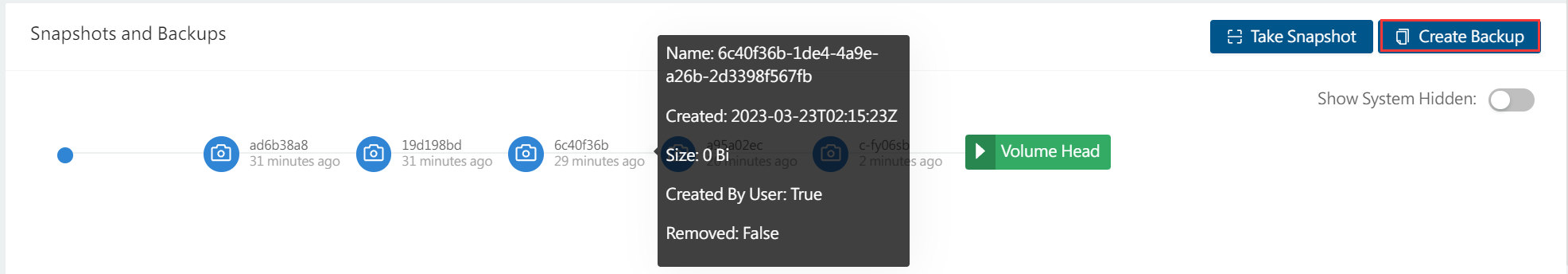

Your backups failed because the icon is |

|

The backups are generated successfully from your image. |

|

Can you check backup resources' status like |

|

Could you provide the nfs server setting in |

|

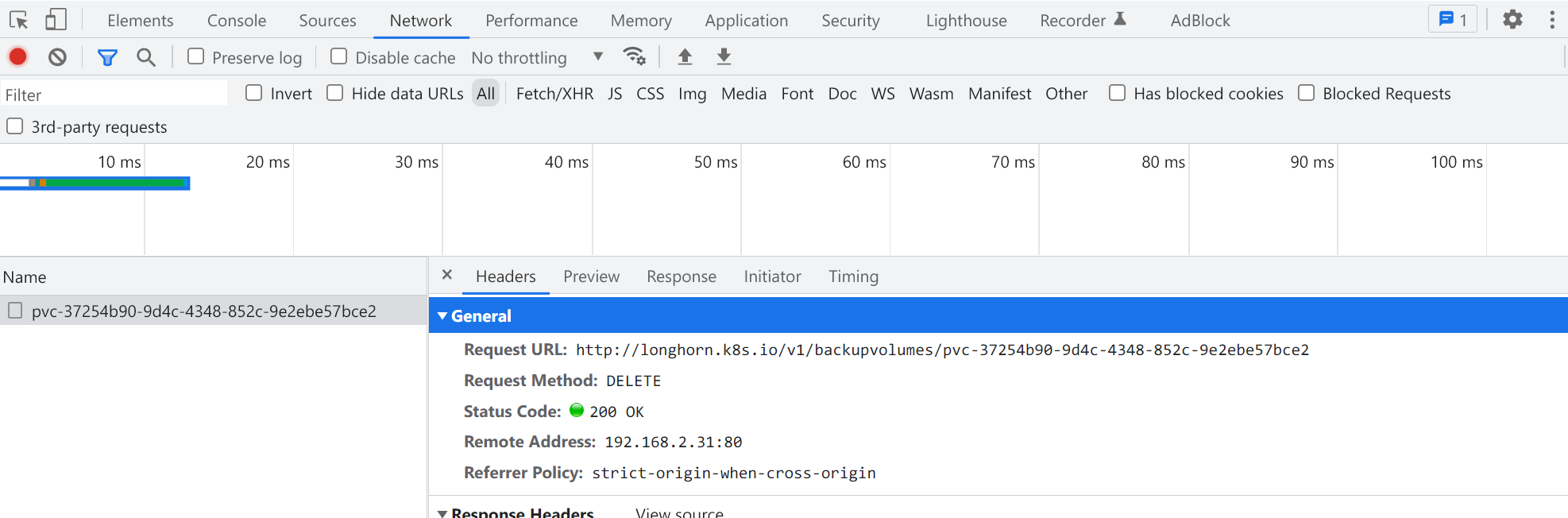

/etc/exports:

When I run the command:

Then I edited this backupvolumes resource and changed

Thank you very much! @mantissahz @derekbit @innobead

Describe the bug (🐛 if you encounter this issue)

Expected behavior

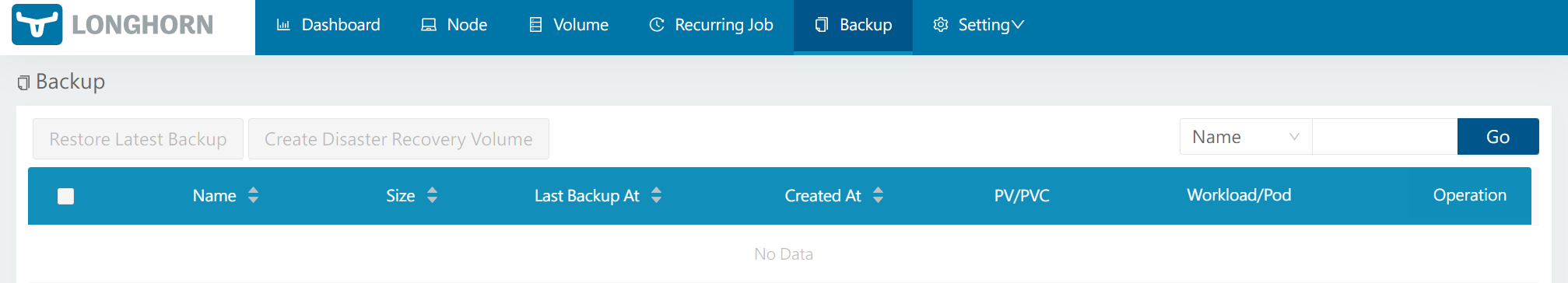

I deleted all PVs and PVCs with --force, and now I cannot delete this folder. It is empty!

Environment
