EfficientNET.onnx does not run in TensorRT #167

Comments


There was an error during engine creation, hence `engine` is None. You have to provide more information about how your engine is created, because engine creation can fail for various reasons.
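To make this kind of failure easier to diagnose, a small guard can turn a `None` engine into an explicit error before any method is called on it. This is only a sketch: `collect_parser_errors` and `require_engine` are hypothetical helper names, and it assumes the parser object exposes `num_errors` and `get_error(i)` the way the ONNX parser in the TensorRT Python samples does.

```python
def collect_parser_errors(parser):
    """Return the parser's recorded errors as strings.

    Assumes `parser` exposes `num_errors` and `get_error(i)`, as the ONNX
    parser used in the TensorRT Python samples does.
    """
    return [str(parser.get_error(i)) for i in range(parser.num_errors)]


def require_engine(engine, parser=None):
    """Return `engine` unchanged, or raise a descriptive error if it is None."""
    if engine is not None:
        return engine
    details = collect_parser_errors(parser) if parser is not None else []
    message = "TensorRT engine creation failed (engine is None)."
    if details:
        message += " ONNX parser reported: " + "; ".join(details)
    raise RuntimeError(message)
```

Wrapping the result of the engine-building call in `require_engine(...)` fails at the point of creation, with whatever the parser reported, instead of later with an attribute error on `None`.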

I use the TensorRT Python sample to create the engine. I had a problem converting EfficientNet from PyTorch to ONNX: ONNX can't export SwishImplementation. I use this line to convert.

Is it possible that this layer causes the problem?
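One workaround that may be worth trying is to swap the custom Swish for an equivalent built from primitive ops before exporting, since `x * sigmoid(x)` maps onto ordinary Sigmoid and Mul nodes. The sketch below is not taken from this thread: `ExportableSwish`, `replace_activations`, and `export_to_onnx` are hypothetical names, and the class name passed to `replace_activations` (for example `"MemoryEfficientSwish"`) is an assumption about how the EfficientNet implementation names its activation module.

```python
import torch
import torch.nn as nn


class ExportableSwish(nn.Module):
    """Swish written with primitive ops (sigmoid and multiply) only."""

    def forward(self, x):
        return x * torch.sigmoid(x)


def replace_activations(module, target_class_name, factory=ExportableSwish):
    """Recursively replace every submodule whose class name matches.

    Returns the number of submodules that were replaced.
    """
    replaced = 0
    for name, child in list(module.named_children()):
        if type(child).__name__ == target_class_name:
            setattr(module, name, factory())
            replaced += 1
        else:
            replaced += replace_activations(child, target_class_name, factory)
    return replaced


def export_to_onnx(model, dummy_input, path):
    """Export in eval mode with explicit input and output names."""
    model.eval()
    torch.onnx.export(
        model,
        dummy_input,
        path,
        input_names=["input"],    # names chosen here for illustration
        output_names=["output"],
    )
```

A typical call would be `replace_activations(model, "MemoryEfficientSwish")` followed by `export_to_onnx(model, torch.randn(1, 3, 224, 224), "efficientnet.onnx")`, where the input shape and file name are placeholders. The replacement only changes how the activation is expressed, not its values, so the trained weights stay valid.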


Finally, I could run the EfficientNet model using this environment:


@Soroorsh Hey, did you give any output names? I am getting the same error.

Hey, I've used this code:


@kochsebastian I am facing the same issue with the TensorRT model. As soon as I convert the ONNX model to TensorRT, the performance drops completely.

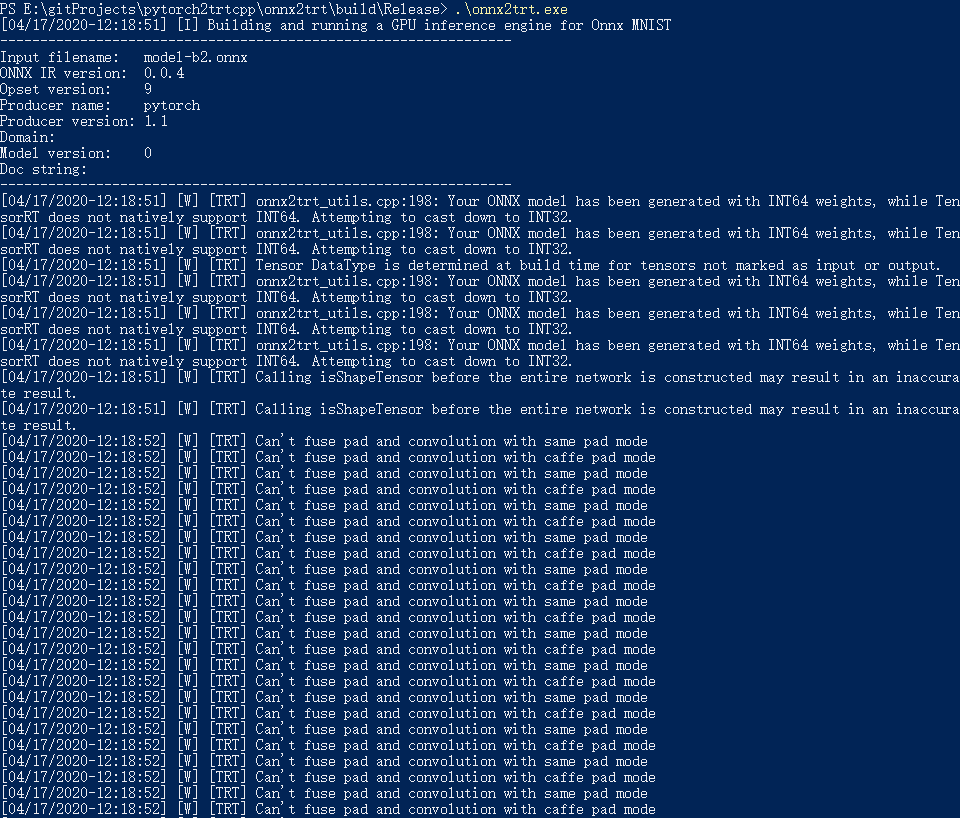

Hi,

I got this error when running an EfficientNet model, converted from PyTorch to ONNX, in TensorRT:

Can anybody help me?

TensorRT version: 6.1.05

PyTorch: 1.1.0

ONNX: 1.5.0
