
Governing equation for the contrast agent concentration #108


Thank you for your quick reply, @lululxvi. I tried your suggestions. Interestingly, when I reduced the time domain from 0.5 s to 0.1 s, the prediction became worse. Normally it should get better, right? The density of collocation points increases. I also tried more training points (15000 domain and boundary points, 5000 initial condition points). However, the train and test loss curves from different runs (with 5000 to 15000 training points) stay very similar: they just flatten out. I also suspected the model complexity and the learning rate, so I tried a different network size ([40]*6) with learning rates of 1.5e-3 and 3e-3, but it didn't get any better. Should I keep adding training points, train longer, and make the model more complex? The following losses are from one of my training runs. Do you think I have too few initial condition points?
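A little arithmetic checks the density argument in the comment above. The sketch assumes the 20 mm by 2 mm channel from the original post, expressed in metres. It also uses a hypothetical helper, `collocation_density`, which is not part of DeepXDE. It only counts points per unit space-time volume and says nothing about how DeepXDE actually samples them.

```python
# Hypothetical helper: domain collocation points per unit space-time volume.
# Assumes the 20 mm x 2 mm channel from the original post, in metres.
LX, LY = 0.020, 0.002  # channel length and height [m]


def collocation_density(num_domain, t_end, lx=LX, ly=LY):
    """Points per (m^2 * s) for num_domain points in [0, lx] x [0, ly] x [0, t_end]."""
    return num_domain / (lx * ly * t_end)


dense = collocation_density(5000, t_end=0.1)   # shorter time window
sparse = collocation_density(5000, t_end=0.5)  # original time window
print(f"density ratio (0.1 s vs 0.5 s): {dense / sparse:.1f}")  # prints 5.0
```

This confirms only that the same number of points is five times denser in the shorter window. It does not by itself explain the worse prediction reported above.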


The loss is too large. See "Q: I failed to train the network or get the right solution, e.g., the training loss is large." in the FAQ.


Hi lulu, I have more or less solved my problem. I adjusted the ratio of domain, boundary, and initial condition points and used the argument … Thank you for making this awesome deep learning library.


@iMTimmyyy, would it be possible to upload your final code?

Sure, here you go.

```python
from __future__ import absolute_import

import matplotlib.pyplot as plt


def main():
    ...
```


@iMTimmyyy Thank you |

Dear @lululxvi,

Hi, I am trying to model and solve the following equation:

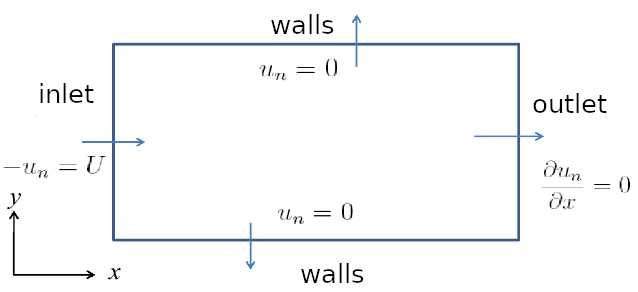

over a rectangular domain like this (20 mm by 2 mm):

The upper wall, the lower wall, and the outlet have zero-gradient boundary conditions. The inlet has a Dirichlet boundary condition for the concentration. The initial concentration is zero everywhere, and the velocity is 0.2 m/s in the x-direction.
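The boundary and initial conditions described above can be written as plain NumPy selector functions. These use the `(x, on_boundary)` style that DeepXDE boundary conditions use. This is a minimal sketch, not the author's script. It assumes SI units, so the 20 mm by 2 mm channel becomes 0.02 m by 0.002 m, consistent with u = 0.2 m/s. It also assumes each point is laid out as `[x, y, t]`. The function names are hypothetical.

```python
import numpy as np

# Assumed SI units so that lengths match the velocity u = 0.2 m/s.
LX, LY = 0.020, 0.002  # channel length (x) and height (y) [m]


# Each point is [x, y, t]; on_boundary says whether it lies on the spatial boundary.
def on_inlet(x, on_boundary):
    # Dirichlet boundary at x = 0
    return on_boundary and np.isclose(x[0], 0.0)


def on_outlet(x, on_boundary):
    # Zero-gradient boundary at x = LX
    return on_boundary and np.isclose(x[0], LX)


def on_walls(x, on_boundary):
    # Zero-gradient boundaries at y = 0 (lower wall) and y = LY (upper wall)
    return on_boundary and (np.isclose(x[1], 0.0) or np.isclose(x[1], LY))


def initial_concentration(points):
    # c(x, y, 0) = 0 everywhere; points has shape (N, 3), result has shape (N, 1)
    return np.zeros((len(points), 1))
```

In DeepXDE, selectors like these would be passed to its Dirichlet and Neumann boundary-condition classes, with a zero Neumann value for the zero-gradient boundaries. Check the exact class names and signatures against the installed version.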

My script is as follows:

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import matplotlib.pyplot as plt
import numpy as np

import deepxde as dde
from deepxde.backend import tf


def main():
    ...


if __name__ == "__main__":
    main()
```

Originally, I used a constant value for the Dirichlet condition (`func_one`, with 5000 domain and 3000 boundary points), and it worked fine. However, when I changed the Dirichlet condition to sin²(t/0.005), training behaved strangely. The test loss is much lower than the train loss (e.g., train loss around 1e-1, test loss around 1e-4 to 1e-5). The prediction is also very different from the numerical solution.
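The time-varying inlet makes the problem considerably harder, which may help explain the behaviour described above. Since sin²(a) = (1 − cos 2a)/2, the inlet value sin²(t/0.005) repeats every π·0.005 ≈ 0.0157 s. The flow carries each period downstream as a concentration wave. The sketch below only does this arithmetic with the numbers from the post. It assumes SI units and ignores diffusion, whose coefficient is not given here. The names are illustrative, not from the author's script.

```python
import numpy as np

T_SCALE = 0.005  # time scale in the inlet condition sin^2(t / 0.005) [s]
U = 0.2          # axial velocity [m/s]
LX = 0.020       # channel length, 20 mm [m]


def inlet_concentration(points):
    # Dirichlet value at the inlet; points has shape (N, 3) with columns [x, y, t]
    t = points[:, 2:3]
    return np.sin(t / T_SCALE) ** 2


# sin^2(a) = (1 - cos(2a)) / 2, so the inlet signal has period pi * T_SCALE
period = np.pi * T_SCALE
# Pure advection carries one period over a distance U * period
wavelength = U * period

print(f"inlet period:        {period * 1e3:.1f} ms")      # 15.7 ms
print(f"advected wavelength: {wavelength * 1e3:.2f} mm")  # 3.14 mm
print(f"waves along channel: {LX / wavelength:.1f}")      # 6.4
for t_end in (0.1, 0.5):
    print(f"inlet periods in [0, {t_end}] s: {t_end / period:.1f}")  # 6.4, then 31.8
```

Under these assumptions, the network has to represent about six concentration waves along the channel and about 32 inlet periods over 0.5 s. A constant inlet has no such structure. Note also that sin²(0) = 0, so this inlet value is consistent with the zero initial condition at t = 0.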

The Prediction:

Numerical Solutions:

In addition, when I tried to add the `num_test` argument to `TimePDE`, I got the following error:

```
Traceback (most recent call last):
  File "C:/Users/timmy/PycharmProjects/examples/CA_Conc.py", line 111, in <module>
    main()
  File "C:/Users/timmy/PycharmProjects/examples/CA_Conc.py", line 51, in main
    geomtime, pde, [bc_l, bc_r, bc_up, bc_low, ic], num_domain=5000, num_boundary=3000, num_initial=150, num_test=1000)
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\data\pde.py", line 162, in __init__
    num_test=num_test,
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\data\pde.py", line 45, in __init__
    self.test()
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\utils.py", line 23, in wrapper
    return func(self, *args, **kwargs)
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\data\pde.py", line 89, in test
    self.test_x = self.test_points()
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\data\pde.py", line 191, in test_points
    return self.geom.uniform_points(self.num_test)
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\geometry\timedomain.py", line 53, in uniform_points
    nt = int(np.ceil(n / nx))
ZeroDivisionError: division by zero

Process finished with exit code 1
```

I also got the following error when I added `metrics=["l2 relative error"]` to `model.compile("adam", lr=1.5e-3)`:

```
  File "C:\Users\timmy\AppData\Roaming\Python\Python37\site-packages\deepxde\metrics.py", line 15, in l2_relative_error
    return np.linalg.norm(y_true - y_pred) / np.linalg.norm(y_true)
TypeError: unsupported operand type(s) for -: 'NoneType' and 'float'
```
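The `TypeError` shows that `y_true` is `None` inside `l2_relative_error`, so there were no reference values to compare the prediction with. My understanding of DeepXDE is that the test targets come from a reference solution passed to the data object, via the `solution` argument of `TimePDE`; check this against the installed version. If so, this metric can only be used when an exact or reference solution is available. The sketch below reproduces the formula from `metrics.py` shown in the traceback, with an explicit check for the missing reference. It is a stand-alone illustration, not DeepXDE's code.

```python
import numpy as np


def l2_relative_error(y_true, y_pred):
    """||y_true - y_pred||_2 / ||y_true||_2, the formula shown in the traceback."""
    if y_true is None:
        # The situation in the traceback: no reference values were provided.
        raise ValueError("l2 relative error needs reference values (y_true is None)")
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.linalg.norm(y_true - y_pred) / np.linalg.norm(y_true)


# Hypothetical values, e.g. a numerical solution sampled at a few test points
reference = np.array([[3.0], [4.0]])
prediction = np.array([[3.0], [0.0]])
print(l2_relative_error(reference, prediction))  # 0.8
```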

Could you help me identify what might be wrong?

Thank you in advance.
