
Unable to use GPU, regardless of TrainerConfig(gpus=) setting #16

Comments

Additional details: Model configs = data_config = DataConfig( model_config = TabNetModelConfig( tabular_model = TabularModel(

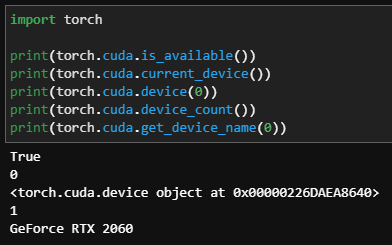

Can you run the below code and check if your PyTorch installation is using GPU?
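A minimal sketch of such a check, assuming a standard PyTorch installation. The snippet originally posted with this comment is not shown here, so this is an illustration rather than the maintainer's exact code, and the helper name `cuda_report` is hypothetical:

```python
def cuda_report():
    """Return a small dict describing what PyTorch can see.

    `cuda_report` is an illustrative helper, not part of PyTorch
    or PyTorch Tabular.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    # True only if PyTorch was built with CUDA and a usable GPU is visible.
    available = torch.cuda.is_available()
    count = torch.cuda.device_count() if available else 0
    return {
        "torch_installed": True,
        "cuda_available": available,
        # Human-readable name of each visible GPU, by device index.
        "devices": [torch.cuda.get_device_name(i) for i in range(count)],
    }


if __name__ == "__main__":
    print(cuda_report())
```

If `cuda_available` comes back `False`, the problem is likely in the PyTorch/CUDA installation rather than in the `TrainerConfig` settings.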

Okay, so PyTorch is seeing the GPU. Can you try giving

@rafaljanwojcik if [0] or None is using the GPUs, I think something fishy is going on. Which version of PyTorch Lightning are you running? I think I need to take another look at the GPU setting. PyTorch Lightning has had some changes and has auto_select_gpus now. Since this GPU config is creating a lot of confusion, I'll try to simplify it in the next release.

@manujosephv thanks for responding! You're right, it is actually using the GPU, just not all of it. Sorry for the fuss.

@Sunishchal @rafaljanwojcik This should be fixed now in the develop branch. I have changed the way we use the

If you turn off

I am not able to publish to PyPI because of my CI/CD pipeline, which is currently on travis-ci.org. I need to migrate to travis-ci.com or GitHub Actions.

Fixed in v0.6, which is now on PyPI. Can you check and report back?

Closing the issue. Feel free to reopen if it still persists.

I am unable to use GPUs whether I set the gpus parameter to 0 or 1. I think the issue may lie in an internal call to the distributed.py script. As the warning states, the --gpus flag does not seem to be passed.
