Once "context deadline exceeded" error happened, need to restart service. #21641

Comments

|

Hello @shawn111 - a "context deadline exceeded" error means that a database query took longer than the query timeout set by the application. I'd suggest checking the database logs for any issues. But as you mentioned above, the db does not appear to have any issues. I think I'd need some more information about your setup to be able to say more.

Let me tag @anx-ag who's had some experience debugging these issues before. Alexander, do you know what could be the issue here? |
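For readers unfamiliar with the error text: "context deadline exceeded" is the message of Go's `context.DeadlineExceeded` error. The following is a minimal sketch of how a per-query timeout produces it, not Mattermost's actual store code. `fakeQuery` and `queryWithTimeout` are hypothetical names, and the "query" is simulated with a timer instead of a real database.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// fakeQuery stands in for a database call (hypothetical helper). It "runs"
// for the given duration unless the context expires or is cancelled first.
func fakeQuery(ctx context.Context, runtime time.Duration) error {
	select {
	case <-time.After(runtime):
		return nil // the query finished in time
	case <-ctx.Done():
		return ctx.Err() // context.DeadlineExceeded once the timeout has passed
	}
}

// queryWithTimeout gives each call its own deadline, similar to how an
// application applies a per-query timeout (assumed behaviour, for illustration).
func queryWithTimeout(runtime, timeout time.Duration) error {
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	return fakeQuery(ctx, runtime)
}

func main() {
	// A 200ms "query" with a 20ms timeout fails with the error from the logs.
	err := queryWithTimeout(200*time.Millisecond, 20*time.Millisecond)
	fmt.Println(err)                                      // context deadline exceeded
	fmt.Println(errors.Is(err, context.DeadlineExceeded)) // true

	// A fast query under a generous timeout is unaffected by the earlier failure.
	fmt.Println(queryWithTimeout(5*time.Millisecond, 200*time.Millisecond))
}
```

Because every call gets a fresh context here, one timeout does not by itself poison later queries. That is why a persistent failure points at something outside the single query, such as the pool or the network.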

|

If it happens irregularly, or only when the system has been running for some days, there might be an autovacuum blocking things or reducing performance so that the other queries cannot finish. It's also possible that the application's connection pools fill up because of that, or that you reach max connections on your database server, so the application stops working for new queries while the older ones are timing out. In addition to what @agnivade requested, can you please also share the output of the following query, taken while you're experiencing the problem? It would be very helpful: `select * from pg_stat_activity;` |
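The pool-exhaustion scenario described above can be sketched with a toy pool. This is only an illustration under stated assumptions: the `pool` type, `newPool`, `acquire`, and `release` are hypothetical and do not reflect how `database/sql` or Mattermost implement pooling. A buffered channel plays the role of the maximum number of open connections.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// pool is a toy connection pool (hypothetical). The channel capacity is the
// maximum number of connections that can be checked out at once.
type pool struct {
	slots chan struct{}
}

func newPool(maxConns int) *pool {
	return &pool{slots: make(chan struct{}, maxConns)}
}

// acquire waits up to timeout for a free connection slot.
func (p *pool) acquire(timeout time.Duration) error {
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	select {
	case p.slots <- struct{}{}:
		return nil // got a connection
	case <-ctx.Done():
		return ctx.Err() // gave up waiting: context deadline exceeded
	}
}

// release returns a previously acquired slot to the pool.
func (p *pool) release() { <-p.slots }

func main() {
	p := newPool(2)

	// Two stuck queries hold every connection in the pool.
	_ = p.acquire(time.Second)
	_ = p.acquire(time.Second)

	// A new query now fails while waiting for a connection, without ever
	// reaching the database.
	fmt.Println(p.acquire(20 * time.Millisecond))

	// Once one stuck query finishes, new queries work again.
	p.release()
	fmt.Println(p.acquire(20 * time.Millisecond))
}
```

In this sketch the application recovers as soon as a slot is released. If the real service never recovers without a restart, that suggests the held connections are not being returned at all, which is what the `pg_stat_activity` output would help confirm.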

|

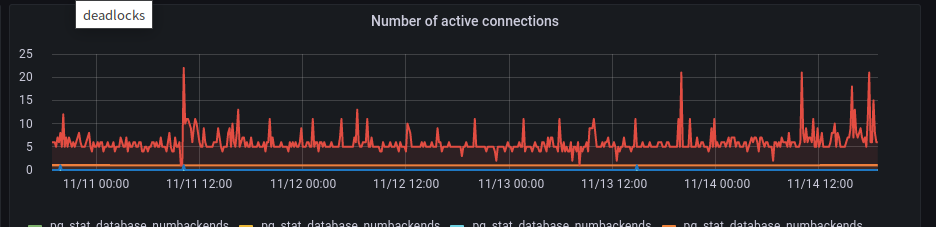

@agnivade Yes. At the beginning I also thought this issue was caused by database load.

{"timestamp":"2022-11-13 09:14:44.587 +08:00","level":"error","msg":"GetChannelMembersForUserWithPagination: Unable to get the channel members., failed to find ChannelMembers data with and userId=1gigwsncqibwdp5euyp64xy3fw: context deadline exceeded","caller":"app/plugin_api.go:940","plugin_id":"com.mattermost.calls","origin":"main.(*Plugin).handleGetAllChannels api.go:129"}

In order to fix it, we restarted the mattermost service around 9:16.

@anx-ag, here is the info for the latest 4 days. About my environment: mattermost runs directly on the host server, but postgres has already been moved into a k8s environment. That might be the root cause of "context deadline exceeded", but even when this issue happens, the service should recover after a moment. |

@agnivade It seems it might be caused by my settings. |

|

Is the zhparser extension enabled in the mattermost database? I must admit I was unable to follow the documentation on the GitHub page properly, but the hourly peaks are most likely not caused by this extension. If you want to find out which queries are behind them, you could enable query logging a few minutes before a peak and disable it again afterwards. That would tell us what causes these spikes, but they're most likely unrelated to your problem. Also, did you find time to run the query I sent you, and if so, what was the result? Please also send the requested configuration settings from your config.json. |

|

@anx-ag It might be a network issue between my pg server and mattermost server. Ping time is not stable. Database load is still far from reaching the limit. However, it shows that once "context deadline exceeded" happens, all db connections are broken. |

|

In order to debug this further I would really need the requested outputs and answers to my questions. Do you need help with running those queries, or should I rephrase the questions if you have trouble understanding them? Just let me know. |

|

Got it. I think it is quite clear that in my environment a network issue is causing this problem. I think we can close this issue? |

Summary

When the error happens, the mattermost service cannot be accessed.

It redirects to the login page and shows "Token Error", like https://forum.gitlab.com/t/mattermost-token-error/65279/2.

According to mattermost.log, once this error happens, all db connections are broken.

Nov 11 09:41:13 messaging-server mattermost: {"timestamp":"2022-11-11 09:41:13.085 +08:00","level":"error","msg":"Failed to list plugin key values","caller":"app/plugin_key_value_store.go:167","page":0,"perPage":1000,"error":"failed to get PluginKeyValues with pluginId=playbooks: context deadline exceeded"}

Not sure whether it is related to jackc/pgx#1313.

Bug report in one concise sentence

Steps to reproduce

Mattermost Version: 7.1.3

Database Schema Version: 89

Database: postgres (13.8)

A complete restart of the system solves the problem, but it comes back every 4-5 days.

We checked db / memory / network status and could not find the problem.

Expected behavior

Even if the error happens, it should break only that query, not all queries after it.

Observed behavior (that appears unintentional)

Once the "context deadline exceeded" error happens, the service needs to be restarted.

We restarted mattermost at 10:05, and no such error has happened since.

Possible fixes

Should we upgrade pgx to fix it?

jackc/pgx#1313
