
Pretrain Model #1

Comments

Hi, we use Deformable DETR (R50 + iterative bounding box refinement). You should download this model: https://drive.google.com/file/d/1JYKyRYzUH7uo9eVfDaVCiaIGZb5YTCuI/view

Hello, I have tried the pretrained model above, but I get the following error:

After ignoring the model parameter size mismatch, I get another error about a size mismatch in the optimizer. How did you solve it? Could you give any suggestions? Thanks.
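One common way to "ignore" a parameter size mismatch is to drop every checkpoint entry whose shape differs from the model's before calling `load_state_dict(..., strict=False)`. Below is a minimal sketch, assuming the checkpoint stores its weights under a `'model'` key (as Deformable DETR checkpoints do); `filter_matching_params` is a hypothetical helper, not part of MOTR. It also returns the skipped names so you can see exactly which layers did not match.

```python
from typing import Any, Dict, List, Mapping, Tuple


def filter_matching_params(
    ckpt_state: Mapping[str, Any],
    model_state: Mapping[str, Any],
) -> Tuple[Dict[str, Any], List[str]]:
    """Keep only checkpoint entries whose name and shape match the model.

    Works with any tensor-like values exposing a `.shape` attribute
    (torch.Tensor, numpy.ndarray, ...). Returns the filtered state dict
    and the names that were skipped, so the mismatch stays visible.
    """
    kept: Dict[str, Any] = {}
    skipped: List[str] = []
    for name, value in ckpt_state.items():
        target = model_state.get(name)
        if target is not None and tuple(value.shape) == tuple(target.shape):
            kept[name] = value
        else:
            # Either the key does not exist in the model or its shape differs
            # (e.g. a classification head trained for a different class count).
            skipped.append(name)
    return kept, skipped


# Hypothetical usage with PyTorch and an already-built MOTR `model`:
# checkpoint = torch.load(PRETRAIN_PATH, map_location="cpu")
# kept, skipped = filter_matching_params(checkpoint["model"], model.state_dict())
# model.load_state_dict(kept, strict=False)
# print("skipped:", skipped)
```

This only fixes the model weights. If the same checkpoint is also used to restore the optimizer, that step can still fail with its own size mismatch.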

Did you modify any code? Or could you paste the .sh command here?

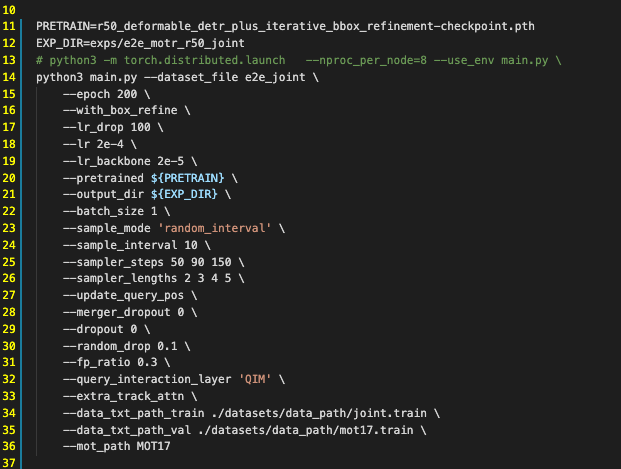

PRETRAIN in r50_motr_train.sh is set to r50_deformable_detr_plus_iterative_bbox_refinement-checkpoint.pth (the link you provided above) because I couldn't find coco_model_final.pth.


@dbofseuofhust @zzzz94 This error may be caused by the '--resume {PRETRAIN}' option in the training script. Just remove it.
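Here is a hedged sketch of why dropping `--resume` helps. In Deformable DETR-style training code, resuming restores the optimizer and LR-scheduler state saved in the checkpoint along with the weights. PyTorch's `Optimizer.load_state_dict` rejects state whose parameter groups do not match the optimizer it is loaded into. The sketch assumes the checkpoint is a dict with `'model'`, `'optimizer'`, `'lr_scheduler'` and `'epoch'` entries, the layout Deformable DETR's `main.py` saves. `split_checkpoint` is a hypothetical helper, not a MOTR function.

```python
from typing import Any, Dict, Mapping, Optional


def split_checkpoint(
    checkpoint: Mapping[str, Any], resume: bool
) -> Dict[str, Optional[Any]]:
    """Decide which parts of a checkpoint to restore.

    Initialising from pretrained weights (resume=False) uses only the model
    weights, so the freshly built optimizer is never overwritten by state
    saved for a different parameter set. Resuming an interrupted run of the
    *same* model (resume=True) also restores optimizer, scheduler and epoch.
    """
    parts: Dict[str, Optional[Any]] = {"model": checkpoint["model"]}
    if resume:
        parts["optimizer"] = checkpoint.get("optimizer")
        parts["lr_scheduler"] = checkpoint.get("lr_scheduler")
        # Continue from the epoch after the one that was saved.
        parts["start_epoch"] = checkpoint.get("epoch", -1) + 1
    return parts
```

With `resume=False`, the caller would pass `parts["model"]` to `model.load_state_dict(..., strict=False)` and keep its own newly constructed optimizer. This matches the advice to pass the Deformable DETR checkpoint as pretrained weights only.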

Thanks, I'll try it.

The PyTorch version is mismatched; you need torch==1.5.1 and torchvision==0.6.1.
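To check whether an environment matches these pins before training, you can compare the installed distributions against them. Below is a minimal sketch using the standard library's `importlib.metadata` (Python 3.8+). `REQUIRED` just restates the two versions from this comment, and `find_version_mismatches` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict, List

# Pins suggested in this thread.
REQUIRED: Dict[str, str] = {"torch": "1.5.1", "torchvision": "0.6.1"}


def find_version_mismatches(
    required: Dict[str, str],
    get_version: Callable[[str], str] = version,
) -> List[str]:
    """Return one message per package that is missing or whose installed
    version differs from the pinned one.

    Local build suffixes such as '+cu101' are ignored when comparing.
    """
    problems: List[str] = []
    for name, wanted in required.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed (need {wanted})")
            continue
        if installed.split("+")[0] != wanted:
            problems.append(f"{name}: found {installed}, need {wanted}")
    return problems


if __name__ == "__main__":
    for line in find_version_mismatches(REQUIRED) or ["versions OK"]:
        print(line)
```

The `get_version` parameter only exists so the check can be exercised without torch installed. In normal use the default `importlib.metadata.version` is used.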


I am running into the same problem. All I did was uncomment the command in configs/r50_motr_train.sh and replace $PRETRAIN with the checkpoint you suggested above (https://drive.google.com/file/d/1JYKyRYzUH7uo9eVfDaVCiaIGZb5YTCuI/view). @dbofseuofhust Do you have any idea about this? Does anything in your README guidelines need updating?

Could you please specify which version of the pretrained model to use? I downloaded r50_deformable_detr-checkpoint.pth, but there is an error when loading the state_dict for MOTR.
