This repository has been archived by the owner on Dec 1, 2023. It is now read-only.

This issue was moved to a discussion.


Try passing into scipy.optimize.differential_evolution #42


The idea here is to take a SciPy function like `scipy.optimize.differential_evolution` and be able to execute it on different hardware. If execution is still immediate (eager), we could possibly do this by passing in a CuPy array or something similar.
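To make the eager route concrete, here is a minimal sketch. It assumes the objective and one differential evolution generation are written against a generic array module `xp` instead of hard-coding NumPy. `de_step` and `sphere` are hypothetical helpers for illustration, not SciPy's internal implementation. Passing `cupy` as `xp` is an untested assumption that relies on CuPy mirroring the NumPy calls used here.

```python
import numpy as np


def sphere(pop, xp):
    # Vectorized test objective: one value per row of the population.
    return xp.sum(pop ** 2, axis=1)


def de_step(func, pop, fitness, bounds, rng, xp, mutation=0.8, recombination=0.7):
    """One 'rand/1/bin' differential evolution generation (hypothetical helper).

    Array math goes through `xp`; random draws come from a host-side NumPy
    Generator and are moved to the array backend with xp.asarray.
    """
    n, d = pop.shape
    # Three distinct donors per member, none equal to the member itself.
    donors = np.array([
        rng.choice([j for j in range(n) if j != i], size=3, replace=False)
        for i in range(n)
    ])
    r0, r1, r2 = (xp.asarray(donors[:, k]) for k in range(3))
    mutant = pop[r0] + mutation * (pop[r1] - pop[r2])
    mutant = xp.clip(mutant, bounds[:, 0], bounds[:, 1])  # stay inside bounds

    # Binomial crossover; force at least one coordinate from the mutant.
    cross = rng.random((n, d)) < recombination
    cross[np.arange(n), rng.integers(0, d, size=n)] = True
    trial = xp.where(xp.asarray(cross), mutant, pop)

    # Greedy selection: keep the trial only if it is no worse.
    trial_fitness = func(trial, xp)
    better = trial_fitness <= fitness
    pop = xp.where(better[:, None], trial, pop)
    fitness = xp.where(better, trial_fitness, fitness)
    return pop, fitness


xp = np  # assumption: swapping in `cupy` here would run the same math on a GPU
rng = np.random.default_rng(0)
bounds = xp.asarray([[-5.0, 5.0]] * 3)
pop = xp.asarray(rng.uniform(-5.0, 5.0, size=(20, 3)))
fitness = sphere(pop, xp)
init_best = float(fitness.min())
for _ in range(200):
    pop, fitness = de_step(sphere, pop, fitness, bounds, rng, xp)
best = float(fitness.min())
```

Each operation still runs eagerly, one call at a time. Only the array type decides where the work happens.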

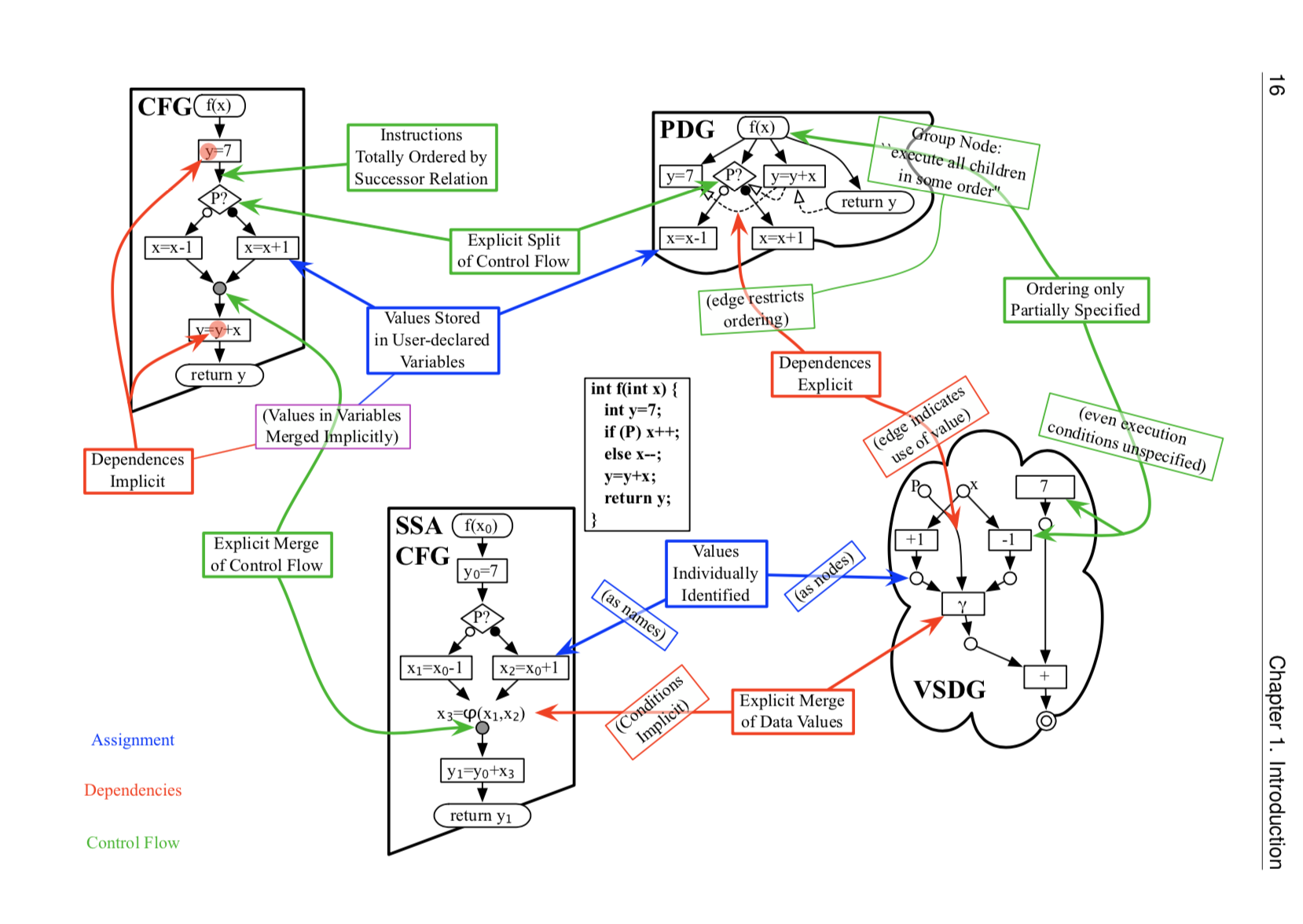

The more general case, however, is to first build up an expression graph for the computation as written, and then choose how to execute it. This would be useful if we want to explore any sort of MoA (Mathematics of Arrays) optimizations or translate to a non-eager form like TensorFlow. Basically, by not executing each operation as soon as it is called, we can apply whole-computation optimizations that are impossible in eager mode.
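A minimal sketch of the deferred approach, in plain Python, follows. Operators record graph nodes instead of computing anything. A rewrite pass then simplifies the whole graph, and only after that is an execution backend (`xp`) chosen. `Expr`, `simplify`, `evaluate` and the other names are hypothetical. The toy rewrite rules (dropping `+ 0` and `* 1`) stand in for richer optimizations and are not taken from any library.

```python
import numpy as np


class Expr:
    """Node in a lazily built expression graph (hypothetical, for illustration)."""

    def __init__(self, op, args=(), value=None):
        self.op = op            # "input", "const", "add", "mul" or "sum"
        self.args = tuple(args)
        self.value = value      # input name or constant value

    # Operators build graph nodes instead of computing anything.
    def __add__(self, other):
        return Expr("add", (self, lift(other)))

    def __radd__(self, other):
        return Expr("add", (lift(other), self))

    def __mul__(self, other):
        return Expr("mul", (self, lift(other)))

    def __rmul__(self, other):
        return Expr("mul", (lift(other), self))


def lift(x):
    # Wrap plain Python numbers as constant nodes.
    return x if isinstance(x, Expr) else Expr("const", value=x)


def total(e):
    return Expr("sum", (e,))


def is_const(e, v):
    return e.op == "const" and e.value == v


def simplify(e):
    """Rewrite the whole graph before execution: x + 0 -> x, x * 1 -> x."""
    if e.op in ("input", "const"):
        return e
    args = [simplify(a) for a in e.args]
    if e.op == "add":
        a, b = args
        if is_const(a, 0):
            return b
        if is_const(b, 0):
            return a
    if e.op == "mul":
        a, b = args
        if is_const(a, 1):
            return b
        if is_const(b, 1):
            return a
    return Expr(e.op, args)


def evaluate(e, env, xp):
    """Execute the graph with a chosen array backend `xp` (e.g. numpy)."""
    if e.op == "input":
        return xp.asarray(env[e.value])
    if e.op == "const":
        return e.value
    vals = [evaluate(a, env, xp) for a in e.args]
    if e.op == "add":
        return vals[0] + vals[1]
    if e.op == "mul":
        return vals[0] * vals[1]
    return xp.sum(vals[0])  # "sum"


def count(e):
    # Number of nodes visited when walking the graph.
    return 1 + sum(count(a) for a in e.args)


x = Expr("input", value="x")
graph = total((x + 0) * (x * 1))   # nothing is computed yet
optimized = simplify(graph)        # sum(x * x)
env = {"x": [1.0, 2.0, 3.0]}
result = evaluate(optimized, env, np)
```

Because the graph exists before anything runs, the same `optimized` graph could in principle be handed to a different backend or translated to another system without changing the code that built it.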

For example, this is what Numba does: it takes a whole function and compiles it as a unit, which can give much better performance than executing each operation separately.
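For comparison, here is a minimal sketch of Numba's whole-function approach. `numba.njit` compiles the entire Python function, loops included, rather than dispatching one NumPy operation at a time. The `sphere_population` objective is a hypothetical example. The fallback `njit` defined when Numba is missing is an assumption added only so the sketch runs without Numba installed, and it provides no speedup.

```python
import numpy as np

try:
    from numba import njit  # compiles the whole decorated function
except ImportError:
    # Assumed fallback: run as plain Python if Numba is not installed.
    def njit(func):
        return func


@njit
def sphere_population(pop):
    # Explicit loops: under Numba the entire function is compiled, so these
    # loops avoid per-element interpreter overhead.
    n, d = pop.shape
    out = np.empty(n)
    for i in range(n):
        acc = 0.0
        for j in range(d):
            acc += pop[i, j] * pop[i, j]
        out[i] = acc
    return out


pop = np.arange(6.0).reshape(2, 3)
values = sphere_population(pop)  # rows [0, 1, 2] and [3, 4, 5]
```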

https://github.com/scipy/scipy/blob/v1.2.1/scipy/optimize/_differentialevolution.py#L649
