
Results on natural language code retrieval #91


So it's because of the different data used for pre-training, right?


Both of them use the same model architecture as RoBERTa and the same pre-training data. But


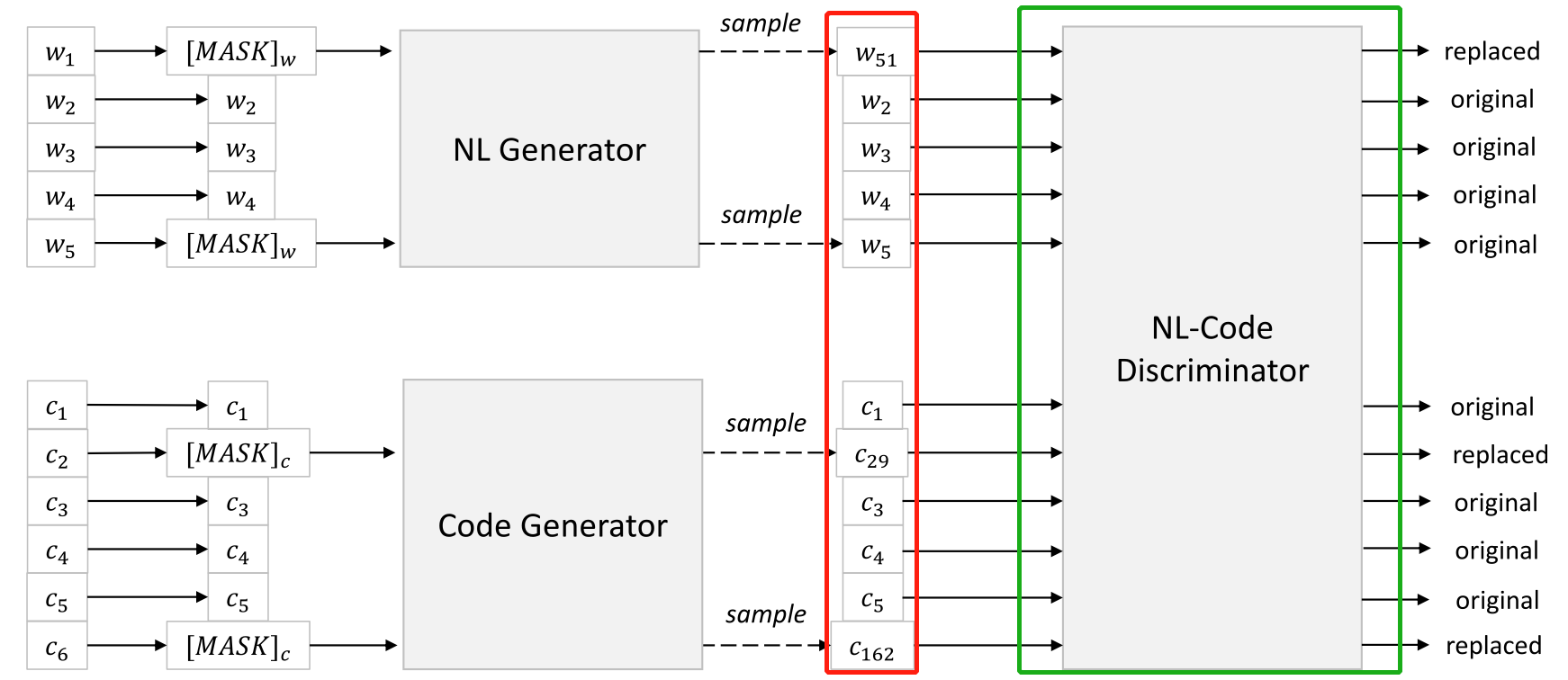

We first learn two generators separately, each on the corresponding unimodal data, to generate plausible alternatives for the set of randomly masked positions. Specifically, we implement two n-gram language models with bidirectional contexts. The NL-Code discriminator is the targeted pre-trained model; both the NL and code generators are thrown out in the fine-tuning step.
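To make the generator/discriminator split concrete, here is a minimal Python sketch of how RTD training data could be produced under stated assumptions. `BidirectionalTrigramGenerator` and `corrupt` are hypothetical names, not CodeBERT code: the toy generator predicts a token only from its immediate left and right neighbors, a stand-in for the bidirectional n-gram models described above. Following the ELECTRA convention (an assumption here), a position whose sampled token happens to equal the original is labeled as original.

```python
import random
from collections import Counter, defaultdict


class BidirectionalTrigramGenerator:
    """Toy generator (hypothetical): samples w_i given its neighbors (w_{i-1}, w_{i+1})."""

    def __init__(self, corpus):
        self.context_counts = defaultdict(Counter)  # (left, right) -> Counter of middle tokens
        self.unigram_counts = Counter()              # fallback for unseen contexts
        for sentence in corpus:
            padded = ["<s>"] + sentence + ["</s>"]
            for i in range(1, len(padded) - 1):
                self.context_counts[(padded[i - 1], padded[i + 1])][padded[i]] += 1
                self.unigram_counts[padded[i]] += 1

    def sample(self, left, right, rng):
        # .get() avoids inserting empty entries into the defaultdict.
        dist = self.context_counts.get((left, right)) or self.unigram_counts
        words, weights = zip(*dist.items())
        return rng.choices(words, weights=weights, k=1)[0]


def corrupt(tokens, generator, mask_prob, rng):
    """Replace randomly selected positions with generator samples.

    Returns (corrupted_tokens, labels) with label 1 = original, 0 = replaced.
    """
    padded = ["<s>"] + tokens + ["</s>"]  # context comes from the original tokens
    corrupted, labels = [], []
    for i, original in enumerate(tokens, start=1):
        if rng.random() < mask_prob:
            new_token = generator.sample(padded[i - 1], padded[i + 1], rng)
        else:
            new_token = original
        corrupted.append(new_token)
        labels.append(1 if new_token == original else 0)
    return corrupted, labels


# Two generators, each trained only on its own unimodal corpus.
nl_gen = BidirectionalTrigramGenerator([["add", "two", "numbers"], ["subtract", "two", "numbers"]])
code_gen = BidirectionalTrigramGenerator([["return", "a", "+", "b"], ["return", "a", "-", "b"]])

rng = random.Random(0)
nl_corrupted, nl_labels = corrupt(["add", "two", "numbers"], nl_gen, 0.5, rng)
code_corrupted, code_labels = corrupt(["return", "a", "+", "b"], code_gen, 0.5, rng)
# A discriminator would read nl_corrupted + code_corrupted and predict nl_labels + code_labels.
print(nl_corrupted + code_corrupted, nl_labels + code_labels)
```

Because the generators only produce `corrupted` and `labels`, they can be trained once and then kept fixed; only the discriminator that consumes these pairs would be carried into fine-tuning.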

Thanks for your reply! I understand that the discriminator is the targeted pre-trained model and that both the NL and code generators are thrown out in the fine-tuning step. But I don't understand the RTD pre-training process.

So, are the generators and the discriminator trained separately? That is, do you first train the two generators and then fix them while training the discriminator?

What is the role of the discriminator in pre-training? What is the relationship between the generic representation produced by the discriminator in the fine-tuning phase and the representation produced by the MLM output layer? And how does it relate to the [CLS] representation used when fine-tuning for code search?


Yes, you are right.

We use a multi-layer Transformer as the model architecture of CodeBERT. RTD and MLM are the two objectives used to train CodeBERT.
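Once the discriminator has scored every token of a corrupted input, RTD reduces to per-token binary classification. The sketch below writes it as the usual binary cross-entropy (the form ELECTRA uses), next to a standard MLM negative log-likelihood, and combines the two with a plain weighted sum. The function names and the `rtd_weight` parameter are hypothetical; whether CodeBERT weights the two terms exactly this way is an assumption, not something stated in this thread. Setting `rtd_weight=0.0` leaves an MLM-only objective.

```python
import math


def rtd_loss(p_original, labels, eps=1e-12):
    """Mean binary cross-entropy for replaced token detection.

    p_original[i]: discriminator's probability that token i is original.
    labels[i]:     1 if token i is original, 0 if it was replaced.
    """
    if len(p_original) != len(labels) or not labels:
        raise ValueError("need one probability per label, and at least one token")
    total = 0.0
    for p, y in zip(p_original, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def mlm_loss(p_true_token):
    """Mean negative log-likelihood of the true token at each masked position."""
    if not p_true_token:
        raise ValueError("need at least one masked position")
    return -sum(math.log(p) for p in p_true_token) / len(p_true_token)


def combined_loss(mlm, rtd, rtd_weight=1.0):
    """Weighted sum of the two objectives (the weighting is an assumption)."""
    return mlm + rtd_weight * rtd


print(rtd_loss([0.5, 0.5], [1, 0]))  # ≈ 0.693 (= ln 2)
print(combined_loss(mlm_loss([0.25]), rtd_loss([0.9, 0.2], [1, 0])))
```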

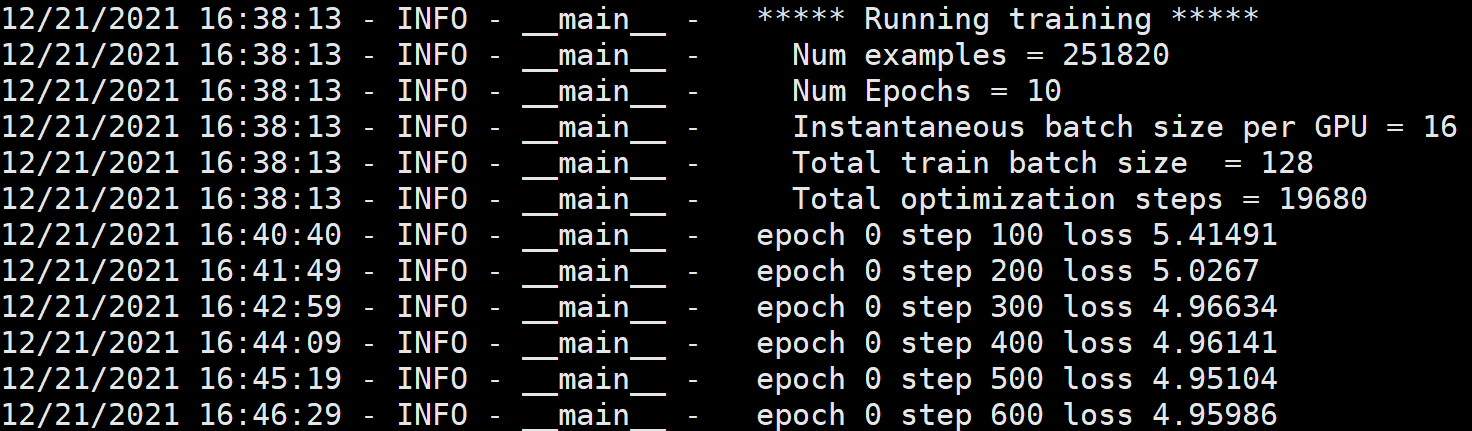

We only use the training data of the fine-tuning stage for pre-training.

Thanks for your reply so quickly!


Yes, you are right.

Hi, I have pre-trained RoBERTa only with code from scratch (PT W/ CODE ONLY (INIT=R)). When I fine-tune it using the script in `Siamese-model\README.md`:

Is CodeBERT's pre-training data all NL-PL bimodal data? Do you use unimodal data?


We pre-train CodeBERT with both bimodal data and unimodal data. We only use NL-PL bimodal data to fine-tune and evaluate the model for code search.
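On the fine-tuning side, here is a minimal sketch of turning NL-PL bimodal pairs into labeled examples. It assumes code search is framed as binary classification over a concatenated pair in the `[CLS] NL [SEP] code [EOS]` layout of the CodeBERT paper, with the `[CLS]` representation feeding a classifier. The names `make_input` and `build_examples`, and the scheme of pairing each query with one randomly drawn other snippet as its negative, are hypothetical illustrations, not the repository's actual preprocessing.

```python
import random


def make_input(nl_tokens, code_tokens):
    """Concatenate one NL-PL pair as [CLS] NL [SEP] code [EOS]."""
    return ["[CLS]"] + nl_tokens + ["[SEP]"] + code_tokens + ["[EOS]"]


def build_examples(bimodal_pairs, rng):
    """Turn (nl, code) pairs into (tokens, label) examples.

    Label 1: a true NL-PL pair. Label 0: the same query with another pair's code
    (hypothetical negative-sampling scheme, one negative per positive).
    """
    examples = []
    n = len(bimodal_pairs)
    for i, (nl, code) in enumerate(bimodal_pairs):
        examples.append((make_input(nl, code), 1))
        if n > 1:
            j = rng.randrange(n - 1)
            if j >= i:
                j += 1  # shift past index i so the true pair is never drawn
            examples.append((make_input(nl, bimodal_pairs[j][1]), 0))
    return examples


pairs = [
    (["add", "two", "numbers"], ["return", "a", "+", "b"]),
    (["negate", "a", "number"], ["return", "-", "x"]),
    (["read", "a", "file"], ["open", "(", "path", ")"]),
]
for tokens, label in build_examples(pairs, random.Random(0)):
    print(label, " ".join(tokens))
```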

Why is the MA-AVG of CODEBERT (MLM, INIT=R) about 3% higher than that of PT W/ CODE ONLY (INIT=R)? Is it because their network structures are different?

But as described in the paper, they use the same network architecture and the same objective function (MLM):

Is it because they use different pre-training data?

As described in the paper:
