
Controller Mapping not working when building for Quest 2 using MRTK with PC/Standalone as target platform #10785

Comments


Also, after adding the Oculus Integration package, all the buttons seem to work correctly except the grip button. I have mapped the Grip button action of the Meta Quest 2 controller to the "Axis1D.PrimaryHandTriggerPress" event, as can be seen in the picture above, but pressing the button does not invoke the respective input action.


One more thing: although the Axis Constraint of the Grip Button action is defined as Single Axis rather than Digital, pressing the grip button still invokes IMixedRealityInputHandler.


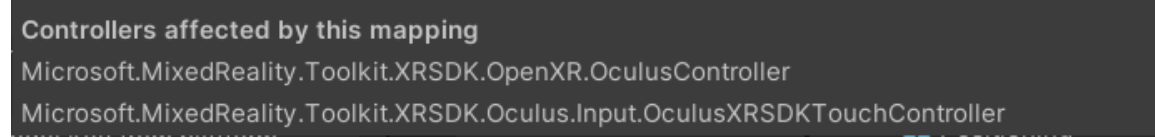

Hey there, we're trying to better understand the root cause of the issue. Can you narrow down which kind of controller is being used in the project? The Oculus controller mapping affects both of these kinds of controllers, but we're unsure which controller mapping may be causing this bug. Just so we understand the issue in full: is the physical grip button on the controller not being recognized, or is it being recognized but not raising the appropriate input action?


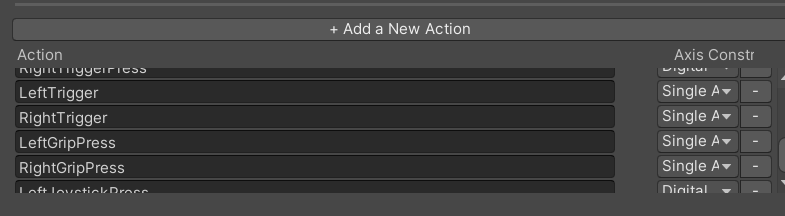

Hi @RogPodge, thanks for getting back to me. We are using Oculus.Input.XRSDKTouchController in our project. For example, in the following screenshot, both the LeftTrigger/RightTrigger input actions and the LeftGripPress/RightGripPress input actions have SingleAxis as their Axis Constraint. The LeftTrigger/RightTrigger actions raise the OnInputChanged event (CORRECTLY), whereas the LeftGripPress/RightGripPress actions raise the OnInputDown/OnInputUp events (NOT THE CORRECT BEHAVIOR).
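
For anyone trying to reproduce the difference described above, a small logging script along these lines may help show which callbacks an action actually raises. This is only a sketch for MRTK 2: the class name `InputEventLogger` is hypothetical, and it assumes the component is attached to any active GameObject in a scene with a configured MixedRealityToolkit object. It registers as a global handler so that it receives events regardless of focus.

```csharp
using Microsoft.MixedReality.Toolkit;
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical helper (not part of MRTK): logs which input callbacks fire
// so that digital events (OnInputDown/OnInputUp) can be told apart from
// single-axis events (OnInputChanged with a float value).
public class InputEventLogger : MonoBehaviour,
    IMixedRealityInputHandler,
    IMixedRealityInputHandler<float>
{
    private void OnEnable()
    {
        // Register globally so events arrive even when this object has no focus.
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler>(this);
        CoreServices.InputSystem?.RegisterHandler<IMixedRealityInputHandler<float>>(this);
    }

    private void OnDisable()
    {
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler>(this);
        CoreServices.InputSystem?.UnregisterHandler<IMixedRealityInputHandler<float>>(this);
    }

    // Raised for press-style (digital) input.
    public void OnInputDown(InputEventData eventData)
    {
        Debug.Log($"OnInputDown: {eventData.MixedRealityInputAction.Description} ({eventData.Handedness})");
    }

    public void OnInputUp(InputEventData eventData)
    {
        Debug.Log($"OnInputUp: {eventData.MixedRealityInputAction.Description} ({eventData.Handedness})");
    }

    // Raised for single-axis input; InputData carries the float axis value.
    public void OnInputChanged(InputEventData<float> eventData)
    {
        Debug.Log($"OnInputChanged: {eventData.MixedRealityInputAction.Description} = {eventData.InputData}");
    }
}
```

With this attached, pressing the trigger and then the grip should make it visible in the Unity console whether each mapped action arrives as a float change or as a down/up pair.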


Mistakenly closed the issue, sorry :x


Hey there. Sorry for the late response. I noticed in your initial query that you weren't using the Oculus Integration package. Does that mean you are using the OpenXR backend instead? You should be using one or the other. If you decide to go with OpenXR, I suggest you try MRTK3, which is in preview. It simplifies a lot of the input stack and uses Unity's built-in input action system rather than our own self-defined one. In addition, the MixedRealityInteractionTool scene is a good resource to help you debug. It can be found at Assets/MRTK/Tools/RuntimeTools/Tools/MixedRealityInteractionTool within the main project, or at RuntimeTools/Tools in the Microsoft.MixedReality.Toolkit.Unity.Tools package.


Closing this issue as it seems to have gone stale.

Describe the bug

We are rebuilding our HoloLens app for Quest 2 and want to keep using the MRTK package. Right now we don't have the Oculus Integration package in our project. Apart from Input Actions, our app works as desired without the Oculus Integration package. We have mapped Input Actions to controller buttons in the Controller Mapping section of the MixedRealityToolkit GameObject in the scene, but pressing a button does not perform the desired action. When I tested in a new project with the Oculus Integration package added, it worked correctly.

My question is: Is it possible to map the controller buttons to input actions without adding the Oculus Integration package?

