New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

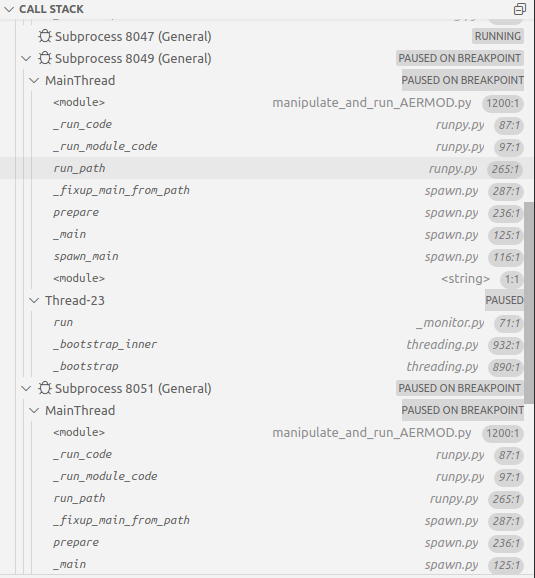

Why are multiple debugging threads being started in integrated Python-debugging of VS Code? #349

Comments

The debugger does spawn background threads; that's an unavoidable part of the implementation. However, you wouldn't see those threads in the Call Stack window. They are also not running your code, so "executing the same code several times simultaneously" does not apply. The same goes for subprocesses.

This behavior doesn't reproduce for me on any simple app, so we'll need some more information to reproduce it. Most likely, this has more to do with the code being debugged, or with the libraries that it uses.

In addition, I'm curious as to what led you to conclude that those threads execute the same code. Did you try pausing and inspecting the call stacks in other threads to see what code is running there? If not, doing that might shed some light on what's spawning the threads.
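For anyone who wants to do that inspection from code rather than from the Call Stack window, here is a minimal sketch. It is not part of the debugger; the helper name `dump_thread_stacks` is made up for illustration, and it relies on `sys._current_frames()`, which is a CPython-specific function.

```python
import sys
import threading
import traceback


def dump_thread_stacks():
    """Return {thread name: formatted call stack} for every live Python thread.

    Hypothetical helper for illustration only.
    """
    frames = sys._current_frames()  # maps thread ident -> current frame
    report = {}
    for thread in threading.enumerate():
        frame = frames.get(thread.ident)
        if frame is None:
            # The thread finished between the two calls above.
            continue
        report[thread.name] = "".join(traceback.format_stack(frame))
    return report


# Print where each thread currently is.
for name, stack in dump_thread_stacks().items():
    print(f"--- {name} ---")
    print(stack)
```

Calling this at a point where the extra threads are already alive should show which module each of them is executing.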

@AndreasLuckert I believe this issue is in your own code or in some library you're using to accelerate your code with multiple processes; the debugger is just tracking them as it should. For the threads, it seems they're started in a If you share the logs you have from running I may be able to give you more insight. i.e.:

As far as I know, I don't have a file called I copied these files from my Thanks in advance for analyzing these files.

It seems you (or some library you have) are using the i.e. the stack with full paths is: I also see many One trick to evaluate where threads are being created could be adding: in For the multiprocessing, I think you could do something analogous, but I'm not sure of the proper place in this case; maybe Anyway, this doesn't seem to be an issue in the debugger, so I'm closing this issue.
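The exact snippet suggested in the comment above did not survive in this copy of the thread. One possible way to find out where threads are being created, offered here only as an assumption about what was meant, is to wrap `threading.Thread.start` so that every call records the stack of the caller. The names `creation_stacks` and `_traced_start` are hypothetical.

```python
import threading
import traceback

# Keep a reference to the real method so it can still be called (and restored).
_original_start = threading.Thread.start

# Hypothetical log: one (thread name, formatted stack) entry per started thread.
creation_stacks = []


def _traced_start(self, *args, **kwargs):
    # Record who asked for this thread before actually starting it.
    creation_stacks.append((self.name, "".join(traceback.format_stack())))
    return _original_start(self, *args, **kwargs)


# Monkeypatch for diagnosis only; remove it once the culprit is found.
threading.Thread.start = _traced_start


def demo():
    worker = threading.Thread(target=lambda: None, name="demo-worker")
    worker.start()
    worker.join()


demo()
for name, stack in creation_stacks:
    print(f"Thread {name!r} was started from:")
    print(stack)
```

Installing the patch at the very top of the entry-point script, before other imports, gives it the best chance of catching threads started by libraries at import time.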

Also, something to double-check in your code is whether the main entry point is protected by the and depending on the structure, you could also need the i.e.: (if you don't have that structure, it may explain why multiprocessing is executing your main entry point code multiple times).
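The code in the comment above was lost in this copy, so the following is only a sketch of the structure it most likely describes: the standard `if __name__ == "__main__":` guard, plus `multiprocessing.freeze_support()`. Both are assumptions about what was originally written. The functions `square`, `report`, and `main` are hypothetical placeholders.

```python
import multiprocessing


def square(n):
    # Hypothetical worker logic; defined at module level so child processes can find it.
    return n * n


def report(n):
    print(f"{n} squared is {square(n)}")


def main():
    # Everything that used to be loose top-level code belongs in here,
    # so that importing this file has no side effects.
    workers = [multiprocessing.Process(target=report, args=(n,)) for n in range(3)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()


if __name__ == "__main__":
    # Assumption: this is the second call the comment refers to. It is meant for
    # frozen executables and is harmless in a normally run script.
    multiprocessing.freeze_support()
    main()
```

With this layout, a child process that re-imports the file only gets the function definitions; `main()` runs in the parent alone.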

I have neither the first nor the second option implemented, and I've just realized that this could happen with any script I execute. Do you recommend that I put in the beginning of all the Python scripts that I execute as the main script, i.e. my entry-point or top-level script?

I'd be glad to learn whether putting the above-mentioned lines of code into my main scripts enables me to avoid the multi-threading during my debugging sessions.

It should generally be at the end of your script, and all top-level code that's not already in a function should be inside

Alright, I didn't know that even the top-level/main script is going to be imported as a module, possibly several times, during a debugging process involving multiprocessing. I thought I only needed to make sure that sub-level scripts don't contain code outside of functions, in order to prevent its repeated or otherwise undesired execution via e.g. Moreover, as for the recommendation to put in the end of my main-script (and possibly all the other sub-level scripts as well, which comprise code outside of functions), There, it states the following:

This in turn means that I cannot apply this to my case since, as I'd mentioned in my initial question above, my OS is:

Now I'm wondering if there is something equivalent for


The important part is calling Linux does indeed fork by default, but it can also use spawn via
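The call named at the end of the comment above is missing from this copy. As a hedged illustration of how a start method can be inspected or chosen, the sketch below uses `multiprocessing.get_all_start_methods()`, `multiprocessing.get_start_method()`, and `multiprocessing.get_context()`. The helper `describe_start_methods` is hypothetical, and no process is actually launched.

```python
import multiprocessing


def describe_start_methods():
    """Hypothetical helper: report the start methods this interpreter knows about."""
    return {
        # allow_none=True avoids fixing the default as a side effect of asking.
        "currently_set": multiprocessing.get_start_method(allow_none=True),
        # The first entry of this list is the platform default.
        "available": multiprocessing.get_all_start_methods(),
    }


# A context object exposes the same API as the multiprocessing module,
# but bound to one start method, without changing any global state.
spawn_ctx = multiprocessing.get_context("spawn")

print(describe_start_methods())
print(spawn_ctx.get_start_method())
```

The global alternative is `multiprocessing.set_start_method("spawn")`, which should be called at most once and from inside the `if __name__ == "__main__":` block. The default start method depends on the platform and the Python version, so checking it explicitly is safer than assuming it.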

Okay, thanks. So at the end of my script I will implement the aforementioned

since the line By the way, the expression

stands for

Yep! For more details, see this section in the Python docs.

Environment data

17299e413d5590b14ab0340ea477cdd86ff13daf

x64

Kernel: Linux 5.4.0-42-generic

Architecture: x86-64

[GCC 5.4.0 20160609] on linux (from linuxbrew using pip)

python.languageServer setting: "Jedi"

Expected behaviour

Debugging with a single main thread.

Actual behaviour

Multiple threads appear and slow down the process by executing the same code several times simultaneously.

Steps to reproduce:

[NOTE: Self-contained, minimal reproducing code samples are extremely helpful and will expedite addressing your issue]

For all the other details, please visit my question on StackOverflow.

I'm asking for help here as well, since there was no significant activity on my StackOverflow question. I also asked this question on the VS Code GitHub repository, where it was not answered but forwarded to other places.

Logs

Output for Python in the Output panel (View→Output, change the drop-down in the upper-right of the Output panel to Python)