New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[MusicBERT] Restriction to 1002 octuples when using preprocess.encoding_to_str

#60

Comments

|

If you need to change |

|

Hi @mlzeng .

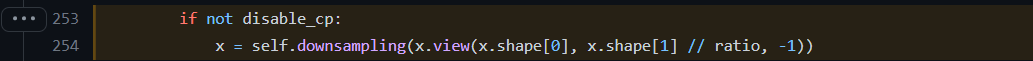

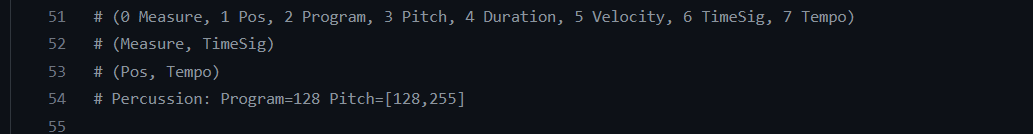

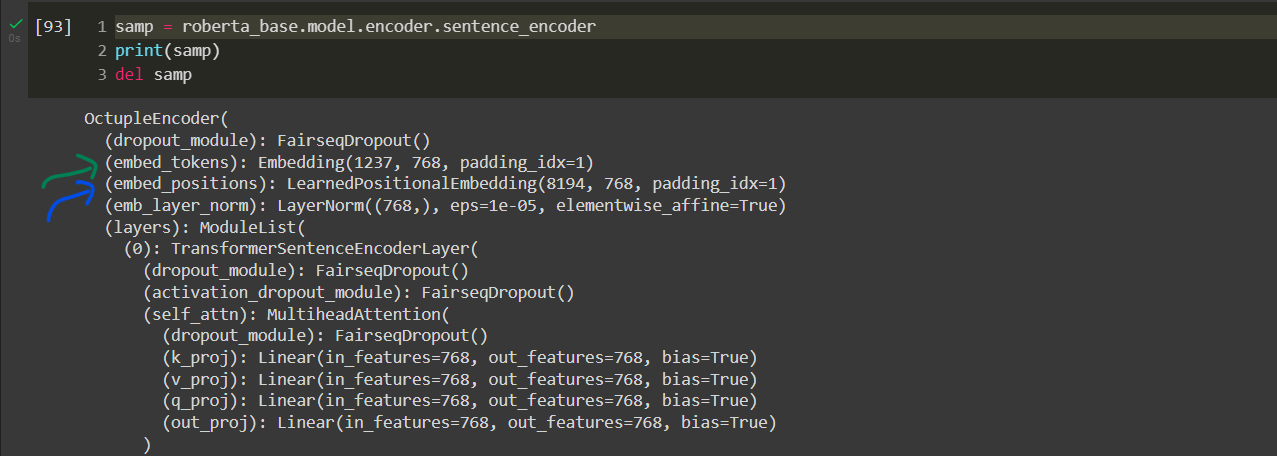

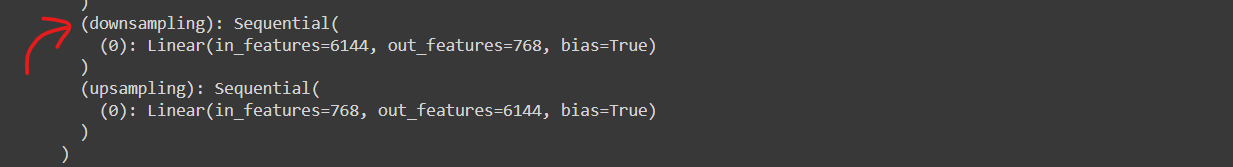

The The lines 253-254 then convert

Also see red arrow in Colab snip at end of comment for definition of The lines 257-259 then add positional embedding using only every (8*i + 1) th element of original sequence Now if we see the definition of Therefore upto 8194 octuples should be supported (could be with or without padding)! Please let me know if there is any misunderstanding in my logic, as I really need to understand this. Thanks! |

|

Your logic is pretty correct. But MusicBERT models are trained with setting Processing 8192 octuple tokens with MusicBERT is theoretically possible, but that will require 64 times more GPU memory (memory usage is quadratic proportional to sequence length), which is non-practical currently. (The original RoBERTa models are trained with sequence length = 512) |

|

I see, it is now clear! 😃 The provided MusicBERT Base also performs amazing without any further training and thanks to the team once again for making it open-source once. In |

|

We have tried pre-training using the So be careful of training a large model. We will release a large model if we can figure out the problem. |

|

Oh I see, hoping it is resolved eventually. Would the finetuned models on the genre prediction and accompaniment suggestion be released too? |

Hi once again!

While preprocessing a MIDI file, I noticed that the

MIDI_to_encodingmethod performs as intended and converts the sample song to 106 bars as seen in the snip below of the resultant octuples (please correct me if I'm wrong).However, the

encoding_to_strmethod has the result with restriction to just 18 bars (as conculsive from highlighted<0-18>near the end of the encoded string in snip below):More generally, what I have noticed in cases of multiple MIDI files is that only upto the first 1000 octuples (i.e, start token octuple + 1000 note octuples + end token octuple = (1002 * 8) = 8016 tokens) are considered:

Is there any way to change

encoding_to_strto get the whole song instead?, upto 256 bars only I mean, as model vocabulary is also restricted to 256 bars.I am not familiar enough with miditoolkit or mido to understand the code properly as of now, else I would have tried to fix this.

Thanks in advance!

Edit: I am aware that the

musicbert_basemodel can support upto 8192 octuples (i.e, final input to MusicBERT encoder) only, but that does not seem to be the issue here I think.The text was updated successfully, but these errors were encountered: