-

Notifications

You must be signed in to change notification settings - Fork 77

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

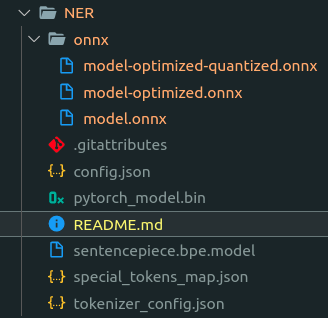

NER transformers model packed in one onnx file with Sentencepiece Tokenizer #164

Comments

|

If the model has a tokenizer as part of the onnx graph then you should be able to load the model into ONNX Runtime Java and then feed it strings. You'll need to load in the compiled custom op library before creating a session using this hook in the Java API - https://github.com/microsoft/onnxruntime/blob/master/java/src/main/java/ai/onnxruntime/OrtSession.java#L693. |

|

In Tribuo we wrote Java implementations of the tokenizers we need for ONNX Runtime rather than using the custom ops, so you could do that too. |

|

The key point is how the tokenizer was implemented. |

|

Hey, thanks a lot for the answers.

python -m transformers.convert_graph_to_onnx --framework pt --model path/to/NER/directory --quantize model.onnx

Aside the model I use the

So, if I understand your answers well, I need to load a custom op library in order to use the model with |

|

If the tokenizer hasn't been packaged into the model (and it's easy to check, if it has then the model will accept a string input, and if it hasn't it will accept 2 or 3 tensors of ints, which you can see by loading it into ORT and querying the inputs) then you'll need to tokenize the inputs yourself in your own code. I believe there is a sentencepiece JNI wrapper for the native sentencepiece library, but I haven't used it. If it has been packaged into the model then you'll need to load the custom ops library into your session options before loading the ONNX model, and it should work. Note I've not tested using the custom op tokenizers in Java, but I think there is a test for loading custom ops in general. |
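The input check described above can be sketched in Python as follows. This is a minimal sketch, assuming the `onnxruntime` Python package is installed; `has_embedded_tokenizer` and `input_types` are hypothetical helper names, and the type strings follow the format ONNX Runtime reports in `NodeArg.type` (for example `tensor(string)` or `tensor(int64)`).

```python
from typing import Dict


def has_embedded_tokenizer(types_by_input: Dict[str, str]) -> bool:
    """Return True if any model input is a string tensor.

    A model with the tokenizer packed into the graph accepts strings;
    a plain transformer export accepts 2 or 3 integer tensors instead.
    """
    return any(t == "tensor(string)" for t in types_by_input.values())


def input_types(model_path: str) -> Dict[str, str]:
    """Map each input name to its type string by loading the model in ORT.

    Note: a model that contains custom ops only loads after the custom op
    library has been registered on the session options.
    """
    import onnxruntime as ort  # lazy import: the helper above needs no dependency

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    return {node.name: node.type for node in session.get_inputs()}


# Typical input signatures, written out by hand for illustration.
plain_export = {"input_ids": "tensor(int64)", "attention_mask": "tensor(int64)"}
packed_model = {"string_input": "tensor(string)"}
print(has_embedded_tokenizer(plain_export))  # False
print(has_embedded_tokenizer(packed_model))  # True
```

With a real file, `has_embedded_tokenizer(input_types("model.onnx"))` would answer the question for that model; the file name here is only a placeholder.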

|

Ok thanks for the answer again. Is there a way to manually pack the tokenizer with the My goal is to get only one final I talked about |

|

I think you want to follow this example for putting the tokenization op into the onnx graph - https://github.com/microsoft/onnxruntime-extensions/blob/main/tutorials/gpt2_e2e.py#L27. If you want to see what it would be like to do the tokenization in Java and leave the model as is, this is how we deploy BERT in Tribuo - https://github.com/oracle/tribuo/blob/main/Interop/ONNX/src/main/java/org/tribuo/interop/onnx/extractors/BERTFeatureExtractor.java#L904. Tribuo operates on the standard output from HuggingFace Transformers' onnx converter. |

|

Thanks a lot @Craigacp for the explanations and useful links!

def get_cache_directory():
    cache_dir = os.path.join("../..", "cache_models")
    if not os.path.exists(cache_dir):
        os.makedirs(cache_dir)
    return cache_dir

def convert_models():
    from transformers import AutoTokenizer
    cache_dir = get_cache_directory()
    tokenizer = AutoTokenizer.from_pretrained(
        tokenizer_path,
        cache_dir=cache_dir,
        use_fast=False
    )
    build_customop_model('SentencepieceTokenizer', encoder_model_path, opset_version=12)
    build_customop_model('VectorToString', decoder_model_path, decoder=tokenizer.decoder)

def inference_and_dump_full_model(inputs):
    config = AutoConfig.from_pretrained(config_file, cache_dir=get_cache_directory())
    core_model = pyfunc_from_model(core_model_path)
    tokenizer = pyfunc_from_model(encoder_model_path)
    with trace_for_onnx(sentence, names=['string_input']) as tc_sess:
        preprocessing = preprocess(sentence=sentence)
        inference = _forward(model_inputs=preprocessing)
        results = postprocess(model_outputs=inference, aggregation_strategy='simple')
        output = replace_words_with_tokens(sentence, results)
        # Save all trace objects into an ONNX model
        tc_sess.save_to_onnx("full_model.onnx", output)
    return output

if not os.path.exists(decoder_model_path) or \
        not os.path.exists(encoder_model_path):
    convert_models()

output_ms = inference_and_dump_full_model(sentence)

where I have 2 problems:

Traceback (most recent call last):
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_packing.py", line 351, in <module>
    convert_models()
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_packing.py", line 330, in convert_models
    build_customop_model('SentencepieceTokenizer', encoder_model_path, opset_version=12, model=tokenizer)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/onnxprocess/_builder.py", line 45, in build_customop_model
    graph = SingleOpGraph.build_my_graph(op_class, **attrs)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/_cuops.py", line 234, in build_my_graph
    cuop = onnx.helper.make_node(op_type,
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnx/helper.py", line 115, in make_node
    node.attribute.extend(
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnx/helper.py", line 116, in <genexpr>
    make_attribute(key, value)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnx/helper.py", line 377, in make_attribute
    assert isinstance(bytes_or_false, bytes)
AssertionError

If I don't add the

Traceback (most recent call last):
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_packing.py", line 351, in <module>
    convert_models()
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_packing.py", line 331, in convert_models
    build_customop_model('VectorToString', decoder_model_path, decoder=tokenizer.decoder)
AttributeError: 'CamembertTokenizer' object has no attribute 'decoder'

Any help would be appreciated! 🤗 |
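The first traceback ends inside `onnx.helper.make_attribute`, so the value passed as `model=` was probably not something ONNX can store as a node attribute. The sketch below is a simplified pre-check, not the library's own logic (it ignores tensor and graph attributes): `is_plain_attribute_value`, `read_model_bytes` and `FakeTokenizer` are hypothetical names, and the idea that the `SentencepieceTokenizer` op wants the serialized SentencePiece model as bytes is an assumption that this thread does not confirm.

```python
import tempfile
from pathlib import Path
from typing import Any

# Plain Python values that map onto simple ONNX attribute kinds.
_SCALARS = (bytes, str, int, float)


def is_plain_attribute_value(value: Any) -> bool:
    """Rough check: scalars, or flat lists/tuples of scalars, pass."""
    if isinstance(value, _SCALARS):
        return True
    if isinstance(value, (list, tuple)):
        return all(isinstance(item, _SCALARS) for item in value)
    return False


def read_model_bytes(vocab_file: str) -> bytes:
    """Read the tokenizer's model file as raw bytes (assumed attribute payload)."""
    return Path(vocab_file).read_bytes()


class FakeTokenizer:
    """Stand-in for a HuggingFace tokenizer object (illustration only)."""

    def __init__(self, vocab_file: str) -> None:
        self.vocab_file = vocab_file


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "sentencepiece.bpe.model"
    path.write_bytes(b"\x0a\x04demo")  # placeholder bytes, not a real model
    tokenizer = FakeTokenizer(str(path))
    object_ok = is_plain_attribute_value(tokenizer)
    bytes_ok = is_plain_attribute_value(read_model_bytes(tokenizer.vocab_file))

print(object_ok)  # False: a tokenizer object is not an attribute value
print(bytes_ok)   # True: raw bytes are
```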

|

@wenbingl The problems I am facing come from this implementation (it seems that you made this 7 months ago):

class _GPT2Tokenizer(GPT2Tokenizer):
    @classmethod
    def serialize_attr(cls, kwargs):
        assert 'model' in kwargs, "Need model parameter to build the tokenizer"
        hf_gpt2_tokenizer = kwargs['model']
        attrs = {'vocab': json.dumps(hf_gpt2_tokenizer.encoder, separators=(',', ':'))}
        sorted_merges = {v_: k_ for k_, v_ in hf_gpt2_tokenizer.bpe_ranks.items()}
        attrs['merges'] = '\n'.join("{} {}".format(*sorted_merges[n_]) for n_ in range(len(sorted_merges)))
        return attrs

This works perfectly with GPT2 because the transformers model has

But this does not work with other tokenizers like the one I am using (CamembertTokenizer from SentencepieceTokenizer) because they don't have any

So how could I make it work? I am trying to code the function myself, but it doesn't work for the moment. Any hints?

class _SentencePieceTokenizer(SentencepieceTokenizer):
    @classmethod
    def serialize_attr(cls, kwargs):
        assert 'model' in kwargs, "Need model parameter to build the tokenizer"
        hf_sp_tokenizer = kwargs['model']
        attrs = {'vocab': json.dumps(hf_sp_tokenizer._tokenize(), separators=(',', ':'))}
        return attrs

I am looking for a way to implement it for my use case, and I could make a PR if I succeed! |

|

It seems that this addition works for

class _SentencePieceTokenizer(SentencepieceTokenizer):
    @classmethod
    def serialize_attr(cls, kwargs):
        assert 'model' in kwargs, "Need model parameter to build the tokenizer"
        hf_sp_tokenizer = kwargs['model']
        attrs = {'vocab': json.dumps(hf_sp_tokenizer.vocab_file, separators=(',', ':'))}
        return attrs

But I still have a problem when saving the full model to ONNX.

Traceback (most recent call last):
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_trace.py", line 367, in <module>
    output_ms = inference_and_dump_full_model(sentence)
  File "/home/Thomas.Chaigneau/code/arkea/onnx-conversion-nlp/packaging_tools/ner_trace.py", line 355, in inference_and_dump_full_model
    tc_sess.save_as_onnx(
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/onnxprocess/_session.py", line 344, in save_as_onnx
    m = self.build_model(model_name, doc_string)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/onnxprocess/_session.py", line 323, in build_model
    graph = self.build_graph(container, self.inputs, self.outputs, model_name)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/onnxprocess/_session.py", line 304, in build_graph
    nodes = ONNXModelUtils.topological_sort(container, nodes, ts_inputs, ts_outputs)
  File "/home/Thomas.Chaigneau/miniconda3/envs/onnx-conversion/lib/python3.9/site-packages/onnxruntime_extensions-0.4.0+70aa18e-py3.9-linux-x86_64.egg/onnxruntime_extensions/onnxprocess/_session.py", line 122, in topological_sort
    op = op_output_map[y_.name].name
AttributeError: 'dict' object has no attribute 'name'

It seems that the decoder isn't working as it should. Any hints? |

|

I went through the C API and the application interfaces, e.g. the C++, Java and C# API wrappers. Now I can follow the discussion here better.

Questions

What do you all think about making those contributed operators written in C++ available through the onnxruntime repos (e.g. transformer pre- and post-processing) as a reusable interoperability library for e.g. Java and C#, in the same way as the BertExtractor discussed here? That way, one could simply use the ONNX model exported directly from HuggingFace, without needing onnxruntime-extensions to achieve the ONE-STEP ONNX model. It would be a library consisting of contributed operators, but not used as a custom operator DLL. |

|

Loading the custom op library works pretty well from Java, I've had need to use it since I originally made those comments and it's fine. It would be nice if it was packaged more easily so I didn't have to download a Python wheel and unzip it, but it's doable. You can always export the Tokenizer separately as its own ONNX model and then load both the model and tokenizer in as ORT sessions. The graph that @chainyo posted on 12th October shows how that would work. Alternatively once you've exported the model & tokenizer separately you could stitch them together by rewriting the protobuf. I've done this before, and it's unfortunately quite a painful manual procedure at the REPL, but it's definitely possible with the tools available today. ORT is looking at adding support for executing single operators, which would allow you to load the custom tokenizer op and execute it directly, before handing the result into the actual model which would also simplify things. |
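The two-session approach described above can be sketched in Python. This is a sketch under stated assumptions, not a tested deployment: it assumes the `onnxruntime` and `onnxruntime-extensions` Python packages, and that the tokenizer's output names match the model's input names. `chain` and `make_stage` are hypothetical helpers, and the stub stages at the bottom stand in for real ONNX files.

```python
from typing import Any, Callable, Dict

Feeds = Dict[str, Any]


def chain(tokenize: Callable[[Feeds], Feeds],
          infer: Callable[[Feeds], Any]) -> Callable[[Feeds], Any]:
    """Compose two stages so the tokenizer's outputs become the model's inputs."""
    def run(feeds: Feeds) -> Any:
        return infer(tokenize(feeds))
    return run


def make_stage(model_path: str, use_custom_ops: bool = False) -> Callable[[Feeds], Feeds]:
    """Wrap an ONNX file as a stage that maps named inputs to named outputs."""
    import onnxruntime as ort  # lazy imports keep the stubs below dependency-free

    options = ort.SessionOptions()
    if use_custom_ops:
        # The custom op library must be registered before the session is created.
        from onnxruntime_extensions import get_library_path
        options.register_custom_ops_library(get_library_path())
    session = ort.InferenceSession(model_path, options,
                                   providers=["CPUExecutionProvider"])
    output_names = [out.name for out in session.get_outputs()]

    def stage(feeds: Feeds) -> Feeds:
        return dict(zip(output_names, session.run(output_names, feeds)))
    return stage


# Stub stages so the composition can be run without any model files.
def fake_tokenizer(feeds: Feeds) -> Feeds:
    words = feeds["string_input"][0].split()
    return {"input_ids": [[len(word) for word in words]]}


def fake_model(feeds: Feeds) -> int:
    return sum(feeds["input_ids"][0])


pipeline = chain(fake_tokenizer, fake_model)
print(pipeline({"string_input": ["hello onnx runtime"]}))  # 16
```

With real files, the pipeline would be built as `chain(make_stage(tokenizer_path, use_custom_ops=True), make_stage(model_path))`, where both paths are placeholders for your own exports.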

|

@Craigacp

Is this what you are referring to?

===============================

/** \brief: Create onnxruntime native operator
 *
 * \param[in] kernel info
 * \param[in] operator name
 * \param[in] operator domain
 * \param[in] operator opset
 * \param[in] name of the type constraints, such as "T" or "T1"
 * \param[in] type of each constraint
 * \param[in] number of constraints
 * \param[in] attributes used to initialize the operator
 * \param[in] number of the attributes
 * \param[out] operator that has been created
 *
 * \since Version 1.12.
 */
ORT_API2_STATUS(CreateOp,
                _In_ const OrtKernelInfo* info,
                _In_ const char* op_name,
                _In_ const char* domain,
                _In_ int version,
                _In_opt_ const char** type_constraint_names,
                _In_opt_ const ONNXTensorElementDataType* type_constraint_values,
                _In_opt_ int type_constraint_count,
                _In_opt_ const OrtOpAttr* const* attr_values,
                _In_opt_ int attr_count,
                _Outptr_ OrtOp** ort_op);

/** \brief: Invoke the operator created by OrtApi::CreateOp
 * The inputs must follow the order as specified in onnx specification
 *
 * \param[in] kernel context
 * \param[in] operator that has been created
 * \param[in] inputs
 * \param[in] number of inputs
 * \param[in] outputs
 * \param[in] number of outputs
 *
 * \since Version 1.12.
 */
ORT_API2_STATUS(InvokeOp,
                _In_ const OrtKernelContext* context,
                _In_ const OrtOp* ort_op,
                _In_ const OrtValue* const* input_values,
                _In_ int input_count,
                _Inout_ OrtValue* const* output_values,
                _In_ int output_count);

|

|

I am thinking of being Python-independent in C# once I have the HuggingFace ONNX model exported. Instead of reinventing the wheel in C#, could I use TorchSharp to coordinate all the pre- and post-processing? I see what you suggest: it is possible to export the pre- and post-processing contributed operators as separate ONNX models. Eventually there are 3 ONNX models.

Then it is possible to use e.g. C# to stitch all 3 ONNX models together to get the result, instead of having a ONE-STEP (all included: pre, inference, post) ONNX model. Am I on the right track? |

I think so. I've not done the pass through the C API to get my todo list for Java yet, so I'd missed it had been merged.

Yes. You can combine the ONNX models (which are just protobufs) using anything that can read protobufs. I've edited them in Python and Java depending on what I was doing, and it's presumably possible to do the editing in C# too. |

Thanks for confirming! Do you have a toy example combining ONNX models using Java? I am very curious how you did it. I'd appreciate it if you have time to share. |

|

I usually do this kind of thing at the Java or Python REPL and it's custom for every model so I don't have any specific code to share. In the Python ONNX library they have a function which does all the nasty stuff for you - https://github.com/onnx/onnx/blob/main/docs/PythonAPIOverview.md#onnx-compose, but underneath the covers it's just manipulating the protobufs, there's nothing special happening (https://github.com/onnx/onnx/blob/main/onnx/compose.py#L233). |
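For the Python route, the `onnx.compose` function mentioned above could be used roughly as follows. This is a minimal sketch, assuming the `onnx` Python package is installed and that the tokenizer model's outputs carry the same names as the core model's inputs; `build_io_map` and `merge` are hypothetical helpers. `merge_models` can reject models whose IR or opset versions disagree, or whose graphs share names, so a real merge may need extra preparation.

```python
from typing import List, Sequence, Tuple


def build_io_map(producer_outputs: Sequence[str],
                 consumer_inputs: Sequence[str]) -> List[Tuple[str, str]]:
    """Pair each output of the first model with the same-named input of the second."""
    wanted = set(consumer_inputs)
    return [(name, name) for name in producer_outputs if name in wanted]


def merge(tokenizer_path: str, model_path: str, merged_path: str) -> None:
    """Stitch the tokenizer graph in front of the core model and save the result."""
    import onnx  # lazy import: build_io_map itself needs only the standard library
    from onnx import compose

    tokenizer = onnx.load(tokenizer_path)
    core = onnx.load(model_path)
    io_map = build_io_map([out.name for out in tokenizer.graph.output],
                          [inp.name for inp in core.graph.input])
    merged = compose.merge_models(tokenizer, core, io_map=io_map)
    onnx.save(merged, merged_path)


# Name matching on its own, using hand-written names for illustration.
pairs = build_io_map(["input_ids", "attention_mask", "token_offsets"],
                     ["input_ids", "attention_mask"])
print(pairs)  # [('input_ids', 'input_ids'), ('attention_mask', 'attention_mask')]
```

Matching by name keeps the sketch short; when the names differ, the `io_map` pairs have to be written out by hand.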

Hi, I don't fully understand the scope of this package, but is there a way to use the scripts to get a Java tokenizer?

I converted my python model to onnx and I am aiming to run it with a Java runtime, but I don't have a clear understanding of what the extension is designed for. Is there a way to use it with a Java Sentencepiece tokenizer?

I read in your docs that a lot of tokenizers are supported, but is that only for Python? (here)

Best regards
