
I can convert the pretrained model listed in this repository: https://github.com/salesforce/BLIP, However, at the inference time, I receive this error message: Name:'MatMul_32007' Status Message: matmul_helper.h:61 Compute MatMul dimension mismatch

Using these two modifications, the model accepts an image as an input and produces a tensor output having 20 entries. Having done the export, I used the following code to use for inference:

import torchvision.transforms.functional as F

import torch.onnx

import onnx

import onnxruntime

import PIL

import torchvision.transforms.functional as transform

path_to_onnx_file="BLIP_base.onnx" # path to the exported model

ort_session = onnxruntime.InferenceSession(path_to_onnx_file,providers=['TensorrtExecutionProvider',

'CUDAExecutionProvider',

'CPUExecutionProvider'])

def to_numpy(tensor):

return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()

image_path='out268.jpg' # change to any image

PIL_image = PIL.Image.open(image_path)

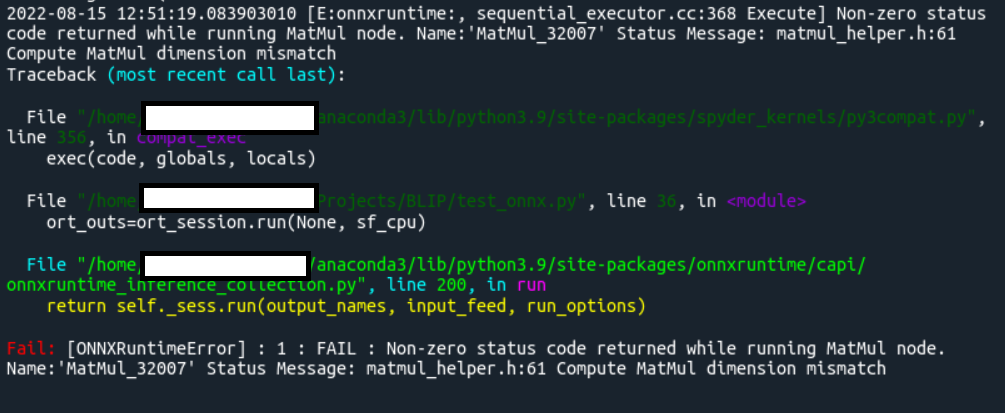

When I run the above code, I receive the following error:

Fail: [ONNXRuntimeError] : 1 : FAIL : Non-zero status code returned while running MatMul node. Name:'MatMul_32007' Status Message: matmul_helper.h:61 Compute MatMul dimension mismatch

I have seen other people try to fix this mismatch issue for the Cuda part, but it did not work for me as I want to run it using CPU mode. It is of great importance to fix the issue ASAP.

System information

- OS Platform and Distribution: Linux Ubuntu 18.04
- ONNX Runtime installed from: pip
- ONNX Runtime version: 1.12.1
- Python version: 3.9
- CUDA/cuDNN version: 11.3
- GPU model and memory: NVIDIA GeForce RTX 3090, 24 GB

Expected behavior

The session should return an output tensor with 20 entries, as the exported PyTorch model does.


I am also attempting to convert this model to ONNX. I patched blip.py with your patch and ran the export code you provided. The export then crashed in python39.dll after printing `Warning: Constant folding in symbolic shape inference fails: index_select(): Index is supposed to be a vector` and `IndexError: index_select(): Index is supposed to be a vector`.

Bug:

I can convert the pretrained model from this repository, https://github.com/salesforce/BLIP, to ONNX. However, at inference time I receive this error message: Name:'MatMul_32007' Status Message: matmul_helper.h:61 Compute MatMul dimension mismatch

To Reproduce

Before exporting the model, the `forward()` function starting at line 105 of `models/blip.py` (https://github.com/salesforce/BLIP/blob/main/models/blip.py#L105) should be changed to:

To export the model to ONNX, I used the following code:

```python
import torch
from torchvision import transforms
from torchvision.transforms.functional import InterpolationMode

from models.blip import blip_decoder  # BLIP repository, with the patched forward()

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

image_size = 384

# BLIP's inference preprocessing (defined for reference; not used by the export itself)
transform = transforms.Compose([
    transforms.ToPILImage(),
    transforms.Resize((image_size, image_size), interpolation=InterpolationMode.BICUBIC),
    transforms.ToTensor(),
    transforms.Normalize((0.48145466, 0.4578275, 0.40821073),
                         (0.26862954, 0.26130258, 0.27577711)),
])

model_url = 'https://storage.googleapis.com/sfr-vision-language-research/BLIP/models/model*_base_caption.pth'

model = blip_decoder(pretrained=model_url, image_size=image_size, vit='base')
model.eval()

print('export started')

batch_size = 1
# Dummy input used for tracing: one 3-channel 384x384 image
sf = torch.randn(batch_size, 3, image_size, image_size)

model.to('cpu')

torch.onnx.export(model,
                  sf,
                  "BLIP_base.onnx",
                  export_params=True,
                  verbose=True,
                  opset_version=14,
                  do_constant_folding=True,
                  input_names=['input'],
                  output_names=['output'],
                  # Only dim 0 is dynamic; the other input dims stay fixed at 3x384x384
                  dynamic_axes={'input': {0: 'batch_size'},
                                'output': {0: 'batch_size'}})

print('Export ended')
```

With these two modifications, the model takes an image as input and produces an output tensor with 20 entries. After the export, I ran inference with the following code:

```python
import onnxruntime
import torchvision.transforms.functional as F
from PIL import Image  # `import PIL` alone does not guarantee PIL.Image is loaded

path_to_onnx_file = "BLIP_base.onnx"  # path to the exported model

ort_session = onnxruntime.InferenceSession(path_to_onnx_file,
                                           providers=['TensorrtExecutionProvider',
                                                      'CUDAExecutionProvider',
                                                      'CPUExecutionProvider'])


def to_numpy(tensor):
    # Detach from the autograd graph if needed, then move to CPU and convert
    return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()


image_path = 'out268.jpg'  # change to any image

PIL_image = Image.open(image_path)

tensor_image = F.to_tensor(PIL_image)  # (C, H, W), float values in [0, 1]
tensor_image = tensor_image[None]       # add a batch dimension -> (1, C, H, W)
tensor_image = F.resize(tensor_image, [384, 384])

sf_cpu = {'input': to_numpy(tensor_image)}
ort_outs = ort_session.run(None, sf_cpu)
```

When I run the above code, I receive the following error:

Fail: [ONNXRuntimeError] : 1 : FAIL : Non-zero status code returned while running MatMul node. Name:'MatMul_32007' Status Message: matmul_helper.h:61 Compute MatMul dimension mismatch
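The ONNX `MatMul` operator is specified to behave like `numpy.matmul`. For operands of shape `(..., M, K)` and `(..., K, N)`, the inner dimensions `K` must agree, and any leading batch dimensions are broadcast. The status `Compute MatMul dimension mismatch` means this check failed at run time for node `MatMul_32007`. The helper below, `matmul_output_shape`, is hypothetical and is not an ONNX Runtime API. It is a minimal pure-Python sketch of that shape rule, meant only for reasoning about which operand shapes would trigger the error.

```python
from typing import Sequence, Tuple


def broadcast_dims(a: Sequence[int], b: Sequence[int]) -> Tuple[int, ...]:
    """Broadcast two batch-dimension tuples with NumPy rules (right-aligned)."""
    n = max(len(a), len(b))
    # Left-pad the shorter tuple with 1s so both have the same length
    a = (1,) * (n - len(a)) + tuple(a)
    b = (1,) * (n - len(b)) + tuple(b)
    result = []
    for x, y in zip(a, b):
        if x == y or y == 1:
            result.append(x)
        elif x == 1:
            result.append(y)
        else:
            raise ValueError(f"batch dimensions {x} and {y} cannot be broadcast")
    return tuple(result)


def matmul_output_shape(a_shape: Sequence[int], b_shape: Sequence[int]) -> Tuple[int, ...]:
    """Output shape of a numpy.matmul-style MatMul; raises ValueError on mismatch."""
    if not a_shape or not b_shape:
        raise ValueError("MatMul operands must be at least 1-D")
    a, b = tuple(a_shape), tuple(b_shape)
    # 1-D operands are promoted to matrices; the added dimension is dropped at the end
    a_vec, b_vec = len(a) == 1, len(b) == 1
    if a_vec:
        a = (1,) + a
    if b_vec:
        b = b + (1,)
    m, k_a = a[-2], a[-1]
    k_b, n = b[-2], b[-1]
    if k_a != k_b:
        # This is the condition ONNX Runtime reports as "dimension mismatch"
        raise ValueError(f"dimension mismatch: inner dims {k_a} and {k_b} differ")
    out = broadcast_dims(a[:-2], b[:-2]) + (m, n)
    if a_vec:
        out = out[:-2] + out[-1:]  # drop the promoted M dimension
    if b_vec:
        out = out[:-1]             # drop the promoted N dimension
    return out
```

With purely illustrative shapes, a `(1, 577, 768)` activation times a `(768, 768)` weight gives `(1, 577, 768)`. The same activation against a `(512, 768)` weight raises the mismatch error.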

I have seen other people work around this mismatch on the CUDA execution provider, but those fixes did not work for me because I need to run the model on the CPU. Fixing this is urgent for me.
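In the export code, `dynamic_axes` marks only dimension 0 of `input` as symbolic, so the exported graph should expect arrays of shape `(batch_size, 3, 384, 384)`. `onnxruntime.InferenceSession.get_inputs()` reports a graph input's shape as a list. Symbolic dimensions appear as strings such as `'batch_size'`, and unnamed dynamic dimensions appear as `None`. A first sanity check is to compare the array passed to `ort_session.run` against that list. The helper `shape_matches` below is hypothetical, a minimal sketch under those assumptions. It only validates the graph input, so it cannot catch a mismatch that arises from shapes computed deeper inside the graph.

```python
from typing import Optional, Sequence, Union

# An ONNX Runtime input dimension: fixed int, symbolic name (str), or unknown (None)
Dim = Optional[Union[int, str]]


def shape_matches(actual: Sequence[int], expected: Sequence[Dim]) -> bool:
    """Return True if a concrete array shape fits an input signature.

    Integer entries in `expected` must match exactly; string entries
    (symbolic dims such as 'batch_size') and None accept any size.
    """
    if len(actual) != len(expected):
        return False
    for got, want in zip(actual, expected):
        if isinstance(want, int) and got != want:
            return False
    return True


# Signature assumed for the export above, as get_inputs()[0].shape would list it
expected_input = ['batch_size', 3, 384, 384]
```

In the inference script, this would be called as `shape_matches(sf_cpu['input'].shape, ort_session.get_inputs()[0].shape)`. A `False` result, for example from a 4-channel image, points at the preprocessing rather than at the graph.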
