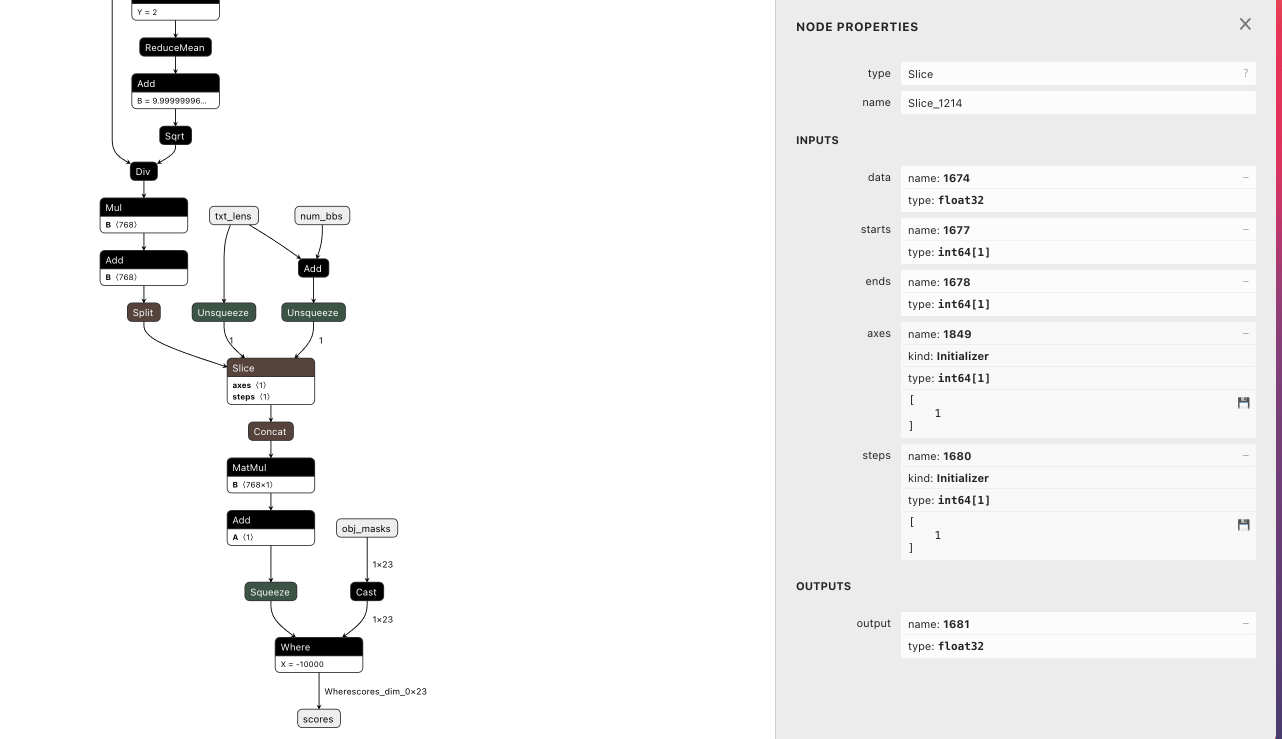

While I was doing inference with onnxruntime, I got this error: return self._sess.run(output_names, input_feed, run_options) onnxruntime.capi.onnxruntime_pybind11_state.Fail: [ONNXRuntimeError] : 1 : FAIL : Non-zero status code returned while running Slice node. Name:'Slice_1214' Status Message: slice.cc:260 FillVectorsFromInput Starts must be a 1-D array #8735

Comments

Can anyone help me figure out how to fix this bug? The input to my PyTorch model contains an ndarray of shape (1,).

Can you please provide more details?

I first converted the PyTorch model to an ONNX model.

I tried using num_bbs and txt_lens with shape (1,) and ended up with the same error.

Hi, it's still unclear to me which input is causing the issue.

Your starts would be 1677, and the output is 1681. If you click on the Concat node below, it should show that its input is 1681. Now you can click on the 3 nodes above the Slice to find out what 1677 is, and keep searching upwards ...
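The upward search described here can also be scripted instead of clicked through in a graph viewer. The following is only a sketch: `trace_producers` is a hypothetical helper, and the toy nodes (including the names `Unsqueeze_a`, `1670`, `1680` and `data`) are made up so that the snippet runs without a model file. Only `Slice_1214`, `1677` and `1681` come from this thread.

```python
from types import SimpleNamespace


def trace_producers(nodes, tensor_name, max_depth=3):
    """Walk upwards from tensor_name and list the nodes that produce it.

    Each entry is (depth, op_type, node_name, produced_tensor).
    """
    # Map every tensor name to the node that outputs it.
    by_output = {out: node for node in nodes for out in node.output}
    trail, seen = [], set()
    frontier = [(tensor_name, 0)]
    while frontier:
        name, depth = frontier.pop(0)
        node = by_output.get(name)
        # Stop at graph inputs/initializers, at the depth limit, or on repeats.
        if node is None or depth >= max_depth or id(node) in seen:
            continue
        seen.add(id(node))
        trail.append((depth, node.op_type, node.name, name))
        frontier.extend((inp, depth + 1) for inp in node.input)
    return trail


# Stand-in nodes (hypothetical) that mimic the fields of ONNX graph nodes.
toy_nodes = [
    SimpleNamespace(name="Unsqueeze_a", op_type="Unsqueeze",
                    input=["1670"], output=["1677"]),
    SimpleNamespace(name="Slice_1214", op_type="Slice",
                    input=["data", "1677", "1680"], output=["1681"]),
]

for step in trace_producers(toy_nodes, "1677"):
    print(step)
```

With the `onnx` package installed, the same function should work on a real model by passing `onnx.load("model.onnx").graph.node` as `nodes`, since those nodes expose the same `name`, `op_type`, `input` and `output` fields.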

And here is the PyTorch code for the slice part. ############ ############

Hi, the graph looks OK. It could be that at runtime the actual data fed into starts is not 1-D. Can you please click on the 3 nodes (1 Split and 2 Unsqueeze) above the Slice and see where 1677 is coming from, just to confirm?

I saw in your earlier post that the ORT inputs are [[23]] and [[39]], which is not consistent with the torch inputs [23] and [39]. Why are the input shapes different? Have you tried [23] and [39] as the ORT inputs, since it has to be 1-D? (I thought you might have done so.)

Again, can you please share your system info, including the torch and Python versions? It would also be great if you could reduce the model size and share a reproducible model.onnx with code and sample inputs, so that I can debug it further and see where it's broken.

Another option is to debug the code yourself using VS Code. In that case, you need to build onnxruntime from source in debug mode.
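The [[23]] versus [23] mismatch mentioned above is easy to miss by eye, so a quick shape check on the feed dictionary before calling the session may help. This is a minimal sketch: `non_1d_inputs` is a hypothetical helper, and pairing the values 23 and 39 with the names `txt_lens` and `num_bbs` is an assumption made only for illustration.

```python
import numpy as np


def non_1d_inputs(feed, expected_1d):
    """Return {name: shape} for the listed inputs that are not exactly 1-D."""
    return {
        name: np.asarray(feed[name]).shape
        for name in expected_1d
        if np.asarray(feed[name]).ndim != 1
    }


# Assumed pairing of names and values; only the shapes matter here.
ort_feed = {"txt_lens": np.array([[23]]), "num_bbs": np.array([[39]])}
torch_like_feed = {"txt_lens": np.array([23]), "num_bbs": np.array([39])}

print(non_1d_inputs(ort_feed, ["txt_lens", "num_bbs"]))         # both flagged as (1, 1)
print(non_1d_inputs(torch_like_feed, ["txt_lens", "num_bbs"]))  # nothing flagged
```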

Yes, please, and if you can provide standalone code to reproduce the issue, that would be great.

I'm going to debug onnxruntime with the debug-mode source build first. Thank you so much.

Sounds good. If you have trouble setting it up, please feel free to ping back.

Absolutely, thank you very much!

Contributions are more than welcome if you actually find an issue and have a fix for it.

Hi, how do I build onnxruntime from source for debugging? I git cloned it and used this to build: "./build.sh --cmake_extra_defines onnxruntime_DEBUG_NODE_INPUTS_OUTPUTS=1". The build succeeded, but when I import onnxruntime, it says there is no module named onnxruntime. Is it a path problem, or did I not do the build the right way?
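A "no module named onnxruntime" message usually means the interpreter in use cannot see an installed onnxruntime package, whatever the native build produced. As a rough diagnostic sketch (the helper name `locate_module` is hypothetical), the standard library can report which interpreter is running and whether it can find the package at all:

```python
import importlib.util
import sys


def locate_module(name):
    """Return the file a top-level module would be loaded from, or None if not found."""
    spec = importlib.util.find_spec(name)
    return None if spec is None else spec.origin


# Which Python is running, and can it see the package?
print(sys.executable)
print(locate_module("onnxruntime"))
```

If the second line prints `None`, the package is not installed for that interpreter, and the build documentation is the place to check how to produce and install the Python package from a source build.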

Please see https://onnxruntime.ai/docs/how-to/build/inferencing.html

@SkylerZheng

I think the error happened during the conversion from the PyTorch model to the ONNX model. Here is an exception that occurred during the conversion:

[W shape_type_inference.cpp:419] Warning: Constant folding in symbolic shape inference fails: index_select(): Index is supposed to be a vector

And in the modeling script, the slicing happened here:

Could you tell me how to debug with onnx and onnxruntime? I built both of them from source, but I do not know how to debug them under PyCharm (I use Python only).

Hi, I solved the problem. I simply changed len and len+nbb to 1-D arrays manually with np.asarray, and now it works. Thank you very much for your help during this process.
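The exact change is not shown in the thread, so the following is only a guess at its shape: a hypothetical helper `as_1d` that forces the slice bounds to be 1-D with `np.asarray` plus a reshape. The variable names `txt_len` and `num_bb` and their values are assumptions used for illustration.

```python
import numpy as np


def as_1d(value):
    """Coerce a scalar, 0-d array, or nested array into a flat 1-D array."""
    return np.asarray(value).reshape(-1)


# Hypothetical stand-ins for "len" and "nbb" from the comment above.
txt_len = np.int64(23)       # a 0-d scalar
num_bb = np.array([[39]])    # a 2-D array of shape (1, 1)

start = as_1d(txt_len)
end = as_1d(txt_len + num_bb)
print(start.shape, end.shape)
```

Both bounds end up with shape `(1,)`, which is the form the Slice error message asks for.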
