[BUG] IsADirectoryError while selecting S3 artifact in the UI #3154

Comments

|

@amiryi365 Thanks for filing this issue. I was able to reproduce the same error using a folder named mlflow/mlflow/server/handlers.py Lines 169 to 171 in df7cc84

|

|

Thanks for replying @harupy |

|

@amiryi365 The error indicates your folder name contains a text file extension. Can you share the code you used to log artifacts? |

|

@harupy the bug is probably not in my code, i.e., I wrote the run code, but it worked well (no exceptions, I can see all run details in PG tables and in the UI, I can see all the files in minio browser and download them, and they look fine). I don't use Finally I do in my __exit__(): |

|

@amiryi365 I see. Are you experiencing an error like below? |

|

Exactly! |

|

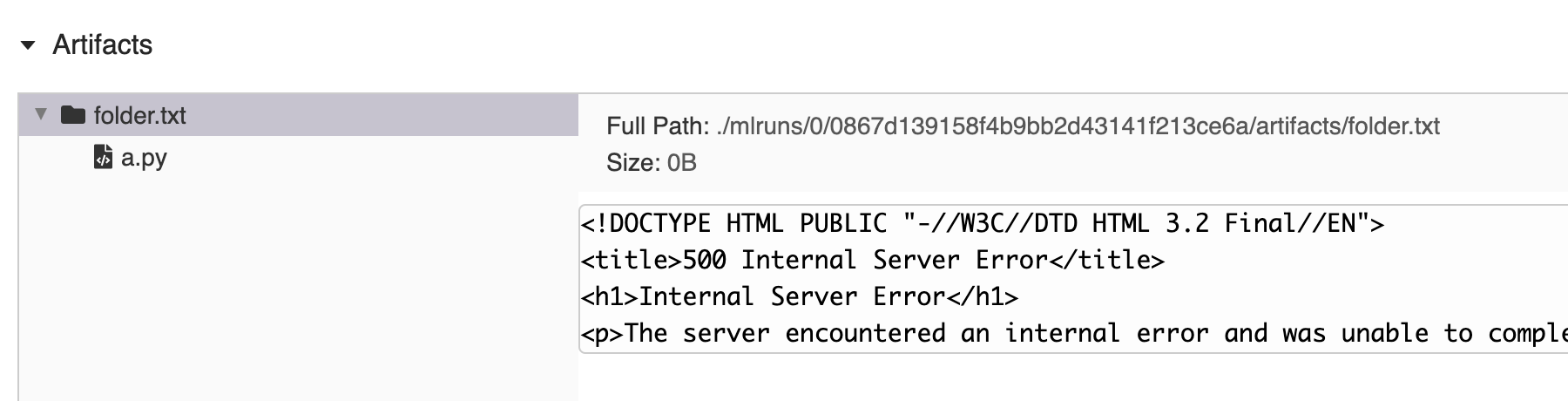

@amiryi365 Can you take a screenshot and share it if possible? |

|

@harupy I can't. But you say you can reproduce the bug... More Info: My client is Windows10 and I run it directly as python (from pycharm), not with |

|

@amiryi365 Got it. Do your artifacts contain a folder like mine in the image above? |

|

@harupy I logged all artifacts under 'log' but it didn't help... |

|

@harupy In your image I can see you're using file uri and not s3 uri |

|

@amiryi365 Yep I just wanted to show that a folder named like |

|

I've just used 2 folders: 'logs' and 'config' - the bug is still there for all files! |

|

So your folder structure looks like: and when you try to open |

|

@harupy Exactly! |

Does this mean that you have a folder named like |

|

@harupy There's a folder named like |

|

@amiryi365 I have set up a minio server following this doc and tested artifact logging, but wasn't able to reproduce the issue. code:

    import mlflow

    EXPERIMENT_NAME = 'minio'
    BUCKET_NAME = 'test'

    # Create the experiment with an S3 artifact location if it does not exist yet.
    if not mlflow.get_experiment_by_name(EXPERIMENT_NAME):
        mlflow.create_experiment(EXPERIMENT_NAME, f's3://{BUCKET_NAME}')

    mlflow.set_experiment(EXPERIMENT_NAME)

    with mlflow.start_run():
        mlflow.log_param('p', 1)
        mlflow.log_metric('m', 1)
        # Log the same file at the artifact root and under a 'data' subfolder.
        mlflow.log_artifact('minio.py')
        mlflow.log_artifact('minio.py', artifact_path='data')

|

@harupy I see...

|

|

@harupy Hi there! My patch fix is: It seems s3_root_path includes already the filename, e.g.: Any idea? |

|

@amiryi365 Thanks!

This indicates that |

|

@harupy I'm not sure... |

|

@amiryi365 You can use

    import mlflow

    with mlflow.start_run() as parent_run:
        # Artifact URI of the parent run
        print(mlflow.get_artifact_uri())
        with mlflow.start_run(nested=True) as child_run:
            # Artifact URI of the nested (child) run
            print(mlflow.get_artifact_uri())
            ... |

|

@harupy I get: s3://mlflow/3/xxx/artifacts, so I was wrong putting the params in my test... |
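For reference, an artifact URI like the one above splits into a bucket name and a key prefix. The following is a minimal sketch using only the standard library; `split_s3_uri` is a hypothetical helper introduced here for illustration and is not part of MLflow.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split an s3:// URI into (bucket, key_prefix).

    Hypothetical helper for illustration; not an MLflow function.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "s3":
        raise ValueError(f"not an S3 URI: {uri}")
    # netloc holds the bucket; the path holds the key prefix with a leading slash.
    return parsed.netloc, parsed.path.lstrip("/")


if __name__ == "__main__":
    print(split_s3_uri("s3://mlflow/3/xxx/artifacts"))
```

If the key prefix already ends in a file name rather than in `artifacts`, that would point to a wrongly constructed root path.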

|

@amiryi365 What do you mean by |

|

@harupy I ran something like: |

|

@amiryi365 Did you try inserting |

|

@harupy see above (I edited it), I already told you what was my problem adding prints |

|

@amiryi365 Sorry I missed the edit. Actually, you can change the installed package code. The code below shows where |

|

@harupy that's what I did! But although I changed the py file, and also deleted its pyc file, it didn't run my new file! |

|

@harupy I didn't. |

|

@amiryi365 Did you run |

|

@harupy I just tried - it doesn't work on the installed package. |

|

Just to confirm, what you did yesterday was directly editing the source code of the installed mlflow? |

|

@harupy I added prints to the source code of the installed mlflow, but it didn't "catch" it because it ran the original code.

How did you confirm that? |

|

@harupy I didn't see my prints. Also, when I deleted the pyc file, it recreated the original one. Other tries: |
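When edits to an installed package seem to be ignored, one common cause is that the running process imports a different copy of the package (for example from another virtualenv). The sketch below, which assumes it is run with the same interpreter that starts the server, prints the file Python would actually load; `module_origin` is a hypothetical helper name.

```python
import importlib.util


def module_origin(name):
    """Return the file path Python would load for module `name`, or None.

    Hypothetical helper for illustration. Note that resolving a dotted
    name imports its parent packages.
    """
    try:
        spec = importlib.util.find_spec(name)
    except ImportError:
        # Raised when a parent package of a dotted name is missing.
        return None
    return None if spec is None else spec.origin


if __name__ == "__main__":
    # Compare this path with the file that was edited.
    print(module_origin("mlflow.server.handlers"))
```

If the printed path differs from the edited file, the prints were added to a copy that the server never loads.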

|

@amiryi365 What did you do after changing the code? |

|

@harupy rerun it in the same way: |

|

@amiryi365 You added some prints to |

|

@harupy sure |

|

@amiryi365 I think just running |

|

@harupy of course it should call |

|

@amiryi365 So you clicked an artifact in the UI and nothing printed out. Did you still get the same error? |

|

@harupy of course I get the error. If I didn't, I could say I had solved it. |

|

@amiryi365 Can you open a file in the error stack trace and edit it to debug? |

|

@harupy I found the bug!!! How to debug mlflow server:

Bug: minio getting contained items list

Inconsistency: Possible Solution: |
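The details of the inconsistency and the proposed fix were not captured in this thread. As an illustration only, suppose the object store returns a file's own key when that file's path is used as the listing prefix; a "is this a directory?" check based on "listing is non-empty" would then misclassify the file. The sketch below shows one way to guard against that under this assumption. Both function names are hypothetical and this is not MLflow's implementation.

```python
def child_entries(listed_keys, requested_path):
    """Keep only keys strictly below `requested_path`.

    Assumption for illustration: `listed_keys` is whatever the store
    returned for a prefix listing, and may include the path itself.
    """
    prefix = requested_path.rstrip("/") + "/"
    return [key for key in listed_keys if key.startswith(prefix) and key != prefix]


def looks_like_directory(listed_keys, requested_path):
    """Treat a path as a directory only if it has real children."""
    return len(child_entries(listed_keys, requested_path)) > 0


if __name__ == "__main__":
    keys = ["logs/run.log"]
    print(looks_like_directory(keys, "logs/run.log"))  # the file itself
    print(looks_like_directory(keys, "logs"))          # its parent folder
```

Filtering on a trailing-slash prefix also keeps sibling keys such as `logs2/x` from being counted as children of `logs`.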

|

I found that this bug disappears with an older release of minio - 2020-04-28T23:56:56Z |

|

@amiryi365 Thanks for the investigation :) |

|

@Subhraj07 did you solve this? I'm having the same issue too :( |

System information

mlflow --version): 1.9.0

Describe the problem

My mlflow server runs on centos with Postgresql backend storage and S3 (minio) artifact storage:

mlflow server --backend-store-uri postgresql://<pg-location-and-credentials> --default-artifact-root s3://mlflow -h 0.0.0.0 -p 8000

I set all S3-relevant env vars:

MLFLOW_S3_ENDPOINT_URL, AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_DEFAULT_REGION
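Since both the server and the client need these variables, a quick check in each environment can rule out a missing one. This is a minimal sketch; `missing_s3_vars` is a hypothetical helper, and the variable list is taken from the line above.

```python
import os

# Variables named in the report above.
REQUIRED_S3_VARS = (
    "MLFLOW_S3_ENDPOINT_URL",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_DEFAULT_REGION",
)


def missing_s3_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_S3_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_s3_vars()
    print("missing:", ", ".join(missing) if missing else "none")
```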

I've successfully run several runs from another machine against this server:

Runs all finished OK, with params, metrics and artifacts.

PostgreSQL mlflow tables were updated accordingly.

All artifacts were stored in the minio bucket as expected and I can display and download them with the minio browser.

However, when I select any artifact in the UI, I get Internal Server Error in the browser.

Other info / logs

In the mlflow server I see the following error:

Actually, there is a '/tmp/<generated-name>/' which is really a directory and not a file!

This folder contains another directory with a generated name, and inside there's nothing!

I didn't find any similar error regarding mlflow and s3.

What's wrong?

What component(s), interfaces, languages, and integrations does this bug affect?

Components

area/artifacts: Artifact stores and artifact logging