Ensemble model only using 2 models to ensemble #478

Comments

Shouldn't it ensemble all trained models?

The ensemble tries all available models. Models that don't improve the performance are not added to the final ensemble. It is using this algorithm. If only one model is selected in the ensemble, then the ensemble is not selected as the best model. Two models in the ensemble are fine. In your example, 75% of the predictions are from
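The algorithm linked in the reply is not visible in this thread, so the following is only a minimal sketch under an assumption: that the ensemble is built by greedy forward selection with replacement (in the style of Caruana et al.'s ensemble selection). The function names, the MSE loss, and the toy data are hypothetical. It illustrates how a weak model can be left out entirely and how uneven weights (such as 75% for one model) can arise when the same model is picked several times.

```python
from collections import Counter

def mse(y_true, y_pred):
    """Mean squared error, used here as an illustrative loss (lower is better)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def greedy_ensemble(oof_preds, y_true, loss=mse, max_steps=20):
    """Greedy forward selection with replacement (assumed, not the library's code).

    oof_preds maps a model name to its out-of-fold predictions.
    Returns a dict mapping each selected model name to its weight.
    """
    selected = []                          # chosen model names, repeats allowed
    running_sum = [0.0] * len(y_true)      # sum of predictions of chosen models
    best_loss = float("inf")
    for _ in range(max_steps):
        step_best = None
        k = len(selected) + 1
        for name, preds in oof_preds.items():
            # Average of the current ensemble plus this candidate model
            candidate = [(s + p) / k for s, p in zip(running_sum, preds)]
            cand_loss = loss(y_true, candidate)
            if step_best is None or cand_loss < step_best[0]:
                step_best = (cand_loss, name)
        if step_best[0] >= best_loss:
            break                          # no candidate improves the ensemble
        best_loss = step_best[0]
        selected.append(step_best[1])
        running_sum = [s + p for s, p in zip(running_sum, oof_preds[step_best[1]])]
    counts = Counter(selected)
    return {name: c / len(selected) for name, c in counts.items()}

# Toy data: "c" is a poor model and should never be added.
y = [1.0, 0.0, 1.0, 0.0]
preds = {
    "a": [0.8, 0.2, 0.6, 0.4],
    "b": [0.6, 0.4, 0.9, 0.1],
    "c": [0.1, 0.9, 0.2, 0.8],
}
weights = greedy_ensemble(preds, y)
print(weights)
```

Because a model's weight is just the fraction of selection steps in which it was picked, an ensemble of two models with unequal weights is a normal outcome of this kind of procedure.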

Okay, that helps. Thanks. Could I also know which stacking/ensemble_stacking algorithm is being used? I also see only 1 model (Ensemble) in the stacking README.md.

For stacking, it uses the 5 best models from each algorithm (except baseline, linear model, and decision tree). The predictions from those models, plus the input data, become the new input for the stacked models. If the ensemble has only one model in it, it means that it couldn't build an ensemble of at least 2 models with better performance.
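The stacking input described above can be sketched roughly as follows. This is an illustration only, not the library's code: the leaderboard tuple layout, the algorithm labels in `EXCLUDED`, and both function names are hypothetical, and out-of-fold predictions are assumed to be available for each base model.

```python
from collections import defaultdict

# Hypothetical labels for the algorithm types that are skipped for stacking
EXCLUDED = {"Baseline", "Linear", "Decision Tree"}

def pick_base_models(leaderboard, per_algorithm=5):
    """Pick the best `per_algorithm` models of each non-excluded algorithm.

    leaderboard is a list of (model_name, algorithm, loss); lower loss is better.
    """
    by_algo = defaultdict(list)
    for name, algo, loss in leaderboard:
        if algo not in EXCLUDED:
            by_algo[algo].append((loss, name))
    chosen = []
    for algo in sorted(by_algo):
        chosen += [name for _, name in sorted(by_algo[algo])[:per_algorithm]]
    return chosen

def build_stacked_input(X, oof_preds, names):
    """Append each chosen model's out-of-fold prediction as an extra column."""
    return [list(row) + [oof_preds[n][i] for n in names] for i, row in enumerate(X)]

leaderboard = [
    ("1_Baseline", "Baseline", 0.9),
    ("2_Xgboost", "Xgboost", 0.4),
    ("3_Xgboost", "Xgboost", 0.3),
    ("4_CatBoost", "CatBoost", 0.2),
]
chosen = pick_base_models(leaderboard, per_algorithm=1)

X = [[1.0, 2.0], [3.0, 4.0]]
oof = {"4_CatBoost": [0.1, 0.9], "3_Xgboost": [0.2, 0.8]}
X_stacked = build_stacked_input(X, oof, chosen)
```

The stacked models are then trained on `X_stacked` instead of `X`, so they see both the original features and the base models' predictions.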

Thanks @pplonski for your prompt feedback. Closing the issue.

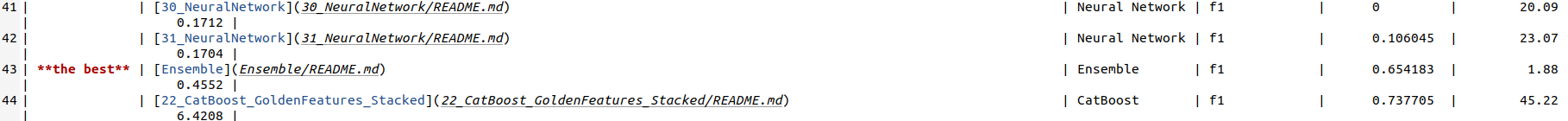

Hi @pplonski, just one more thing: the model reported as the best at the end of training isn't actually the best. As you can see in the screenshot, the best model is actually a stacked CatBoost one with an F1 score of 0.73, but the output says it's the "Ensemble" model, which has an F1 score of 0.65.

Also, the predict() function seems to output class 1 even if the probability for "prediction_1" is below 0.5 (see the screenshot). Is AutoML calibrating the classifier too? I didn't read about it anywhere in the documentation. I only gave sample_weights to class 1 since it's the minority class in my use case, but that's all.

In your example, the model with the highest F1 score is not selected as the best because you set a limit on the maximum prediction time for a single sample (maybe you are using

A threshold is used to compute the labels. It doesn't need to be 0.5; please check your model's README.md for the threshold value (the threshold which maximizes accuracy).
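To illustrate why a probability below 0.5 can still be labeled as class 1, here is a minimal sketch of choosing the accuracy-maximizing threshold and applying it. The function names and the toy data are hypothetical, and the search over observed probabilities is an assumption about how such a threshold could be found, not a description of the library's internals.

```python
def accuracy(y_true, labels):
    """Fraction of labels that match the ground truth."""
    return sum(t == l for t, l in zip(y_true, labels)) / len(y_true)

def to_labels(proba, threshold):
    """Label a sample as class 1 when its class-1 probability reaches the threshold."""
    return [1 if p >= threshold else 0 for p in proba]

def best_threshold(y_true, proba):
    """Try each observed probability as a threshold and keep the most accurate one."""
    best_t, best_acc = 0.5, -1.0
    for t in sorted(set(proba)):
        acc = accuracy(y_true, to_labels(proba, t))
        if acc > best_acc:                 # strict: ties keep the lower threshold
            best_t, best_acc = t, acc
    return best_t

y_true = [0, 0, 1, 1, 1]
proba = [0.1, 0.2, 0.35, 0.4, 0.8]         # predicted probability of class 1
threshold = best_threshold(y_true, proba)
labels = to_labels(proba, threshold)
```

In this toy data the positives at 0.35 and 0.4 are only classified correctly when the threshold sits below 0.5, so the selected threshold ends up under 0.5 without any probability calibration being involved.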

Okay, thank you. That makes sense.

I had a problem running SHAP with CatBoost: it either took too long to compute or threw errors (I don't remember now), so I had to disable it.

When I look at the README.md file in the Ensemble folder, it shows only 2 models out of the many others that were available to ensemble. Is there a reason for this? Also, when I look at Ensemble_stacked, it shows just 1 model, "Ensemble", as the one used for the stacked ensemble.
