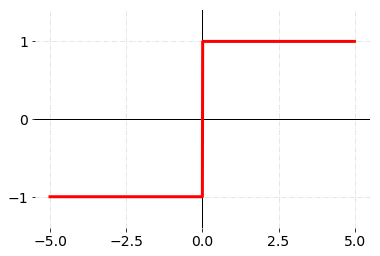

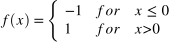

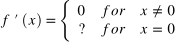

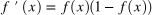

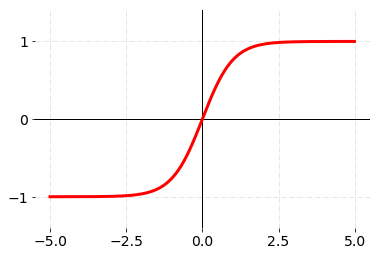

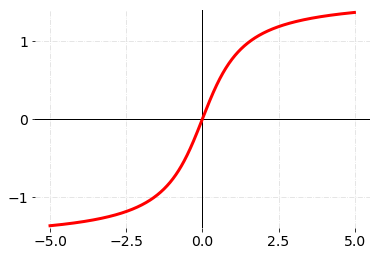

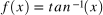

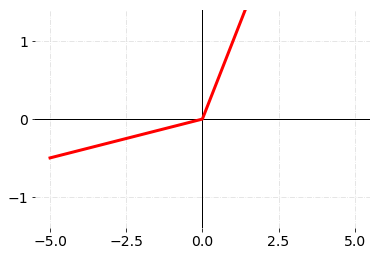

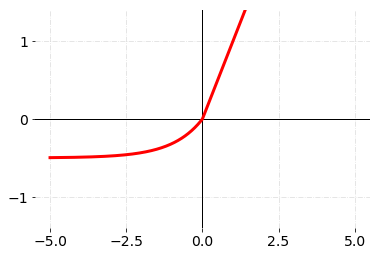

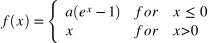

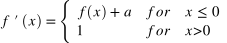

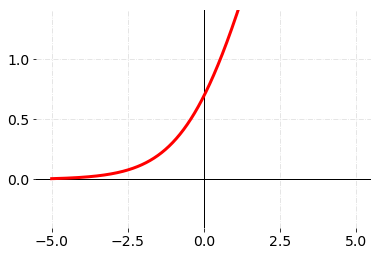

Activation functions largely determine how an artificial neural network behaves, so understanding them is essential. Because there are so many variants, I've compiled a concise overview of the most common ones.

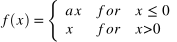

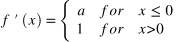

Full Python implementations of these functions, along with the accompanying plots, are available in the notebook or the Python file. Note that Parametric ReLU (PReLU) has the same form as Leaky ReLU, except that the leakage coefficient is learned during training as a network parameter instead of being fixed in advance.
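To make the Leaky ReLU versus Parametric ReLU distinction concrete, here is a minimal pure-Python sketch. It is not taken from the notebook: the function names, the toy squared-error loss, the learning rate, and the starting value of `alpha` are all assumptions chosen for illustration.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for x > 0, alpha * x otherwise.

    alpha is a fixed hyperparameter chosen before training.
    """
    return x if x > 0 else alpha * x


def prelu(x, alpha):
    """Parametric ReLU: same formula, but alpha is a trainable parameter."""
    return x if x > 0 else alpha * x


def prelu_grad_alpha(x):
    """Derivative of prelu with respect to alpha: 0 for x > 0, x otherwise."""
    return 0.0 if x > 0 else x


# One gradient-descent step on alpha, using a toy squared-error loss
# L = (prelu(x, alpha) - target) ** 2 (illustrative values only).
alpha = 0.25              # assumed initial leakage coefficient
x, target = -2.0, -1.0    # a single negative input and its desired output
learning_rate = 0.1

y = prelu(x, alpha)
grad_alpha = 2 * (y - target) * prelu_grad_alpha(x)  # chain rule
alpha -= learning_rate * grad_alpha

print(f"updated alpha: {alpha:.2f}")
```

With Leaky ReLU the coefficient would stay at its preset value. Here the update moves `alpha` toward a value that better fits the target for negative inputs, which is the only behavioral difference between the two functions.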