Recon [Paper Link]

Guangyuan Shi, Qimai Li, Wenlong Zhang, Jiaxin Chen and Xiao-Ming Wu

@article{shi2023recon,

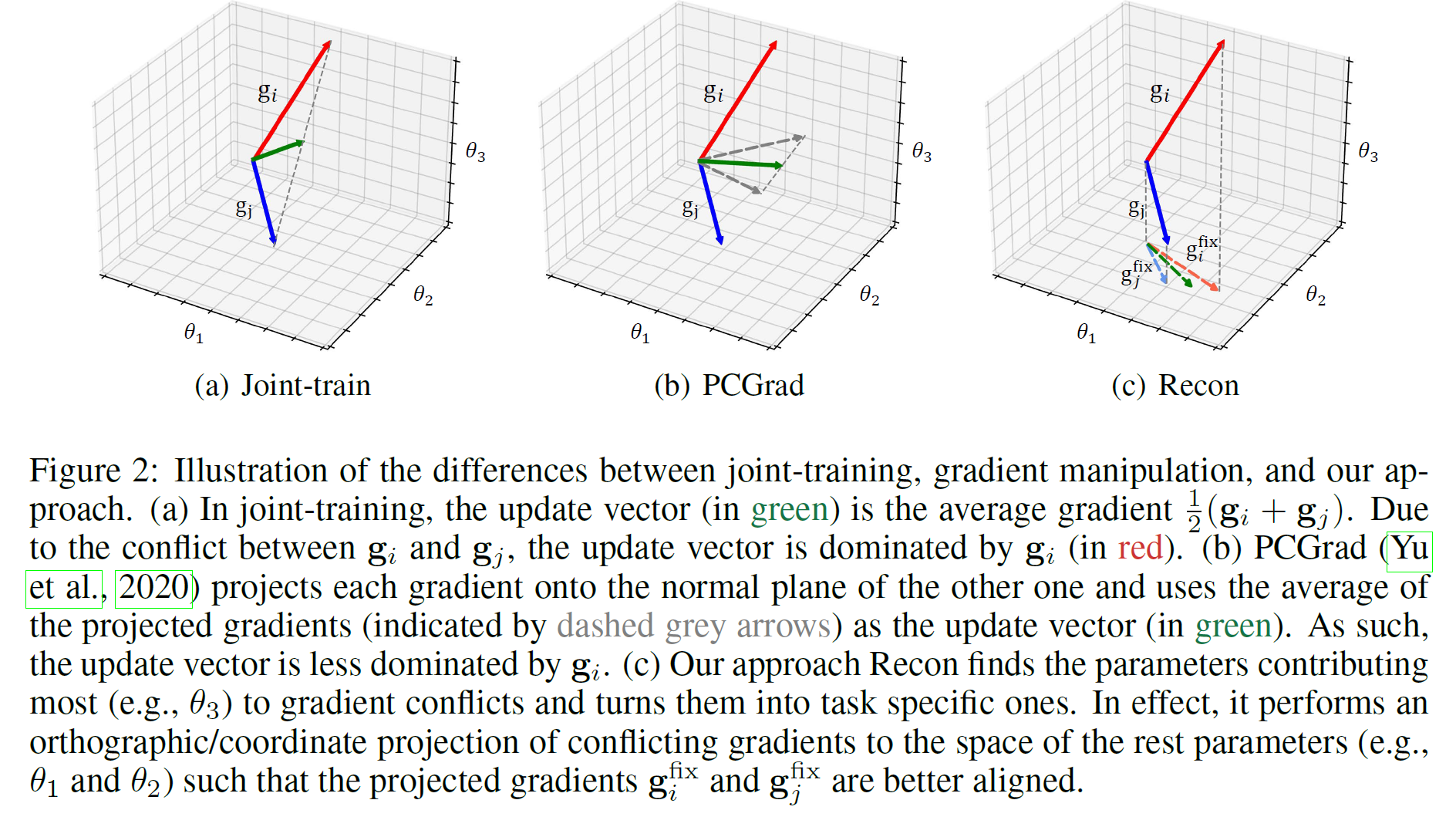

title={Recon: Reducing Conflicting Gradients from the Root for Multi-Task Learning},

author={Shi, Guangyuan and Li, Qimai and Zhang, Wenlong and Chen, Jiaxin and Wu, Xiao-Ming},

journal={arXiv preprint arXiv:2302.11289},

year={2023}

}

- ✅ 2023-04-17: Released the first version of the paper on arXiv.

- ✅ 2022-04-17: Released the first version of the code and configs for Recon (including implementations of CAGrad, PCGrad, GradDrop, and MGDA).

- ✅ 2022-04-19: Uploaded the training scripts for the single-task learning baseline.

- 🚧 (To do) Upload the training code and configs for the PASCAL-Context and CelebA datasets.

- 🚧 (To do) Upload implementations of BMTAS and RotoGrad.

- Clone the repo:

```bash
git clone https://github.com/moukamisama/FS-IL.git
```

- Install wandb (e.g., `pip install wandb`).

- Prepare the datasets: refer to the README file in the dataset folder.

- The bash scripts for all baseline models on the different datasets are in the ./exp/ folder. For example, to train CAGrad on the MultiFashion+MNIST dataset, run:

```bash
./exp/MultiFashion+MNIST/run_CAGrad.sh
```

- The bash scripts for Recon on the different datasets are also provided in the ./exp/ folder.

- For example, to train Recon on the MultiFashion+MNIST dataset, first run the following script to calculate the cosine similarity between the gradients of each pair of tasks on each shared layer:

```bash
./exp/MultiFashion+MNIST/run_Recon.sh
```
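The first step can be illustrated with a minimal pure-Python sketch, assuming each task's gradient with respect to a shared layer has already been flattened into a list of floats (in the actual PyTorch code these would come from backpropagating each task's loss separately). The helper names `cosine` and `layerwise_task_cosines` and the toy gradients are hypothetical; this is not the repository's implementation.

```python
import math
from itertools import combinations

# Hypothetical helpers for illustration only; not the repository's API.


def cosine(u, v):
    """Cosine similarity between two flattened gradient vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # a zero gradient is treated as neither aligned nor conflicting
    return dot / (norm_u * norm_v)


def layerwise_task_cosines(grads):
    """For every shared layer, compute the cosine similarity of every pair of task gradients.

    grads[task][layer] is the flattened gradient of that task's loss w.r.t. one shared layer.
    Returns {layer: {(task_i, task_j): cosine}}.
    """
    tasks = sorted(grads)
    layers = list(grads[tasks[0]])
    return {
        layer: {
            (ti, tj): cosine(grads[ti][layer], grads[tj][layer])
            for ti, tj in combinations(tasks, 2)
        }
        for layer in layers
    }


# Made-up gradients for two tasks and two shared layers.
grads = {
    "task_a": {"conv1": [1.0, 0.0], "conv2": [1.0, 1.0]},
    "task_b": {"conv1": [-1.0, 0.0], "conv2": [1.0, 1.0]},
}
sims = layerwise_task_cosines(grads)
print(sims["conv1"][("task_a", "task_b")])  # -1.0: the two tasks conflict on conv1
```

A pair with a negative cosine is a conflicting gradient on that layer; recording these values over training iterations gives the input for the next step.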

- Next, run the following script to calculate the S-conflict score of each layer and obtain the layer permutation:

```bash
./exp/MultiFashion+MNIST/calculate_Sconflict.sh
```
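The S-conflict score can be sketched as follows, assuming that a task pair "S-conflicts" on a layer when their gradient cosine similarity is below a threshold S in (-1, 0], and that a layer's score counts these events over all task pairs and recorded iterations. The threshold value, the helper names `s_conflict_scores` and `layer_permutation`, and the toy history are hypothetical.

```python
# Hypothetical helpers for illustration only; not the repository's API.


def s_conflict_scores(cosine_history, s_threshold):
    """Count, per shared layer, how often a task pair's gradient cosine falls below S.

    cosine_history: one {layer: {(task_i, task_j): cosine}} dict per recorded iteration.
    s_threshold: the threshold S, assumed to lie in (-1, 0].
    """
    scores = {}
    for snapshot in cosine_history:
        for layer, pair_cos in snapshot.items():
            hits = sum(1 for c in pair_cos.values() if c < s_threshold)
            scores[layer] = scores.get(layer, 0) + hits
    return scores


def layer_permutation(scores):
    """Order layers from most to least S-conflicting; ties keep their original order."""
    return sorted(scores, key=scores.get, reverse=True)


# Made-up cosine history for one task pair over two iterations.
history = [
    {"conv1": {("a", "b"): -0.5}, "conv2": {("a", "b"): 0.3}, "fc": {("a", "b"): -0.1}},
    {"conv1": {("a", "b"): -0.2}, "conv2": {("a", "b"): -0.05}, "fc": {("a", "b"): 0.4}},
]
scores = s_conflict_scores(history, s_threshold=-0.02)
print(scores)                     # {'conv1': 2, 'conv2': 1, 'fc': 1}
print(layer_permutation(scores))  # ['conv1', 'conv2', 'fc']
```

Normalizing the counts by the number of recorded iterations would turn them into frequencies without changing the resulting permutation.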

- Train the modified model. Pre-calculated layer permutations are provided in ./logs/, so you can skip the first two steps and directly run the following command:

```bash
./exp/MultiFashion+MNIST/run_Recon_Final.sh
```
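Assuming the modified model turns the top-K layers of the permutation into task-specific layers (each task gets its own copy while the remaining layers stay shared), the bookkeeping can be sketched on plain Python objects. Here `top_k` plays the role of the topK hyperparameter; the helper `split_top_k` and the toy layers are hypothetical, and with PyTorch one would copy `nn.Module`s rather than lists.

```python
import copy

# Hypothetical helper for illustration only; not the repository's API.


def split_top_k(shared_layers, permutation, tasks, top_k):
    """Make the top_k most conflicting layers task-specific; keep the rest shared.

    shared_layers: {layer_name: params}, where params is any deep-copyable object.
    permutation:   layer names ordered from most to least conflicting.
    Returns (still_shared, task_specific) with task_specific[task][layer] a private copy.
    """
    chosen = permutation[:top_k]
    still_shared = {name: p for name, p in shared_layers.items() if name not in chosen}
    # Every task receives an independent copy of each chosen layer.
    task_specific = {
        task: {name: copy.deepcopy(shared_layers[name]) for name in chosen}
        for task in tasks
    }
    return still_shared, task_specific


shared = {"conv1": [0.1, 0.2], "conv2": [0.3], "fc": [0.4]}  # made-up parameters
still_shared, task_specific = split_top_k(
    shared, ["conv1", "conv2", "fc"], tasks=["a", "b"], top_k=1
)
print(sorted(still_shared))        # ['conv2', 'fc']
print(sorted(task_specific["a"]))  # ['conv1']
```

Because each task owns its copy of a chosen layer, updates from one task's loss to that layer no longer interfere with the other tasks; in this sketch the copies simply start from the shared values.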

- Evaluation results can be found in the logger output or on wandb. In the paper, we repeat each experiment with 3 different random seeds per dataset and report the average results at the last iteration.
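Averaging the last-iteration results over seeds is simple arithmetic. A minimal sketch, assuming each run's log has been exported as a list of per-iteration metric dicts; the helper name, metric name, and numbers are hypothetical placeholders, not results from the paper.

```python
from statistics import mean

# Hypothetical helper for illustration only; not the repository's API.


def average_last_iteration(runs):
    """runs: {seed: [{metric: value}, ...]} with one dict per logged iteration.

    Returns {metric: mean over seeds of the value logged at the last iteration}.
    """
    finals = [history[-1] for history in runs.values()]
    return {metric: mean(f[metric] for f in finals) for metric in finals[0]}


# Placeholder logs for 3 seeds (not real results).
runs = {
    0: [{"acc_task1": 0.80}, {"acc_task1": 0.85}],
    1: [{"acc_task1": 0.81}, {"acc_task1": 0.86}],
    2: [{"acc_task1": 0.82}, {"acc_task1": 0.87}],
}
print(average_last_iteration(runs))
```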

- Our modified model can easily be applied to other datasets. The layer permutations we obtained can sometimes be used directly on other datasets, but tuning the hyperparameters (e.g., topK in the third step) on each dataset can lead to better performance.

- Generating a specific modified model for each dataset, rather than reusing one obtained on another dataset, leads to better performance.