[FT][ERROR] CUDA runtime error: operation not supported #20

Comments


This looks similar to #14 – the T4 is not that old (although you will have trouble running some of the models with only 8 GB of RAM), and the compute capability is listed as 7.5, so I'm surprised it doesn't work. Could you try upgrading the version of CUDA to something more recent?

I have already tried CUDA 10.0, 11.0, 11.2, and 11.7 on the host machine. None of them works.


Did that include upgrading the NVIDIA driver? 450.102.04 seems to be fairly old (it came out in January 2021).

The source contents of ./utils/allocator.h:181 are as follows.

    virtual ~Allocator()
    {
        FT_LOG_DEBUG(__PRETTY_FUNCTION__);
        while (!pointer_mapping_->empty()) {
            free((void**)(&pointer_mapping_->begin()->second.first));
        }
        delete pointer_mapping_;
    }

In other words, the error seems to occur because

Therefore, I interpret this issue as follows: You had 6.56 GB of free space out of the total of 8 GB before running the model of your choice.
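To put the 6.56 GB figure in context, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It assumes 2 bytes per parameter (fp16), takes the nominal parameter counts from the model names (350M and 6B), and reads "GB" as decimal gigabytes; none of these are confirmed in this thread, and the helper names are hypothetical. Activations, KV cache, and CUDA context overhead are ignored, so passing this check is necessary but not sufficient.

```cpp
#include <cstdint>

// Hypothetical helpers for a rough estimate; not part of fauxpilot or FasterTransformer.

// Bytes needed for the weights alone: parameter count times bytes per parameter.
constexpr std::uint64_t weightBytes(std::uint64_t parameterCount,
                                    std::uint64_t bytesPerParameter)
{
    return parameterCount * bytesPerParameter;
}

// True when the weights alone fit into the given amount of free memory.
constexpr bool weightsFit(std::uint64_t parameterCount,
                          std::uint64_t bytesPerParameter,
                          std::uint64_t freeBytes)
{
    return weightBytes(parameterCount, bytesPerParameter) <= freeBytes;
}

// 6.56 GB free, as reported above, read as decimal gigabytes (assumption).
constexpr std::uint64_t kFreeBytes = 6560000000ULL;

// Assumed fp16 storage: 2 bytes per parameter.
constexpr std::uint64_t kBytesPerParameter = 2;
```

Under these assumptions, a 350M-parameter model needs about 0.7 GB for weights and fits, while a 6B-parameter model needs about 12 GB and does not.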

Indeed, I think the code that causes this issue is https://github.com/NVIDIA/FasterTransformer/blob/a44c38134cefe17a81c269b6ec23d91cfe4e7216/src/fastertransformer/utils/allocator.h#L181

My guess is that some older GPUs, like the Tesla M60, don't support async cudaMalloc/Free even with a CUDA version higher than 11.2. Unfortunately, FasterTransformer doesn't account for this. The evidence is that ./launch.sh does not print a log message like the one at https://github.com/NVIDIA/FasterTransformer/blob/a44c38134cefe17a81c269b6ec23d91cfe4e7216/src/fastertransformer/utils/allocator.h#L126 or https://github.com/NVIDIA/FasterTransformer/blob/f73a2cf66fb6bb4595277d0d029ac27601dd664c/src/fastertransformer/utils/allocator.h#L149 .

As for @karlind: you changed the CUDA version to 11.0 on the host machine, but inside the triton_with_ft container it is still CUDA 11.7. So I think we could downgrade the CUDA version in the triton_with_ft Docker image to 11.1 to avoid this issue, and wait for FasterTransformer to fix it.

I don't know how to rebuild the triton_with_ft image yet, so I can't test this. Can anyone help with some suggestions? @moyix @leemgs
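The fallback being asked for could look roughly like the sketch below. This is not FasterTransformer's actual code: `AllocMode` and `chooseAllocMode` are hypothetical names, and the decision is written as plain C++ so it compiles without a GPU. In a real build, the two inputs would come from `cudaRuntimeGetVersion()` and from `cudaDeviceGetAttribute()` with `cudaDevAttrMemoryPoolsSupported`, an attribute that only exists from CUDA 11.2 onward.

```cpp
// Hypothetical sketch; not taken from FasterTransformer.
enum class AllocMode { Sync, Async };

// CUDA encodes versions as 1000 * major + 10 * minor, so 11.2 is 11020.
// Stream-ordered allocation (cudaMallocAsync/cudaFreeAsync) arrived in 11.2.
constexpr int kFirstAsyncRuntime = 11020;

// runtimeVersion:       value reported by cudaRuntimeGetVersion().
// memoryPoolsSupported: value of cudaDevAttrMemoryPoolsSupported as returned
//                       by cudaDeviceGetAttribute(); non-zero means supported.
constexpr AllocMode chooseAllocMode(int runtimeVersion, int memoryPoolsSupported)
{
    // Either condition failing means the async path cannot be used,
    // so fall back to plain cudaMalloc/cudaFree.
    return (runtimeVersion >= kFirstAsyncRuntime && memoryPoolsSupported != 0)
               ? AllocMode::Async
               : AllocMode::Sync;
}
```

With a check like this, a CUDA 11.7 container on a device that reports no memory pool support would take the sync path instead of failing, which is the behavior the compile-time CUDA version check alone cannot provide.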


I rebuilt the triton_with_ft image by changing the FT code as described in NVIDIA/FasterTransformer#263 (comment).

Already solved.


Sorry, I forgot to check the GPU compute capability. It's impossible to run FT on the M60, which has a compute capability of 5.2. I still think my method will work for the T4, though. BTW, can I run fauxpilot on a P40?

Could you share the output of deviceQuery (e.g., the CC, Compute Capability)? Also, please refer to https://github.com/moyix/fauxpilot/wiki/GPU-Support-Matrix
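For comparing a deviceQuery result against a required compute capability, a minimal sketch follows. The struct and function names are hypothetical, and the 6.0 threshold is only an assumption chosen to be consistent with this thread (5.2 on the M60 does not work, 7.5 on the T4 should); the GPU support matrix linked above is the authoritative source. The P40 is compute capability 6.1, a figure that is not stated in the thread.

```cpp
// Hypothetical helper; field names avoid `major`/`minor`, which some
// system headers define as macros. cudaDeviceProp exposes the same
// two numbers as its `major` and `minor` members.
struct ComputeCapability {
    int ccMajor;
    int ccMinor;
};

// True when `have` is the same as or newer than `need`.
constexpr bool atLeast(ComputeCapability have, ComputeCapability need)
{
    return have.ccMajor > need.ccMajor ||
           (have.ccMajor == need.ccMajor && have.ccMinor >= need.ccMinor);
}

// Assumed minimum for illustration only; check the support matrix.
constexpr ComputeCapability kAssumedMinimum{6, 0};
```

For example, `atLeast(ComputeCapability{5, 2}, kAssumedMinimum)` is false for the M60, while the same call with `{7, 5}` (T4) or `{6, 1}` (P40) is true.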


@leemgs I tested fauxpilot on my Tesla P40 using the main branch, and it works well (a little bit slow). I'm happy that I can use the 6B model on it.

Congrats. I think that a major reason is the video memory capacity of your GPU on

Have you solved this problem, and if so, how did you do it? Thanks. My GPU is a T4-8C; can I load the codegen-350M-mono model and run a server?

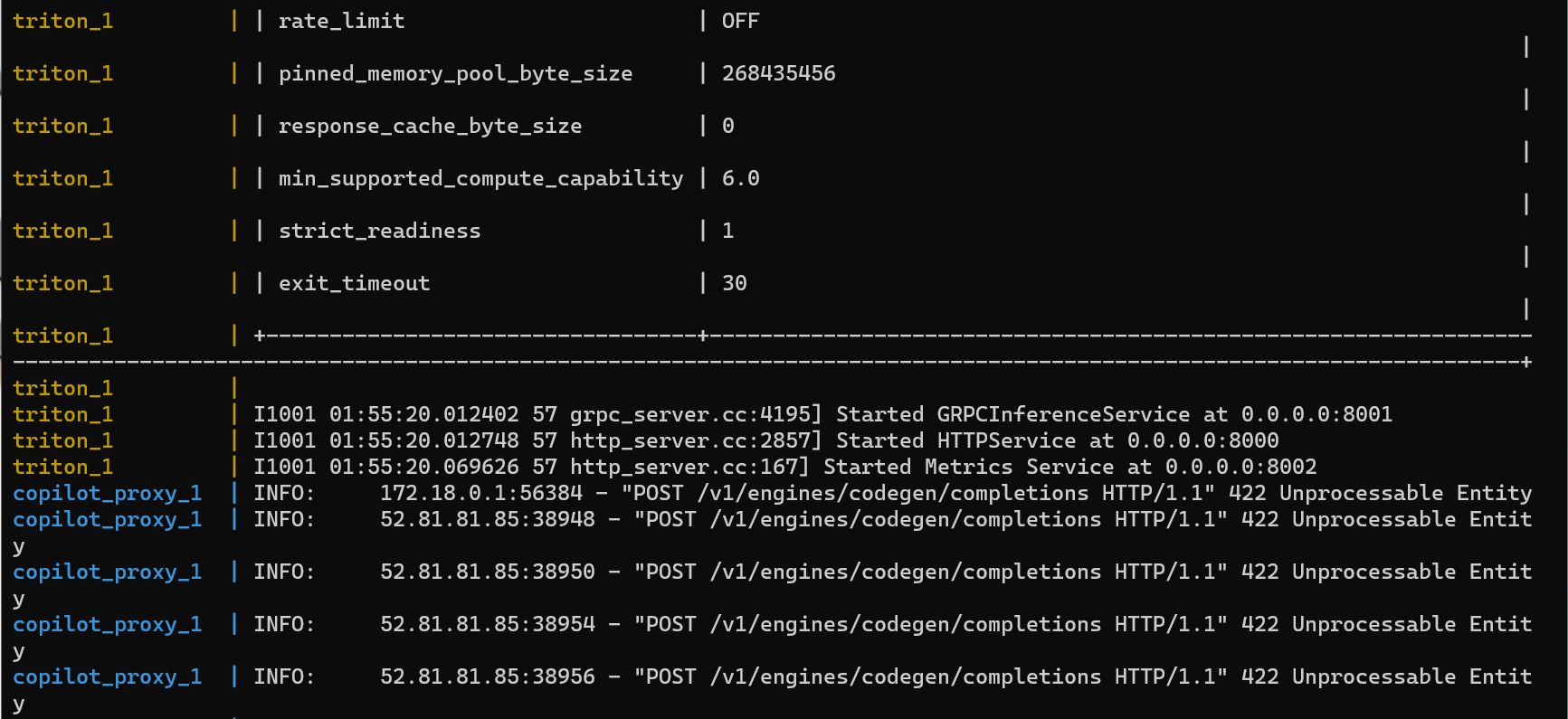

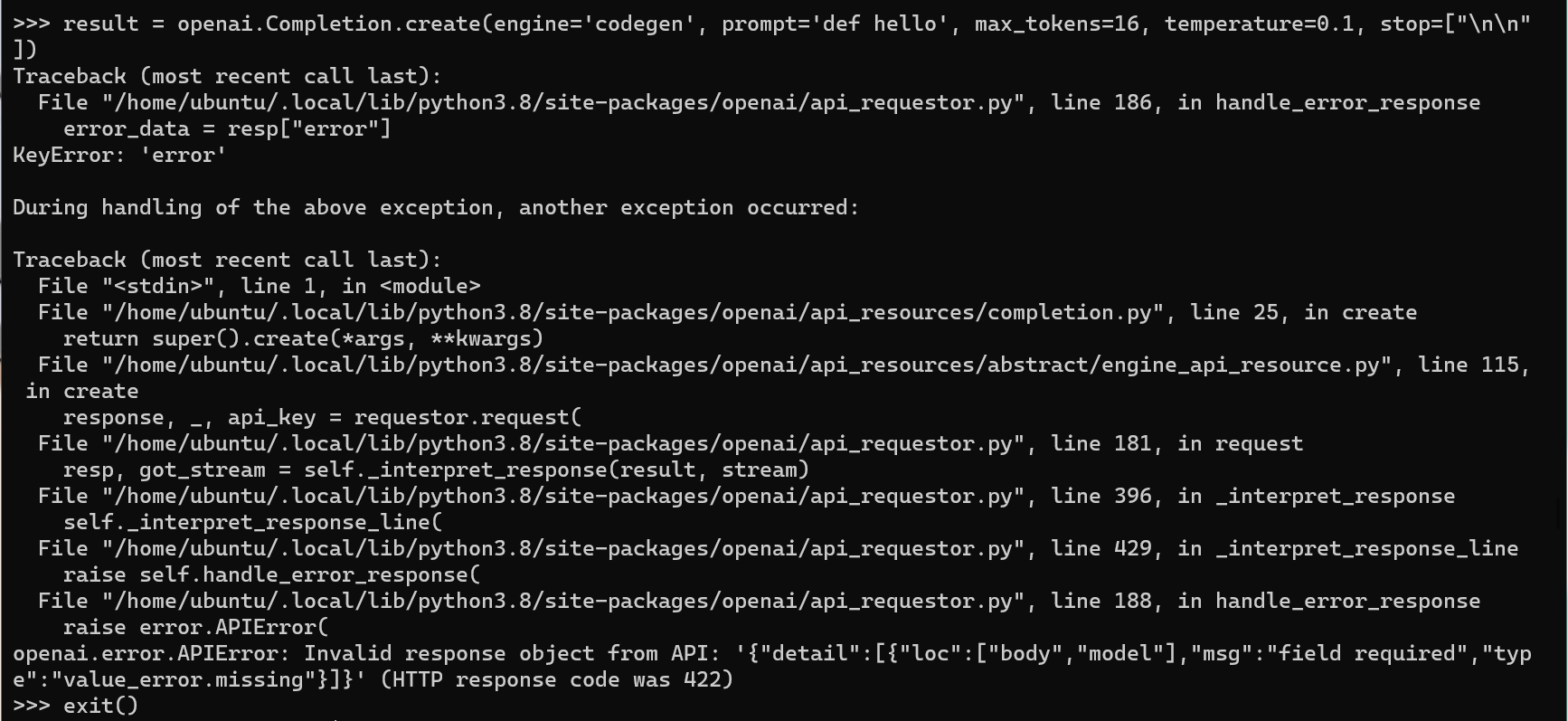

I am using a T4 GPU; the host machine's CUDA version is 11.0 and the driver is 450.102.04. When running launch.sh, I got this error.

Detail log:
