This package provides a Chainer implementation of Deep Q-Network(DQN) described in the following papers:

- Playing Atari with Deep Reinforcement Learning

- Human-level control through deep reinforcement learning

この記事で実装したコードです。

- Arcade Learning Environment(ALE)

- RL-Glue

- PL-Glue Python codec

- Atari 2600 VCS ROM Collection

- Chainer 1.6+

- Seaborn

- Pandas

環境構築に関しては DQN-chainerリポジトリを動かすだけ が参考になります。

e.g. Atari Breakout

Open 4 terminal windows and run the following commands on each terminal:

Terminal #1

rl_glue

Terminal #2

cd path_to_deep-q-network

python experiment.py --csv_dir breakout/csv --plot_dir breakout/plot

Terminal #3

cd path_to_deep-q-network/breakout

python train.py

Terminal #4

cd /home/your_name/ALE

./ale -game_controller rlglue -use_starting_actions true -random_seed time -display_screen true -frame_skip 4 -send_rgb true /path_to_rom/breakout.bin

実験に用いたコンピュータのスペックは以下の通りです。

| OS | Ubuntu 14.04 LTS |

| CPU | Core i7 |

| RAM | 16GB |

| GPU | GTX 970M 6GB |

We extract the luminance from the RGB frame and rescale it to 84x84.

Then we stack the 4 most recent frames to produce the input to the DQN.

e.g.

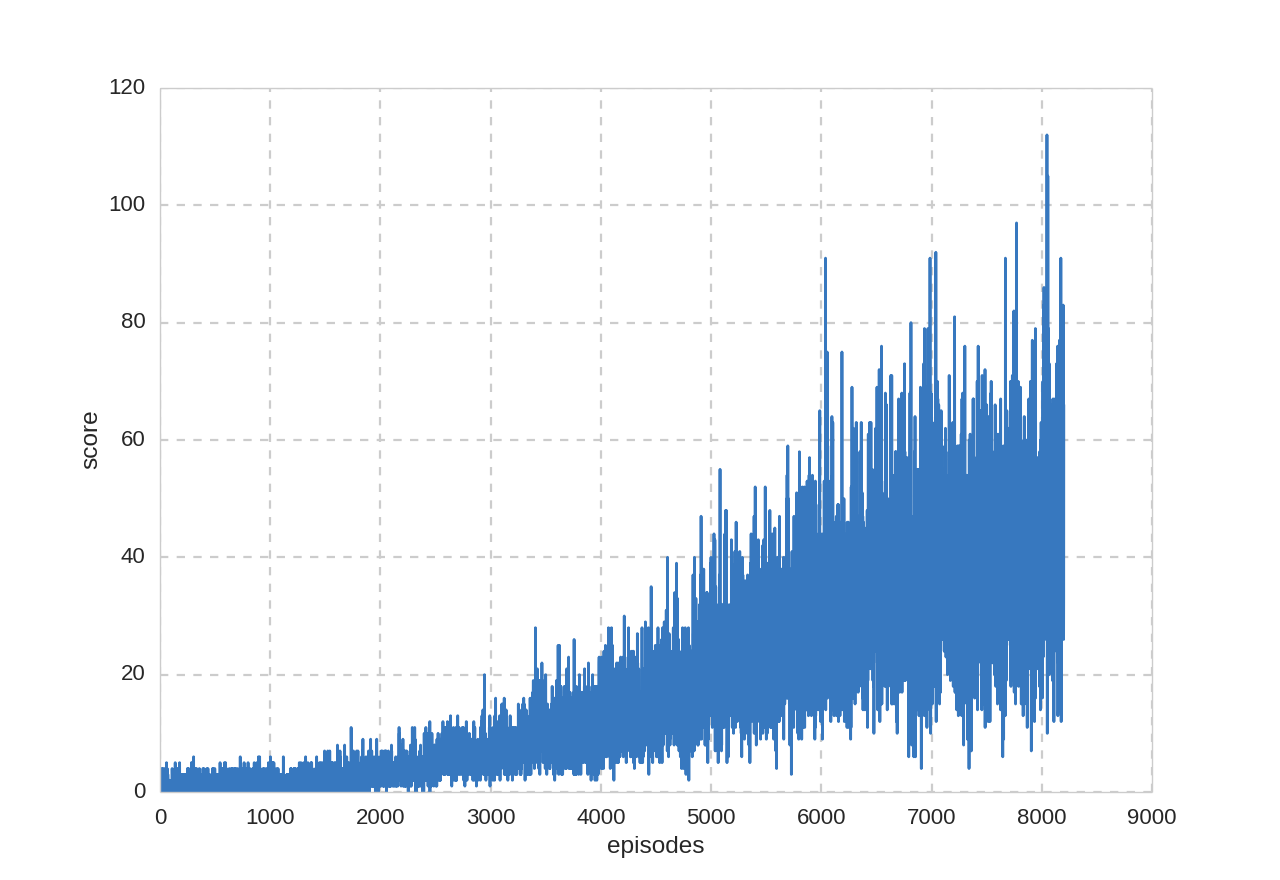

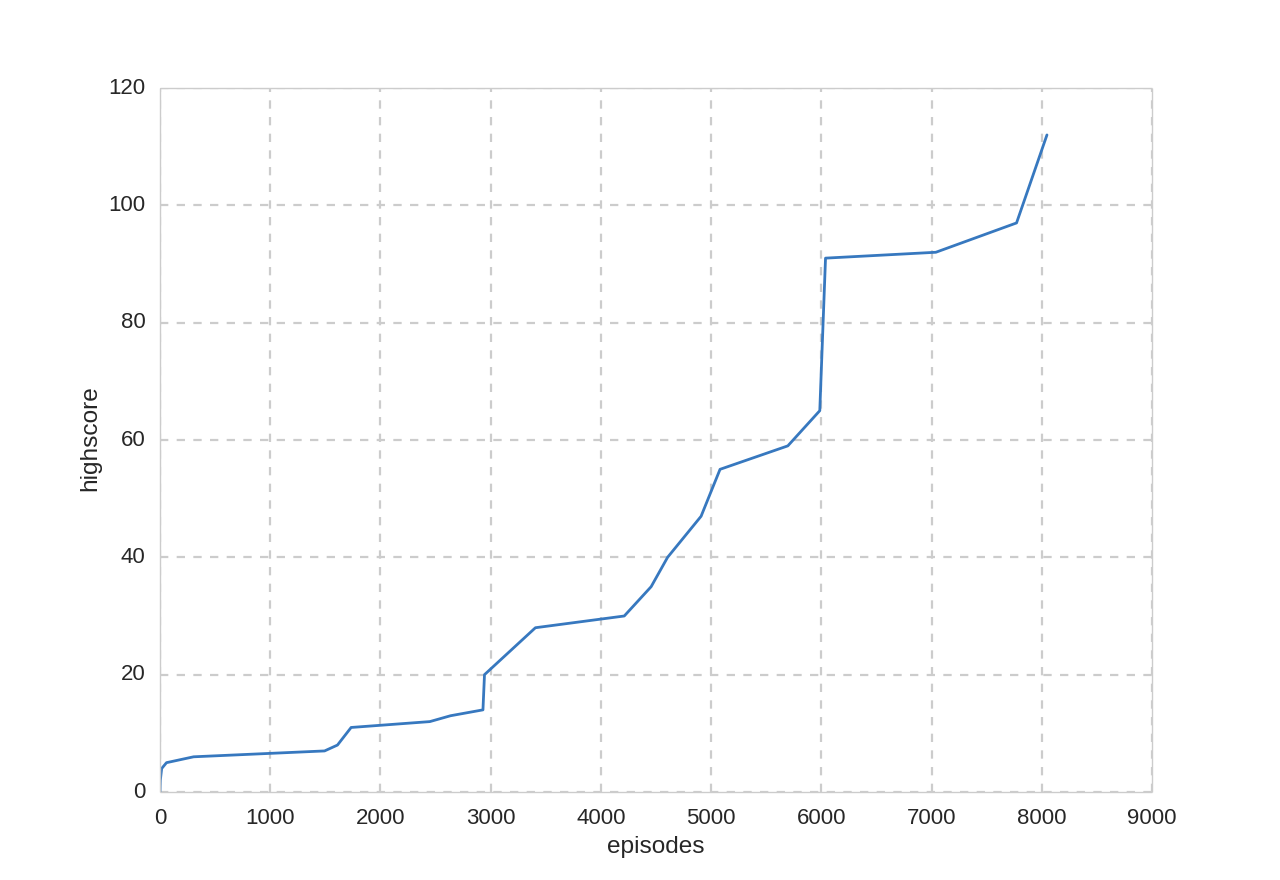

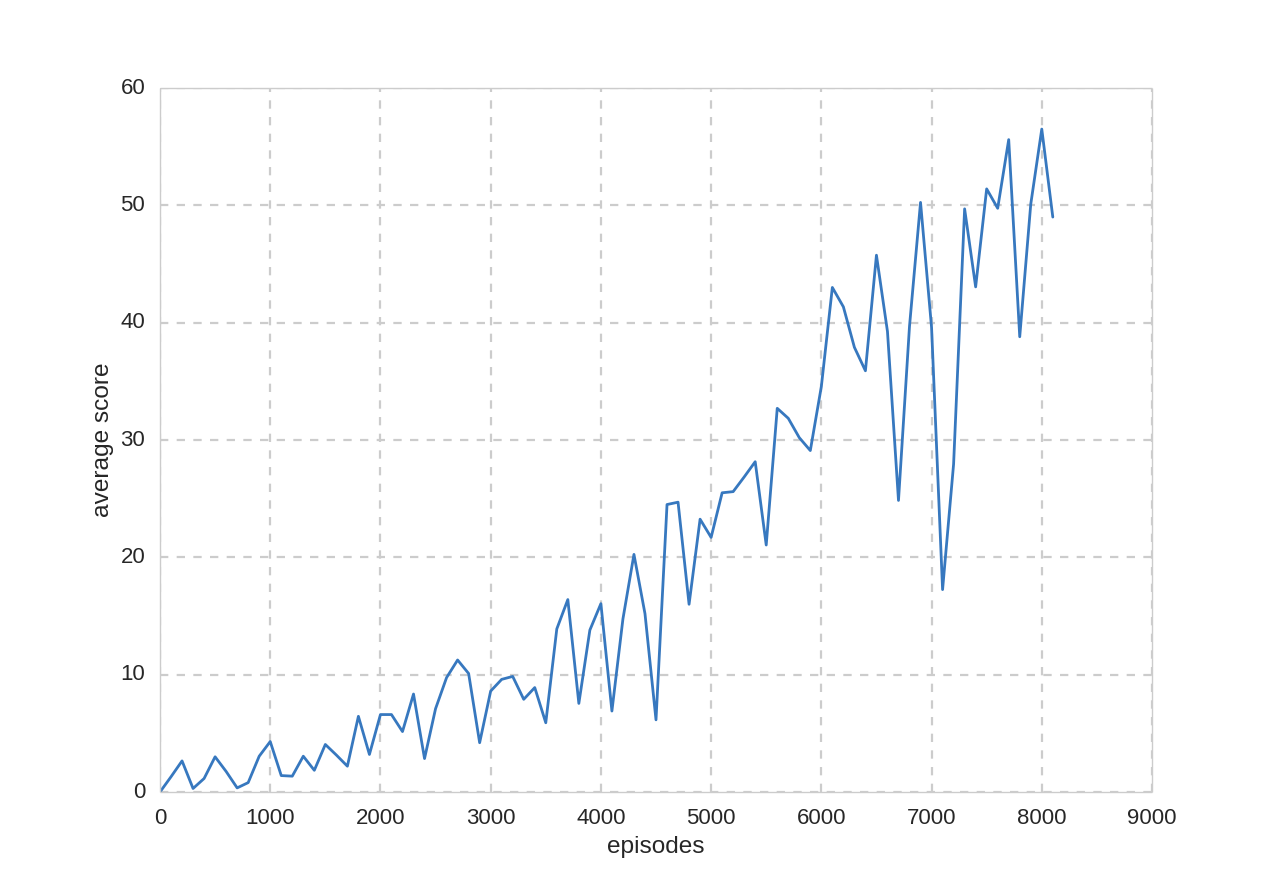

We trained DQN for a total of 42 hours (8200 episodes, 93 epochs, 4670K frames).

本当は10000プレイさせたかったのですが突然コンピュータがシャットダウンしてしまったため中途半端な結果になってしましました。

We trained DQN for a total of 50 hours (1500 episodes, 96 epochs, 4849K frames).