New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

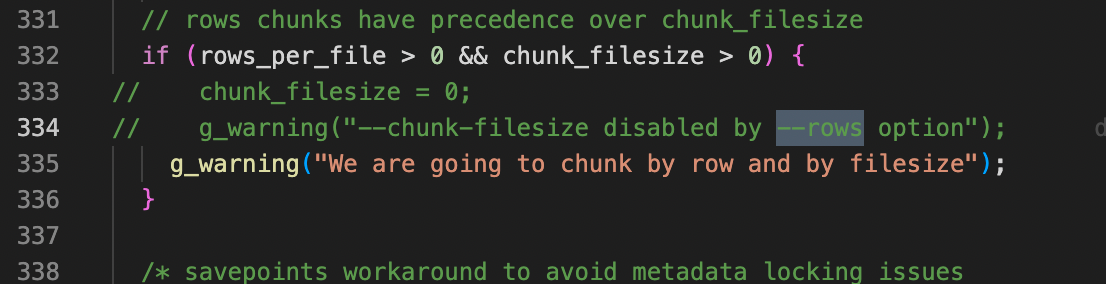

The problem of using --rows and --chunk-filesize at the same time #738

Comments

Hi @ronylei,

Hi @davidducos, my understanding is that when --rows=500000 and --chunk-filesize=1 are used at the same time, --rows should take precedence. Why is the file size 2M?

Hi @ronylei, can you compile from master and test again?

There is no problem when using the following version

3. Start backup
