perf: ModuleTokenFactory #10844

Comments

Quick answer is - yes. Thanks for investigating this issue though. |

|

This adds substantial overhead to applications with more than 20 modules, to the point where it should be considered an issue. In our app with ~30 modules, this adds ~12 seconds of extra cold boot latency... |

|

I appreciate the effort on this. I have a project (nestjs v9) with 37 modules and Not sure why tho. I was not able to start the app after changing |

|

Perhaps there's room for optimizations in the object-hash library? I just saw an issue created by @H4ad with some great ideas on how to make things somewhat better |

|

@kamilmysliwiec Yes, there are a lot of things that I could improve in Optimizing |

|

@H4ad I did just that, take a look. I just can't run the tests locally, they fail with missing typings from axios |

|

Hi everyone, just an update on this topic. I made the updates that I described in my last comment, and in the performance tests I didn't see any improvement. In fact, I saw a performance degradation in real projects, except in the project initially used for testing (a project without typeorm).

I still believe that @pocesar's solution won't fit, but it actually works for him. I talked to @micalevisk and he suggests that maybe the behavior of

I did some research in the Angular codebase to see how they do this kind of injection, and from what I've seen they do this heavy work at compile time, which I don't think is an option for us because we don't have control over the build configuration.

One option that I thought might work is a helper that makes dynamic modules unique, so we don't need to perform any serialization: the user guarantees that the module is unique for a given ID. I did some experiments, it worked, and it reduced startup time a little. I'll continue my research, and as soon as I find something, I'll come back and share it with you.
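To make the "helper" idea above more concrete, here is a minimal sketch. It is not the code from the experiments mentioned in this comment: `DynamicModuleLike`, `withUid`, and `createToken` are hypothetical names, and the `JSON.stringify` call only stands in for the real (expensive) serialization path.

```typescript
// Hypothetical, simplified shape of a dynamic module (not the NestJS type).
interface DynamicModuleLike {
  module: new (...args: unknown[]) => unknown;
  providers?: unknown[];
  uid?: string; // assumption: an optional user-supplied unique ID
}

// Hypothetical helper: the caller promises that `uid` identifies this
// particular module configuration.
function withUid<T extends DynamicModuleLike>(
  uid: string,
  dynamicModule: T,
): T & { uid: string } {
  return { ...dynamicModule, uid };
}

// Hypothetical token function: if a uid is present, skip serialization.
function createToken(dynamicModule: DynamicModuleLike): string {
  if (dynamicModule.uid !== undefined) {
    return `${dynamicModule.module.name}:${dynamicModule.uid}`;
  }
  // Placeholder for the slow path that serializes the metadata.
  return `${dynamicModule.module.name}:${JSON.stringify(dynamicModule.providers ?? [])}`;
}

class DatabaseModule {}

const primaryDb = withUid("db-primary", {
  module: DatabaseModule,
  providers: [{ provide: "DB_URL", useValue: "postgres://primary" }],
});

const primaryToken = createToken(primaryDb);
```

With this shape the token depends only on the class name and the ID the user chose, so the cost no longer grows with the size of the module metadata. The trade-off is that uniqueness becomes the user's responsibility.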

|

|

@H4ad this happens when running on Cloud Run on GCP. Cloud Run overhead in an empty project is around 5-6s, so 14s is only NestJS doing serialization. I used clinic flame to see that the biggest culprit is the |

|

Hey @kamilmysliwiec, I did some experiments and I found two possible solutions for this problem.

Module Token

Based on the last assumption of making dynamic modules unique by using a UID, I created a proof of concept that works in my private API; see the commit with the changes. I introduce nest/packages/core/injector/module-token-factory.ts Lines 38 to 39 in f051833

To use this new behavior, you just wrap your module with

I also added a faster path for when the module is static: nest/packages/core/injector/module-token-factory.ts Lines 54 to 64 in f051833

Basically, I skip what

All these changes were only possible because I changed these lines:

Basically, I stopped creating a new instance of the object and just use what the user sends to us. Without this modification, my class

But with this final change, I realized that we could have a better option.

Using object reference as an ID

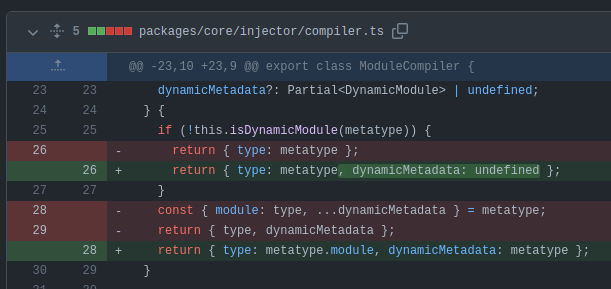

First, why do we need to use

From what I discovered, it's because we associate a UID with each module to make it easier to know which service or other injectable belongs to which module. The problem is that, with dynamic modules, we don't know exactly how to generate an ID for the module, because it is not simple to distinguish between two dynamic modules. But why is it not simple? Basically, the answer I found is in these lines: nest/packages/core/injector/compiler.ts Lines 28 to 29 in 0e0e24b

In those lines, we perform a shallow clone of the dynamic metadata, so we lose the ability to distinguish between two dynamic modules: every time the same object is sent to this function, another object with a different reference is created. The main point of having a UID in the DynamicModule was to solve this issue. To fix it, we just need to change the code to: `return { type: metatype.module, dynamicMetadata: metatype }`

With that problem fixed, generating the ID was as simple as: nest/packages/core/injector/module-token-factory.ts Lines 20 to 31 in 5069858
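The embedded snippets are not reproduced on this page, so the following is only an illustrative sketch of the reference-versus-clone point, not the code from the linked commits. `DynamicModuleLike`, `extractWithClone`, `extractByReference`, and `ReferenceTokenFactory` are hypothetical names, and keying a `WeakMap` by object reference is one assumed way to turn a reference into an ID.

```typescript
// Hypothetical, simplified shape of a dynamic module (not the NestJS type).
interface DynamicModuleLike {
  module: new (...args: unknown[]) => unknown;
  providers?: unknown[];
}

interface ExtractedMetadata {
  type: DynamicModuleLike["module"];
  dynamicMetadata: object;
}

// Assumed form of the shallow clone: a new object is created on every call.
function extractWithClone(metatype: DynamicModuleLike): ExtractedMetadata {
  const { module: type, ...dynamicMetadata } = metatype;
  return { type, dynamicMetadata };
}

// The change proposed in the comment: pass the user's object through as-is.
function extractByReference(metatype: DynamicModuleLike): ExtractedMetadata {
  return { type: metatype.module, dynamicMetadata: metatype };
}

// Hypothetical factory: the same object reference always gets the same token.
class ReferenceTokenFactory {
  private readonly tokens = new WeakMap<object, string>();
  private nextId = 0;

  create(key: object): string {
    let token = this.tokens.get(key);
    if (token === undefined) {
      token = `module-${this.nextId++}`;
      this.tokens.set(key, token);
    }
    return token;
  }
}

class ConfigModule {}
const configModule: DynamicModuleLike = { module: ConfigModule, providers: [] };
const factory = new ReferenceTokenFactory();

// Same reference both times, so the token is reused.
const byRefA = factory.create(extractByReference(configModule).dynamicMetadata);
const byRefB = factory.create(extractByReference(configModule).dynamicMetadata);

// Each clone is a fresh object, so each call produces a new token.
const clonedA = factory.create(extractWithClone(configModule).dynamicMetadata);
const clonedB = factory.create(extractWithClone(configModule).dynamicMetadata);
```

Note that in this sketch the token values come from a counter, so they depend on the order in which modules are registered rather than on the module's content.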

Basically, I create a

I also keep the faster path when

Using this approach, we eliminate the usage of

The only drawback of this approach is that two dynamic modules with the same name and properties will be evaluated twice; in the old version, NestJS identified them as the same and returned the same ID for both modules. To be honest, I think this is a user problem rather than something the framework should worry about.

Summary

Both options should be heavily tested, so here are some instructions on how you can test them in your own private API.

First option

To test the first option, you should override three files and create one file in the root:

Then, just build your API and run with

Second option

To test the second option, you should override two files and create one file in the root:

Then, just build your API and run with |

|

I tested the second option in a nestjs v8 app with 67 [eager] modules (and a bunch of circular imports) but didn't get any relevant improvement :/ But if that means nestjs won't have those two dependencies anymore, then it sounds good. |

Well not exactly. Since you're using |

|

I don't know if it's possible, but a last resort would be to use Rust and serde to serialize those big objects and do all the calculations in optimized binary code. The only issue is that it would require a build step just for this |

|

@kamilmysliwiec So, the second option will not be possible to back-port (if back-porting is something that you want). In that case, what about just releasing it as a breaking change in the next NestJS version? As for the first option, we could release it as a feature in the current version because it does not break compatibility. What do you think?

Your line of thinking is similar to what Angular does, but the main problem is that modules can be dynamic, so it's not possible to move the initialization of all modules into the build step: that information could be different for each initialization of the API. Also, I don't think moving the computation to Rust will bring much of a speed improvement; I already tried putting the |

|

@H4ad the idea is for getting a stable hash from an object declaration, it doesn't really matter if it's dynamic or not, since it's only for hashing. WASM is very good at hashing, but the same can be done using native addons as well. |

|

@pocesar We can achieve this same behavior with

But nice to know; I will keep WASM in mind. It could be useful for large strings, but for the size of the strings that NestJS generates, the overhead of sending the information is greater than the benefits. |

|

Build-time optimizations are off the table (anything that involves pre-processing TS, including NestJS CLI plugins, proved to be rather unstable and hard to maintain).

@H4ad I'm OK with breaking changes in this case BUT we still need to generate a consistent hash per each module and this hash shouldn't change each time the application is run (it should be identical). This is required for some other features that I'm currently working on |
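To illustrate the requirement of a token that is identical on every run, here is a minimal sketch. It is not the NestJS implementation: `stableStringify` and `createStableToken` are hypothetical helpers, SHA-256 from Node's `crypto` module is an assumed choice of hash, and the serializer does not handle circular references.

```typescript
import { createHash } from "node:crypto";

// Hypothetical serializer: sorts object keys so that property order does
// not affect the output. Circular references are NOT handled.
function stableStringify(value: unknown): string {
  if (typeof value === "function") {
    // Represent classes and functions by name only.
    return `[Function ${value.name}]`;
  }
  if (Array.isArray(value)) {
    return `[${value.map(stableStringify).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const record = value as Record<string, unknown>;
    const entries = Object.keys(record)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${stableStringify(record[key])}`);
    return `{${entries.join(",")}}`;
  }
  return typeof value === "string" ? JSON.stringify(value) : String(value);
}

// Hypothetical token function: the same name and metadata always produce
// the same hex digest, in this process and in any later run.
function createStableToken(
  moduleName: string,
  metadata?: Record<string, unknown>,
): string {
  return createHash("sha256")
    .update(stableStringify({ moduleName, metadata }))
    .digest("hex");
}

const tokenA = createStableToken("CacheModule", { ttl: 60, store: "memory" });
const tokenB = createStableToken("CacheModule", { store: "memory", ttl: 60 });
const tokenC = createStableToken("CacheModule", { ttl: 120, store: "memory" });
```

Here `tokenA` and `tokenB` match because only the key order differs, while `tokenC` differs because the metadata differs. A random token or a reference-based counter cannot give this guarantee, which is the tension discussed in this thread.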

|

Based on kamil's requirements, I pushed some optimizations for

For now, I don't think it will solve @pocesar's problem, but it will improve boot speed a bit. Also, based on the requirements, I don't think it's possible to solve the

But I hope what we found in this issue can guide someone to a better version in the future. Thank you all for your patience and help with this issue. |

Is there an existing issue that is already proposing this?

NestJS version

9.2.1

Is your performance suggestion related to a problem? Please describe it

I want to improve the initialization time of NestJS, so I started to do some profiling in `NestFactory#create`.

Today, the most expensive calls in the initialization are:

`*_object` and `*_string` are part of the `object-hash` library, used inside this line:

nest/packages/core/injector/module-token-factory.ts

Lines 15 to 21 in 95e096f

Describe the performance enhancement you are proposing and how we can try it out

I didn't understand why you need to have the same token for the same metadata, so as a test I commented out all those lines and just returned a random token from `random-string-generator`:

Then I ran the tests, and they passed!
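The snippet used for this experiment is not reproduced on this page. As a rough sketch of the idea only, the following uses `randomUUID` from Node's `crypto` module as a stand-in for `random-string-generator`; `RandomModuleTokenFactory` and its signature are hypothetical and do not mirror the real `ModuleTokenFactory`.

```typescript
import { randomUUID } from "node:crypto";

// Hypothetical stand-in for a module class reference.
type ModuleType = new (...args: unknown[]) => unknown;

// Hypothetical simplified factory: it ignores its inputs entirely and
// returns a fresh random token on every call, so nothing is serialized.
class RandomModuleTokenFactory {
  create(
    _metatype: ModuleType,
    _dynamicMetadata?: Record<string, unknown>,
  ): string {
    return randomUUID();
  }
}

class UsersModule {}

const randomFactory = new RandomModuleTokenFactory();
const firstToken = randomFactory.create(UsersModule);
const secondToken = randomFactory.create(UsersModule);
```

The cost is constant regardless of metadata size, but `firstToken` and `secondToken` differ even though the input is identical, which is exactly the property the question below asks about.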

Benchmarks result or another proof (eg: POC)

About the improvements, we drop from:

To:

This represents a drop of 18019 CPU ticks, reflecting a reduction of 4.2 ms (from 29.65 ms to 25.45 ms) in the initialization, according to the CPU profile.

So my question is: do we really need that metadata to be serialized in the same hash every time or could we do this optimization?

If the answer is that we don't need it, I can open a pull request. Along with #10825 and the other PRs I opened, we could see a lot of startup time improvements, not just in the `#create` method, but also in `#createApplicationContext` and `createMicroservice`, because `ModuleTokenFactory` is used by `NestContainer`, which is used in all these methods.