As a student delving into the world of data science and machine learning, one of the fundamental concepts you need to grasp is the variance-bias tradeoff. This concept serves as a cornerstone in building accurate and adaptable predictive models. Let's embark on a comprehensive journey through the variance-bias tradeoff, enriched with detailed explanations and illustrative diagrams.

1. Introduction: Bias and Variance

At the heart of the variance-bias tradeoff are two critical concepts: bias and variance.

-

Bias: Imagine a target you're trying to hit with darts. Bias is akin to consistently missing the target by a fixed amount. In machine learning terms, high bias occurs when your model oversimplifies the problem, disregarding nuances in the data and leading to systematic errors.

-

Variance: On the other hand, variance reflects how your model's performance fluctuates based on different training datasets. High variance models are like aiming for the target with erratic throws, resulting in inconsistent hits. These models capture noise and fluctuations in the training data, performing well on the training set but poorly on unseen data.

2. The Tradeoff Explained

The essence of the variance-bias tradeoff lies in the interplay between these two elements. It's crucial to strike a balance between bias and variance to develop models that generalize effectively. Here's a closer look at how this tradeoff works:

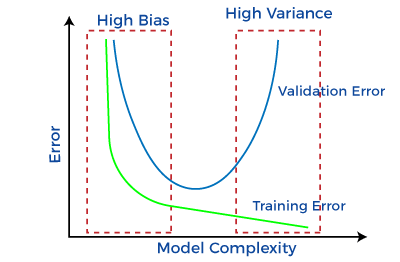

As you can see in the diagram:

-

On the left side of the curve, where model complexity is low, bias is high but variance is low. These models are overly simplistic and fail to capture intricate patterns in the data. This phenomenon is called underfitting.

-

On the right side, with high model complexity, bias is low but variance is high. These models fit the training data extremely well, sometimes even memorizing it. However, they struggle to generalize to new data, leading to overfitting.

3. Finding the Sweet Spot

The key challenge is to find the model complexity that minimizes both bias and variance, striking the optimal balance. This complexity depends on the nature of the problem and the available data.

In this diagram:

- The optimal point on the curve represents the sweet spot, where the total error is minimized. It's the point where the model generalizes effectively without fitting noise.

4. Practical Strategies

To navigate the variance-bias tradeoff effectively, consider these practical strategies:

-

Regularization: Techniques like L1 and L2 regularization add penalties to overly complex models, restraining their flexibility and curbing variance.

-

Cross-Validation: Use techniques like k-fold cross-validation to estimate a model's performance on unseen data. This helps you make informed decisions about model selection.

-

Feature Engineering: Thoughtful feature selection and engineering reduce noise, aiding the model in capturing relevant patterns.

-

Ensemble Methods: Combine predictions from multiple models to mitigate individual models' biases and variances, yielding a more robust prediction.

5. Real-Life Scenarios

To solidify your understanding, let's examine real-life scenarios:

-

Medical Diagnostics: High bias models might overlook subtle symptoms, leading to misdiagnosis. High variance models, however, might detect noise as important symptoms, leading to inconsistency.

-

Financial Predictions: High bias models might oversimplify complex market dynamics, resulting in inaccurate predictions. High variance models, on the other hand, could react strongly to short-term market noise, causing unstable forecasts.

6. Conclusion: Your Path to Mastery

As you embark on your journey as a data scientist or machine learning practitioner, mastering the variance-bias tradeoff is essential. This understanding empowers you to create models that balance simplicity and complexity, resulting in accurate and generalizable predictions.

Remember, the variance-bias tradeoff is not a one-size-fits-all concept. It varies based on the problem, the data, and the ultimate goal of your analysis. Strive to find that delicate equilibrium that transforms you from a learner into a skilled practitioner who can tackle real-world challenges with precision and insight.