UniFormerV2: Spatiotemporal Learning by Arming Image ViTs with Video UniFormer

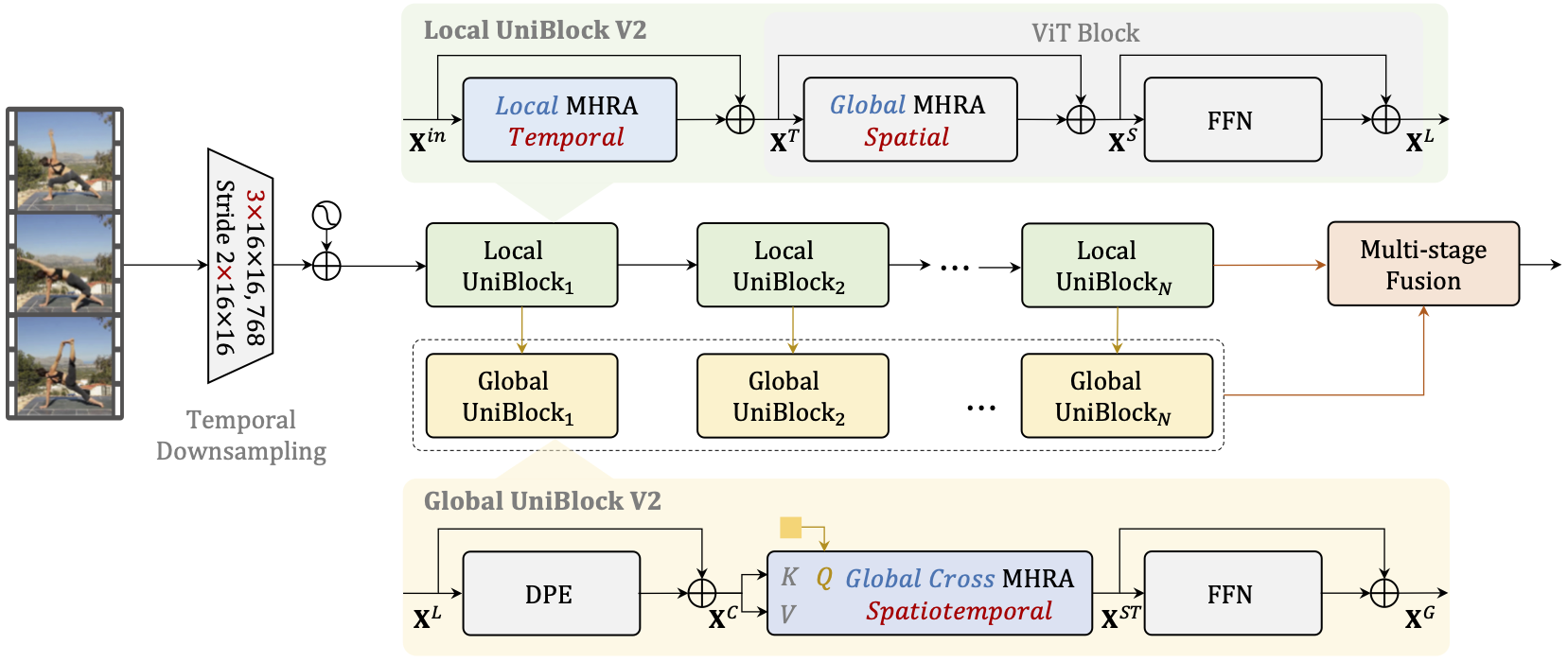

Learning discriminative spatiotemporal representation is the key problem of video understanding. Recently, Vision Transformers (ViTs) have shown their power in learning long-term video dependency with self-attention. Unfortunately, they exhibit limitations in tackling local video redundancy, due to the blind global comparison among tokens. UniFormer has successfully alleviated this issue by unifying convolution and self-attention as a relation aggregator in the transformer format. However, this model requires a tiresome and complicated image-pretraining phase before being finetuned on videos, which hinders its wide use in practice. In contrast, open-sourced ViTs are readily available and well-pretrained with rich image supervision. Based on these observations, we propose a generic paradigm to build a powerful family of video networks by arming the pretrained ViTs with efficient UniFormer designs. We call this family UniFormerV2, since it inherits the concise style of the UniFormer block. Yet it contains brand-new local and global relation aggregators, which achieve a better accuracy-computation balance by seamlessly integrating the advantages of both ViTs and UniFormer. Without any bells and whistles, UniFormerV2 achieves state-of-the-art recognition performance on 8 popular video benchmarks, including scene-related Kinetics-400/600/700 and Moments in Time, temporal-related Something-Something V1/V2, and untrimmed ActivityNet and HACS. In particular, to the best of our knowledge, it is the first model to achieve 90% top-1 accuracy on Kinetics-400.
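In the UniFormerV2 paper, the global relation aggregator summarizes all spatiotemporal tokens with cross-attention from a learnable query, so its cost grows linearly with the number of tokens instead of quadratically as in full self-attention. The plain-Python sketch below illustrates only that core idea under simplifying assumptions: a single head, no learned query/key/value projections, and none of the block's other components. The names `query_cross_attention` and `learnable_query` and the toy token values are hypothetical; this is not the MMAction2 implementation.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def query_cross_attention(query: Vector, tokens: List[Vector]) -> Vector:
    """Summarize all spatiotemporal tokens into a single vector.

    Simplified single-head attention with keys = values = tokens (no learned
    projections). One query attends to L tokens, so the cost is O(L) instead
    of the O(L^2) of full self-attention over the same tokens.
    """
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, t) * scale for t in tokens])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(len(query))]


# Toy input: 2 frames x 2 patches = 4 tokens of dimension 3 (made-up values).
video_tokens = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]
learnable_query = [0.5, -0.5, 0.0]  # would be a trained parameter in a real model
summary = query_cross_attention(learnable_query, video_tokens)
print(len(summary))  # 3
```

Because the attention weights sum to one, the summary is a convex combination of the tokens; a learned query decides which tokens dominate it.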

Kinetics-400

| uniform sampling | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | mm-Kinetics top1 acc | mm-Kinetics top5 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | short-side 320 | UniFormerV2-B/16 | clip | - | - | 84.3 | 96.4 | 84.4 | 96.3 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | short-side 320 | UniFormerV2-B/16 | clip-kinetics710 | - | - | 85.6 | 97.0 | 85.8 | 97.1 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | short-side 320 | UniFormerV2-L/14* | clip-kinetics710 | 88.7 | 98.1 | 88.8 | 98.1 | 88.7 | 98.1 | 4 clips x 3 crop | 0.7T | 354M | config | ckpt | - |
| 16 | short-side 320 | UniFormerV2-L/14* | clip-kinetics710 | 89.0 | 98.2 | 89.1 | 98.2 | 89.0 | 98.2 | 4 clips x 3 crop | 1.3T | 354M | config | ckpt | - |
| 32 | short-side 320 | UniFormerV2-L/14* | clip-kinetics710 | 89.3 | 98.2 | 89.3 | 98.2 | 89.4 | 98.2 | 2 clips x 3 crop | 2.7T | 354M | config | ckpt | - |
| 32 | short-side 320 | UniFormerV2-L/14@336* | clip-kinetics710 | 89.5 | 98.4 | 89.7 | 98.3 | 89.5 | 98.4 | 2 clips x 3 crop | 6.3T | 354M | config | ckpt | - |
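The "uniform sampling" column gives the number of frames (8, 16 or 32) drawn from each clip. A common segment-based scheme splits the video into that many equal segments and takes one frame per segment. The sketch below assumes a deterministic, test-time variant that picks the middle frame of each segment; it illustrates the idea and is not claimed to match MMAction2's sampling transform index for index. The function name `uniform_sample_indices` is hypothetical.

```python
from typing import List


def uniform_sample_indices(num_frames: int, clip_len: int) -> List[int]:
    """Pick one frame from the middle of each of `clip_len` equal segments.

    Deterministic (test-time style) segment-based sampling; a training-time
    variant would typically pick a random frame inside each segment instead.
    """
    if num_frames <= 0 or clip_len <= 0:
        raise ValueError("num_frames and clip_len must be positive")
    seg_size = num_frames / clip_len
    # The middle of segment i lies at (i + 0.5) * seg_size; clamp to a valid index.
    return [min(int((i + 0.5) * seg_size), num_frames - 1) for i in range(clip_len)]


print(uniform_sample_indices(300, 8))  # [18, 56, 93, 131, 168, 206, 243, 281]
```

Sampling over the whole video, rather than a dense window of consecutive frames, lets a small number of frames cover the full temporal extent of the action.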

Kinetics-600

| uniform sampling | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | mm-Kinetics top1 acc | mm-Kinetics top5 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | Raw | UniFormerV2-B/16 | clip-kinetics710 | - | - | 86.1 | 97.2 | 86.4 | 97.3 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 89.0 | 98.3 | 89.0 | 98.2 | 87.5 | 98.0 | 4 clips x 3 crop | 0.7T | 354M | config | ckpt | - |
| 16 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 89.4 | 98.3 | 89.4 | 98.3 | 87.8 | 98.0 | 4 clips x 3 crop | 1.3T | 354M | config | ckpt | - |
| 32 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 89.2 | 98.3 | 89.5 | 98.3 | 87.7 | 98.1 | 2 clips x 3 crop | 2.7T | 354M | config | ckpt | - |
| 32 | Raw | UniFormerV2-L/14@336* | clip-kinetics710 | 89.8 | 98.5 | 89.9 | 98.5 | 88.8 | 98.3 | 2 clips x 3 crop | 6.3T | 354M | config | ckpt | - |
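The "testing protocol" column such as "4 clips x 3 crop" means each video is evaluated on 4 temporal clips times 3 spatial crops, i.e. 12 views, whose predictions are fused into one video-level score. The sketch below assumes fusion by averaging per-view softmax probabilities, which is one common choice; the helper names and the toy logits are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one view's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def average_views(view_logits: List[List[float]]) -> List[float]:
    """Average class probabilities over all test views of one video."""
    probs = [softmax(v) for v in view_logits]
    n = len(probs)
    return [sum(p[c] for p in probs) / n for c in range(len(probs[0]))]


num_clips, num_crops = 4, 3
num_views = num_clips * num_crops  # 12 forward passes per video

# Hypothetical logits for a 3-class problem: every view favors class 1.
views = [[0.1, 2.0, -1.0] for _ in range(num_views)]
video_probs = average_views(views)
pred = max(range(len(video_probs)), key=video_probs.__getitem__)
print(num_views, pred)  # 12 1
```

Under this protocol, a "2 clips x 3 crop" row evaluates 6 views per video instead of 12.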

Kinetics-700

| uniform sampling | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | mm-Kinetics top1 acc | mm-Kinetics top5 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | Raw | UniFormerV2-B/16 | clip | - | - | 75.8 | 92.8 | 75.9 | 92.9 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | Raw | UniFormerV2-B/16 | clip-kinetics710 | - | - | 76.3 | 92.7 | 76.3 | 92.9 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 80.8 | 95.2 | 80.8 | 95.4 | 79.4 | 94.8 | 4 clips x 3 crop | 0.7T | 354M | config | ckpt | - |
| 16 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 81.2 | 95.6 | 81.2 | 95.6 | 79.2 | 95.0 | 4 clips x 3 crop | 1.3T | 354M | config | ckpt | - |
| 32 | Raw | UniFormerV2-L/14* | clip-kinetics710 | 81.4 | 95.7 | 81.5 | 95.7 | 79.8 | 95.3 | 2 clips x 3 crop | 2.7T | 354M | config | ckpt | - |
| 32 | Raw | UniFormerV2-L/14@336* | clip-kinetics710 | 82.1 | 96.0 | 82.1 | 96.1 | 80.6 | 95.6 | 2 clips x 3 crop | 6.3T | 354M | config | ckpt | - |

Moments in Time

| uniform sampling | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | Raw | UniFormerV2-B/16 | clip-kinetics710-kinetics400 | 42.3 | 71.5 | 42.6 | 71.7 | 4 clips x 3 crop | 0.1T | 115M | config | ckpt | log |
| 8 | Raw | UniFormerV2-L/14* | clip-kinetics710-kinetics400 | 47.0 | 76.1 | 47.0 | 76.1 | 4 clips x 3 crop | 0.7T | 354M | config | ckpt | - |
| 8 | Raw | UniFormerV2-L/14@336* | clip-kinetics710-kinetics400 | 47.7 | 76.8 | 47.8 | 76.0 | 4 clips x 3 crop | 1.6T | 354M | config | ckpt | - |

Kinetics-710

| uniform sampling | resolution | backbone | pretrain | top1 acc | top5 acc | config | ckpt | log |
|---|---|---|---|---|---|---|---|---|
| 8 | Raw | UniFormerV2-B/16* | clip | 78.9 | 94.2 | config | ckpt | log |
| 8 | Raw | UniFormerV2-L/14* | clip | - | - | config | ckpt | - |
| 8 | Raw | UniFormerV2-L/14@336* | clip | - | - | config | ckpt | - |

The models with * are ported from the UniFormerV2 repo and tested on our data. Due to computational limitations, we only provide a reliable training config for the base model (i.e., UniFormerV2-B/16).

- The values in columns named after "reference" are the results of the original repo.
- The values in `top1/5 acc` are tested on the same data list as the original repo, and the label map is provided by UniFormerV2.
- The values in columns named after "mm-Kinetics" are the testing results on the Kinetics dataset held by MMAction2, which is also used by other models in MMAction2. Due to the differences between the various versions of the Kinetics dataset, there is a small gap between `top1/5 acc` and `mm-Kinetics top1/5 acc`. For a fair comparison with other models, we report both results here. Note that we only report inference results: since the training set differs between UniFormerV2 and the other models, these results are lower than those tested on the author's version.
- Since the original models for Kinetics-400/600/700 adopt different label files, we simply map the weights according to the label names. The new label map for Kinetics-400/600/700 can be found here.
- Due to some differences between SlowFast and MMAction2, there are some gaps between the results obtained with the two codebases.
- Kinetics-710 is used for pretraining, which efficiently improves performance on other datasets. You can find more details in the paper. We also map the weights for the Kinetics-710 checkpoints; the label map can be found here.
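The notes above mention mapping checkpoint weights "according to the label name". Assuming the classification head is a linear layer with one weight row and one bias per class, this amounts to reordering rows so that row `i` matches the `i`-th name in the new label map. The sketch uses plain lists instead of tensors; the function name and toy values are hypothetical, and this is not the conversion script used for the released checkpoints.

```python
from typing import Dict, List, Sequence, Tuple


def remap_classifier(
    weights: Sequence[List[float]],
    biases: Sequence[float],
    src_labels: Sequence[str],
    dst_labels: Sequence[str],
) -> Tuple[List[List[float]], List[float]]:
    """Reorder classifier rows so that row i corresponds to dst_labels[i].

    Raises KeyError if a target label is missing from the source label list.
    """
    src_index: Dict[str, int] = {name: i for i, name in enumerate(src_labels)}
    order = [src_index[name] for name in dst_labels]
    new_w = [list(weights[i]) for i in order]
    new_b = [biases[i] for i in order]
    return new_w, new_b


# Toy example: the same three classes listed in a different order.
src = ["abseiling", "air drumming", "answering questions"]
dst = ["answering questions", "abseiling", "air drumming"]
w = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]  # one row per source class
b = [0.1, 0.2, 0.3]
new_w, new_b = remap_classifier(w, b, src, dst)
print(new_b)  # [0.3, 0.1, 0.2]
```

Matching by name rather than by index keeps each class's learned weights attached to the right label when two label files list the same classes in different orders.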

For more details on data preparation, you can refer to

You can use the following command to test a model.

```shell
python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]
```

Example: test the UniFormerV2-B/16 model on the Kinetics-400 dataset and dump the results to a pkl file.

```shell
python tools/test.py configs/recognition/uniformerv2/uniformerv2-base-p16-res224_clip-kinetics710-pre_u8_kinetics400-rgb.py \
    checkpoints/SOME_CHECKPOINT.pth --dump result.pkl
```

For more details, you can refer to the Test part in the Training and Test Tutorial.
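The `top1 acc` and `top5 acc` columns count a video as correct when its ground-truth label is among the 1 or 5 highest-scoring classes. The exact structure of a dumped `result.pkl` depends on the MMAction2 version, so the sketch below does not read that file; it assumes predictions have already been converted into hypothetical `(class_scores, gt_label)` pairs and only illustrates the metric.

```python
from typing import Sequence, Tuple


def topk_accuracy(samples: Sequence[Tuple[Sequence[float], int]], k: int) -> float:
    """Fraction of samples whose ground-truth label is among the k highest scores."""
    hits = 0
    for scores, label in samples:
        # Class indices sorted by descending score; keep the first k.
        topk = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)[:k]
        if label in topk:
            hits += 1
    return hits / len(samples)


# Hypothetical (class_scores, gt_label) pairs for a 3-class problem.
results = [
    ([0.7, 0.2, 0.1], 0),  # correct at top-1
    ([0.5, 0.3, 0.2], 1),  # correct only within top-2
    ([0.1, 0.2, 0.7], 0),  # wrong at top-1 and top-2
]
print(topk_accuracy(results, 1), topk_accuracy(results, 2))  # top-1 = 1/3, top-2 = 2/3
```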

```BibTeX
@article{Li2022UniFormerV2SL,
  title={UniFormerV2: Spatiotemporal Learning by Arming Image ViTs with Video UniFormer},
  author={Kunchang Li and Yali Wang and Yinan He and Yizhuo Li and Yi Wang and Limin Wang and Y. Qiao},
  journal={ArXiv},
  year={2022},
  volume={abs/2211.09552}
}
```