TensorRT inference slower than PyTorch for DeepLabV3+ with ResNest101 #239

Comments

Is it because ResNeSt is not supported?

Could you please change the title to English so that overseas users can tell what it's about?

@Xingxu1996 Hi, could you tell us how you tested the speed of the PyTorch model and the TensorRT model?

In apis/test.py, I modified the code in def single_gpu_test: So, the ONNX model is four times slower than the PyTorch model, and the TRT model is two times slower than the PyTorch model!
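The modified timing code is not preserved in this thread. As a generic illustration only, and not the poster's actual change, a minimal latency measurement might look like the sketch below. The helper name `mean_latency_ms` and the tiny stand-in model are hypothetical. The important detail for a fair PyTorch vs. TensorRT comparison is that CUDA kernels launch asynchronously, so the timer should only be read after a synchronization, and a few warm-up runs should be excluded.

```python
import time

import torch


def mean_latency_ms(fn, warmup=3, iters=10):
    """Average wall-clock time of fn() in milliseconds (hypothetical helper)."""
    # Warm-up runs are excluded from the measurement.
    for _ in range(warmup):
        fn()
    # Wait for pending GPU work before starting the timer.
    if torch.cuda.is_available():
        torch.cuda.synchronize()
    start = time.perf_counter()
    for _ in range(iters):
        fn()
    # Wait again so that all timed GPU work has actually finished.
    if torch.cuda.is_available():
        torch.cuda.synchronize()
    return (time.perf_counter() - start) * 1000.0 / iters


# Stand-in model and input; replace with the real segmentor and image tensor.
model = torch.nn.Conv2d(3, 8, kernel_size=3).eval()
x = torch.randn(1, 3, 64, 64)
with torch.no_grad():
    latency_ms = mean_latency_ms(lambda: model(x))
print(f"{latency_ms:.3f} ms per forward pass")
```

The same helper can wrap a call into any backend, as long as every backend is fed the same input shape and timed in the same way.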

It's no surprise to see that the ONNX model is slower. But normally, TensorRT should be faster than PyTorch. Please provide the detailed script you used to convert the model in mmdeploy, including the model config, deploy config and checkpoint.

The checkpoint file? How should I provide it?

If you use a standard checkpoint provided by mmseg, then giving us the link is OK.

I have solved the problem. In the TensorRT engine, the ReduceSum operations (torch.sum(dim=n) in the ResNeSt block) cost a lot of time, so I replaced them with slicing and element-wise addition. Based on the torch2trt framework, my segmentation model's inference speed improved by 40%+. But I have not found a solution for the torch->onnx->trt route used in MMDeploy!

@Xingxu1996 Hi, good to know it's solved. Could you tell us which lines of code you changed in mmseg? Let's see if this could be integrated into mmdeploy.

In mmseg/models/backbones/resnest.py, in class SplitAttentionConv2d(nn.Module): def forward(), there are two ReduceSum operations: (1) gap = splits.sum(dim=1); (2) torch.sum(attens * splits, dim=1). Because my self.radix = 2, it's easy to replace them with slicing and element-wise addition. Based on the TensorRT profiler, these two operations account for a lot of the inference time. The TensorRT version is 7.2.1.6. Looking forward to a more robust solution from the experts (DaLao)!
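The exact patch is not shown in the thread, so the following is only a sketch of the idea under stated assumptions: the helper name `sum_dim1_by_slicing` is hypothetical, and the tensor shapes assume the (batch, radix, channels, H, W) layout, with toy sizes rather than the real model's. It replaces a reduction over dim=1 with slices and element-wise adds, which are expected to export as Slice/Add style operations instead of ReduceSum.

```python
import torch


def sum_dim1_by_slicing(x: torch.Tensor) -> torch.Tensor:
    """Equivalent of x.sum(dim=1), written as slices plus element-wise adds."""
    out = x[:, 0]
    # For radix=2 this loop runs once, giving x[:, 0] + x[:, 1].
    for i in range(1, x.size(1)):
        out = out + x[:, i]
    return out


# Assumed layout: (batch, radix, channels, H, W); sizes are illustrative.
radix = 2
splits = torch.randn(1, radix, 8, 4, 4)
attens = torch.randn(1, radix, 8, 1, 1)

gap = sum_dim1_by_slicing(splits)           # instead of splits.sum(dim=1)
out = sum_dim1_by_slicing(attens * splits)  # instead of torch.sum(attens * splits, dim=1)
```

Whether this helps on a given TensorRT version is something to confirm with the profiler; the thread only reports a gain for TensorRT 7.2.1.6 through torch2trt.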

Thanks for your detailed info. Let's see if there's any good solution here.

@Xingxu1996 Hi, could you run

I am very sorry for the late reply!

2022-05-14 11:05:36,453 - mmdeploy - INFO - Backend information
2022-05-14 11:05:44,314 - mmdeploy - INFO - Codebase information

GPU is P40, input_shape [1,3,1080,1920],

Could you describe how to solve the problem? Or would it be better to contact each other via WeChat?

@Xingxu1996 Hi, sorry for the late reply. We have not yet integrated what you mentioned into mmdeploy. You could join our WeChat group for more convenient discussion. Please contact the assistant through WeChat ID


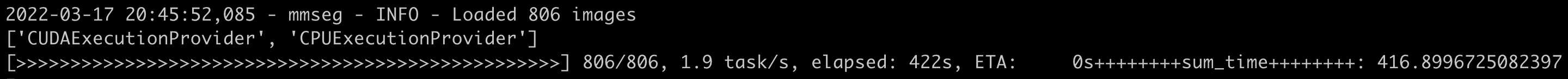

@Xingxu1996 Hi, thanks for your patience. I've tested on my machine, and the results are as follows.

env

Configs: model cfg: https://github.com/open-mmlab/mmsegmentation/blob/master/configs/resnest/deeplabv3plus_s101-d8_512x1024_80k_cityscapes.py

Original model: no change to mmseg.

Result

Conclusions

Closed since we have the above solution for this issue.

After converting DeepLabV3+ with a ResNeSt101 backbone to TensorRT, inference became more than two times slower.

Why is that?
