javaagent causes big heap on high load for Netty instrumentation #5265

Comments

@ozoz03 I ran your sample app and am not convinced that this is a memory leak. If I run your sample app for a bit and perform a GC from jconsole before taking a heap dump, then all the
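For anyone who wants to repeat this kind of check without jconsole, here is a minimal sketch that requests a GC and then reads the used heap through the standard `java.lang.management` API. The class name `HeapAfterGc` and the method `sample` are made up for illustration, and the code has to run inside the JVM under test. Note that `gc()` is only a request and is ignored when the JVM is started with `-XX:+DisableExplicitGC`.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

// Hypothetical helper, not part of the sample app or the javaagent.
public class HeapAfterGc {

    /**
     * Requests a GC, records the used heap in bytes, waits, and repeats.
     * Values that keep rising across many samples under steady load hint at
     * retained objects; values that fall back after each GC do not.
     */
    public static long[] sample(int count, long intervalMillis) {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        long[] used = new long[count];
        for (int i = 0; i < count; i++) {
            memory.gc(); // effectively the same as System.gc()
            used[i] = memory.getHeapMemoryUsage().getUsed();
            try {
                Thread.sleep(intervalMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt flag and stop early
                break;
            }
        }
        return used;
    }

    public static void main(String[] args) {
        for (long bytes : sample(5, 1000L)) {
            System.out.printf("used heap after GC: %d MiB%n", bytes / (1024 * 1024));
        }
    }
}
```

Comparing these numbers over a longer run, with and without the javaagent attached, should show whether the growth survives a full collection.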

@laurit On prod we cannot wait until an OOM error happens; we just redeploy.

@ozoz03 How large a heap do you use in production?

@iNikem 6g

@ozoz03 If possible, could you verify whether the issue you observed is resolved in the nightly build: https://oss.sonatype.org/content/repositories/snapshots/io/opentelemetry/javaagent/opentelemetry-javaagent/1.11.0-SNAPSHOT/

@laurit Thank you very much.

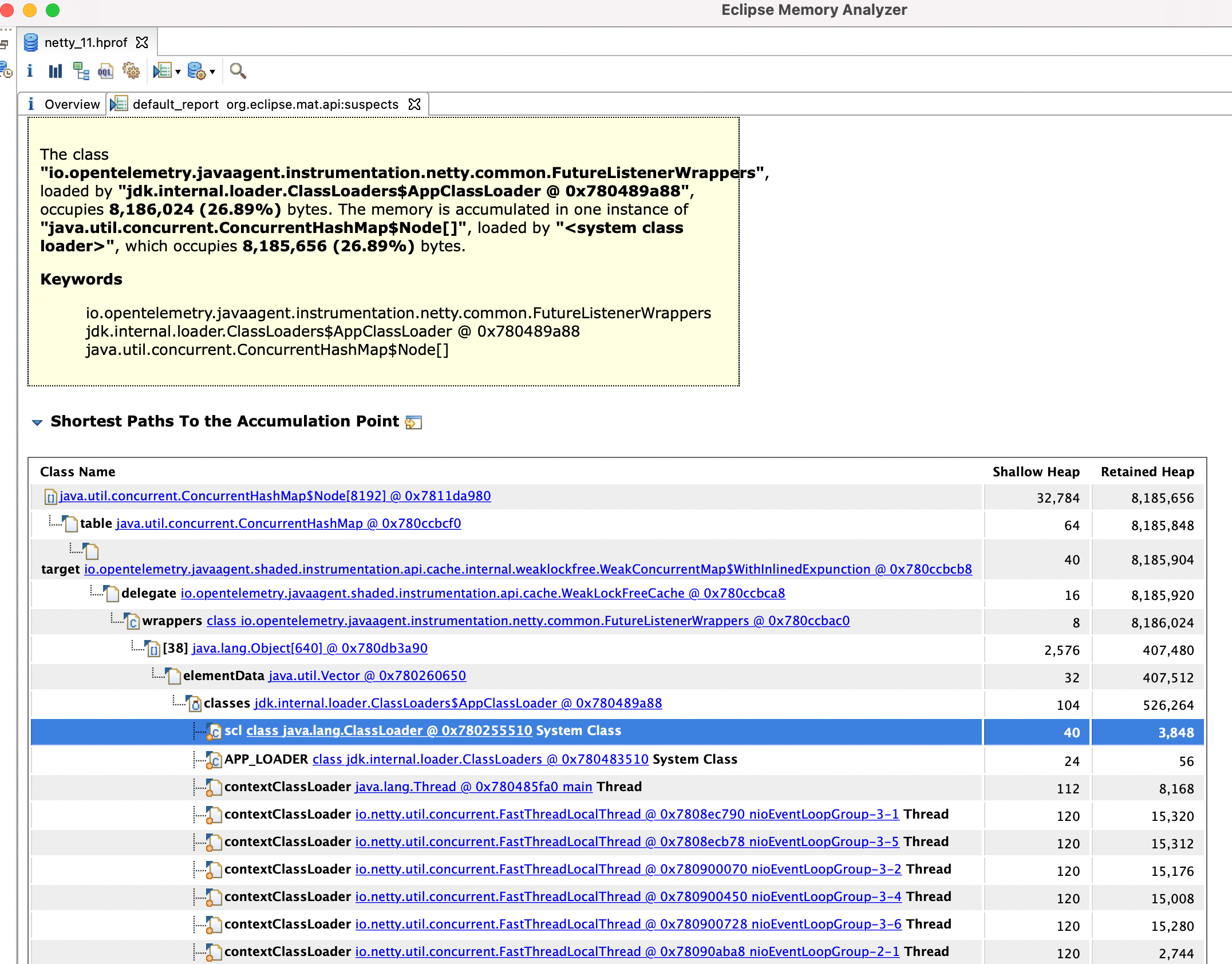

The javaagent causes a critical heap increase with the Netty instrumentation in our prod environment (the heap grows persistently over days and is not reclaimed by GC).

We were able to reproduce the issue with a test app under Artillery load.

It looks like the cache that contains `FutureListenerWrappers` is not properly cleaned up by GC.

Steps to reproduce

Here are steps to reproduce: https://github.com/ozoz03/netty-otel-memory-leak/blob/master/README.md

What version are you using?

v1.10.0

Environment

Compiler: openjdk version "11.0.11" 2021-04-20 LTS

OS: macOS 11.6.2, Alpine Linux 3.12.8
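As background for the cache suspicion above: one general way a weak-keyed wrapper cache can hold on to entries is when the cached wrapper strongly references its own key, which the `WeakHashMap` documentation warns about. The sketch below is not the javaagent's actual code. `ListenerWrapperCache`, `Listener`, and `Wrapper` are hypothetical names, and it only illustrates the usual remedy of storing the value behind a `WeakReference`.

```java
import java.lang.ref.WeakReference;
import java.util.Map;
import java.util.WeakHashMap;

// Hypothetical illustration, not the OpenTelemetry implementation.
public class ListenerWrapperCache {

    /** Stand-in for a Netty future listener. */
    public interface Listener {
        void operationComplete(String result);
    }

    /** Wrapper that strongly references the original listener (the cache key). */
    static final class Wrapper implements Listener {
        private final Listener delegate;

        Wrapper(Listener delegate) {
            this.delegate = delegate;
        }

        @Override
        public void operationComplete(String result) {
            // Tracing logic would go here before delegating.
            delegate.operationComplete(result);
        }
    }

    // Weak keys alone are not enough: a strongly held Wrapper value would keep
    // its key reachable forever. Holding the value weakly breaks that cycle.
    private final Map<Listener, WeakReference<Wrapper>> wrappers = new WeakHashMap<>();

    /** Returns the cached wrapper for the listener, creating one if needed. */
    public synchronized Listener wrap(Listener listener) {
        WeakReference<Wrapper> ref = wrappers.get(listener);
        Wrapper wrapper = (ref == null) ? null : ref.get();
        if (wrapper == null) {
            wrapper = new Wrapper(listener);
            wrappers.put(listener, new WeakReference<>(wrapper));
        }
        return wrapper;
    }
}
```

With this layout the wrapper stays alive only while something else, such as the future it was registered on, still references it. Once that reference goes away, both the wrapper and the original listener become collectable.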
