226-yolov7-optimization on Ubuntu #742

@bbartling Which Ubuntu and Python versions do you use? Could you please try reducing the number of samples? Replace with

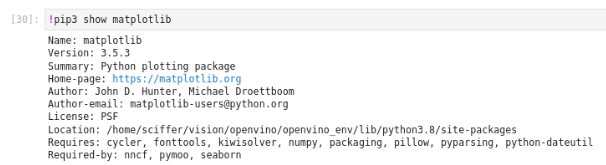

@akashAD98 Which matplotlib version do you have?

@eaidova It's 3.5.3.

@akashAD98 Could you please update it? It looks like an internal bug in that specific matplotlib version. I tested with 3.6.2 and do not see this problem.
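
Upgrading in one environment while the notebook kernel runs in another is an easy way to keep hitting the same bug. A minimal sketch for checking which matplotlib the kernel itself sees, run from a notebook cell; the helper names are hypothetical, and 3.6.2 is only the version reported as working above, not a verified minimum.

```python
import re
from importlib import metadata


def version_tuple(version):
    """Turn a version string such as "3.5.3" into (3, 5, 3).

    Only the leading numeric release part is kept, so pre-release or dev
    suffixes (for example "3.6.2rc1") are ignored; good enough for a rough check.
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def is_at_least(installed, required):
    """True if `installed` is the same as or newer than `required`."""
    return version_tuple(installed) >= version_tuple(required)


def matplotlib_is_recent_enough(required="3.6.2"):
    """Check the matplotlib visible to *this* interpreter (i.e. the notebook kernel)."""
    try:
        installed = metadata.version("matplotlib")
    except metadata.PackageNotFoundError:
        return False
    return is_at_least(installed, required)


if __name__ == "__main__":
    print(matplotlib_is_recent_enough())
```

If this prints `False` after upgrading, the upgrade most likely went into a different environment than the one the kernel uses.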

Hi @eaidova Using the

Should I add anything to the test function?

It solved my issue, thanks. Also, is there any script for running inference on video and webcam using the .xml file (or another converted format)?

@bbartling OK, now I know that you use the develop branch :) Please switch to main; I introduced this functionality there.

@akashAD98 Glad to hear that you solved the issue. Inside the notebook you can see inference code for a single image, which includes preprocessing, inference, and postprocessing. I think it should not be a problem to just replace image reading with video capturing, right?
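
Replacing image reading with video capture amounts to looping over frames and running the same single-image pipeline on each one. A minimal sketch, assuming that pipeline has been wrapped in one function (called `detect` here, a hypothetical name) that takes a BGR frame; `frames_from_capture` and `run_on_stream` are likewise illustrative helpers, not part of the tutorial. OpenCV is only needed when frames are actually read.

```python
import time


def frames_from_capture(source=0):
    """Yield BGR frames from a video file path or a webcam index via OpenCV."""
    import cv2  # imported lazily so the rest of this sketch does not need OpenCV

    cap = cv2.VideoCapture(source)
    if not cap.isOpened():
        raise RuntimeError(f"could not open video source {source!r}")
    try:
        while True:
            ok, frame = cap.read()
            if not ok:  # end of the file, or the camera stopped delivering frames
                break
            yield frame
    finally:
        cap.release()


def run_on_stream(frames, detect, on_result=None, max_frames=None):
    """Call `detect` on each frame and return the average end-to-end FPS.

    `detect` stands for the single-image pipeline (preprocessing, inference,
    postprocessing) wrapped in one function. The timing includes reading and
    decoding the frames, not just inference.
    """
    processed = 0
    start = time.perf_counter()
    for frame in frames:
        if max_frames is not None and processed >= max_frames:
            break
        result = detect(frame)
        if on_result is not None:
            on_result(frame, result)  # e.g. draw boxes and show or write the frame
        processed += 1
    elapsed = time.perf_counter() - start
    return processed / elapsed if processed and elapsed > 0 else 0.0
```

Usage would look like `fps = run_on_stream(frames_from_capture("input.mp4"), detect=my_detect)`, or `frames_from_capture(0)` for the default webcam, where `my_detect` is your own wrapper. Because the returned FPS covers the whole loop, running it once with the FP32 model and once with the quantized one gives a like-for-like speed comparison.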

@eaidova Still, let me try. Also, NNCF is not supported. My purpose in using OpenVINO is to get higher FPS on CPU for video/webcam, not for images; that's the main part I'm missing in this tutorial, and without it I'm not able to compare speed.

@akashAD98 What do you mean by NNCF being unsupported? It is a tool that allows you to get a quantized model representation. Moving to low precision helps make the model more hardware-friendly and speeds it up, so I think it can help you get higher FPS on CPU no matter whether you use video or images as input.
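
To make "moving to low precision" concrete, here is a toy illustration of affine 8-bit quantization of a single float tensor: each value is stored as a uint8 code plus a shared scale and zero point, which takes a quarter of the memory of float32. This is not NNCF's algorithm (NNCF picks quantization ranges from a calibration dataset across the whole model); the function names are hypothetical.

```python
import numpy as np


def quantize_uint8(x):
    """Affine 8-bit quantization: q = clip(round(x / scale) + zero_point, 0, 255)."""
    x = np.asarray(x, dtype=np.float32)
    # The representable range must include 0 so that 0.0 maps to an exact integer.
    lo = min(float(x.min()), 0.0)
    hi = max(float(x.max()), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-lo / scale))
    q = np.clip(np.round(x / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map uint8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale
```

The reconstruction error per element is bounded by about half a quantization step (`scale / 2`), which is why accuracy usually drops only slightly while integer arithmetic on the CPU gets much cheaper.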

@eaidova Yes, I can do it, but I'm just saying that if you add this, it would be easier for others. Yesterday my NNCF import was not working; now it's working fine. Thanks for your quick reply.

@akashAD98 Thank you for your feedback. We may consider adding video inference to the tutorial in the future (or providing a separate demo for it).

@eaidova I'm getting this issue while running inference.

@akashAD98 Are you sure that you specified the postprocessing correctly?

@eaidova I tried exporting it with only the `--grid` parameter, but I'm still not able to proceed further.

@akashAD98 I mean that if you use the `--grid` parameter, your postprocessing code is wrong: it assumes that the model has 3 outputs, while you have only one.
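
A cheap way to catch this mismatch early is to check the number and shape of the model outputs before postprocessing. A minimal sketch, assuming (not confirmed in this thread) that a `--grid` export yields one output of shape `(1, N, 5 + num_classes)` with rows `[cx, cy, w, h, objectness, class scores...]`, as in YOLOv5/YOLOv7-style heads. The function names are hypothetical, and non-maximum suppression still has to run on the filtered boxes.

```python
import numpy as np


def decoded_predictions(outputs):
    """Return the (N, 5 + num_classes) array from a model exported with --grid.

    Assumption: with --grid the model has ONE output of shape (1, N, 5 + num_classes).
    Without --grid there are three raw head outputs that still need grid decoding,
    which this sketch does not implement.
    """
    outputs = list(outputs)
    if len(outputs) != 1:
        raise ValueError(
            f"expected 1 output from a --grid export, got {len(outputs)}; "
            "raw head outputs need grid-decoding postprocessing instead"
        )
    pred = np.asarray(outputs[0])
    if pred.ndim != 3 or pred.shape[0] != 1 or pred.shape[2] < 6:
        raise ValueError(f"unexpected output shape {pred.shape}")
    return pred[0]


def filter_by_confidence(pred, conf_thres=0.25):
    """Keep rows whose objectness * best class score exceeds conf_thres.

    Returns (boxes as cx, cy, w, h; scores; class ids). NMS still has to follow.
    """
    cls_scores = pred[:, 5:] * pred[:, 4:5]  # class confidence = objectness * class score
    class_ids = cls_scores.argmax(axis=1)
    scores = cls_scores[np.arange(len(pred)), class_ids]
    keep = scores > conf_thres
    return pred[keep, :4], scores[keep], class_ids[keep]
```

Code written for three raw outputs will fail the first check here instead of silently producing no detections.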

@akashAD98 Why don't you use `prepare_input_tensor` for video frames? You are missing input tensor normalization, which is why you get no detections. Also, each frame from video capture is BGR; you need to convert it to RGB the same way you do in `process_image`.

Sorry, I have a lot of work, and I think it is an inappropriate request to rewrite someone else's code to make it work, especially if it is not connected with the tutorial content. Your code probably works with your own ONNX model, which was exported differently from the way demonstrated in our tutorial. If you convert that model to IR using `mo`, your code will work with it in that format, but the model provided in the tutorial (whether ONNX or XML) requires different postprocessing.
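
The two missing steps named in the reply (BGR to RGB conversion and normalization) can be sketched for a single video frame as follows. This is a stand-alone illustration with a hypothetical name, not the tutorial's `prepare_input_tensor`; it assumes the frame has already been resized or letterboxed to the model's input size and that the model expects an NCHW float tensor scaled to 0.0-1.0.

```python
import numpy as np


def frame_to_input_tensor(frame_bgr):
    """Turn one OpenCV BGR frame (H, W, 3, uint8) into a (1, 3, H, W) float32 tensor.

    Assumes the frame is already resized/letterboxed to the model input size.
    """
    if frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError(f"expected an HxWx3 frame, got shape {frame_bgr.shape}")
    rgb = frame_bgr[:, :, ::-1]  # BGR -> RGB, same effect as cv2.COLOR_BGR2RGB
    chw = rgb.transpose(2, 0, 1)  # HWC -> CHW
    tensor = np.ascontiguousarray(chw, dtype=np.float32) / 255.0  # 0-255 -> 0.0-1.0
    return tensor[np.newaxis, ...]  # add the batch dimension
```

Skipping the division by 255 feeds values up to 255 into a network trained on 0-1 inputs, which is consistent with the "no detections" symptom described above.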

@eaidova I converted to RGB and also used `prepare_input_tensor()`, so what is wrong here?

@akashAD98 But that is too late: you already run inference using the async infer queue inside `process_video`.
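
The point is ordering: each frame has to be converted and normalized before it is submitted to the asynchronous queue. A minimal sketch, assuming `start_async` is any callable taking `(inputs, userdata)`; with OpenVINO this could be the `start_async` method of an `AsyncInferQueue`, with `OrderedResults.add` called from the callback registered via `set_callback` (which receives the finished request and the userdata) and `wait_all()` called after the last frame. That wiring, and the names `submit_frames` and `OrderedResults`, are assumptions for illustration.

```python
import threading


def submit_frames(frames, preprocess, start_async):
    """Preprocess every frame BEFORE handing it to an asynchronous inference queue.

    `start_async` is any callable taking (inputs, userdata). The frame index is
    passed as userdata so that results completing out of order can be re-ordered.
    """
    submitted = 0
    for index, frame in enumerate(frames):
        tensor = preprocess(frame)  # BGR->RGB and normalization happen here,
        start_async(tensor, index)  # before the request is queued for inference
        submitted += 1
    return submitted


class OrderedResults:
    """Collect (index, result) pairs from completion callbacks and release them in frame order."""

    def __init__(self):
        self._lock = threading.Lock()  # callbacks may run on worker threads
        self._pending = {}
        self._next_index = 0

    def add(self, index, result):
        """Store one result; return every result that is now ready, in frame order."""
        with self._lock:
            self._pending[index] = result
            ready = []
            while self._next_index in self._pending:
                ready.append(self._pending.pop(self._next_index))
                self._next_index += 1
            return ready
```

Re-ordering by index matters for video because several requests run in parallel, so frames can finish in a different order than they were submitted.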

When I run this notebook on Ubuntu, after successfully setting up the virtual env and installing requirements.txt, the kernel dies halfway through every time. Do you have any tips to try?

It's this block of code towards the end. When it runs, I can see the progress go from 0 to 100%, but once it reaches 100%, the kernel dies and I can't get any further.

Any suggestions would be greatly appreciated.
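
One suggestion in this thread is to reduce the number of samples; the exact replacement proposed there is not preserved. As a generic sketch, assuming the failing block iterates over a data loader or similar iterable, and assuming (the thread does not confirm it) that the crash is memory-related, the loop can be capped with `itertools.islice` without touching the loader itself. The helper name `limited` is hypothetical.

```python
from itertools import islice


def limited(samples, max_samples):
    """Return an iterator over at most `max_samples` items of any iterable.

    Passing None keeps the full iterable, which makes it easy to switch back.
    """
    if max_samples is None:
        return iter(samples)
    if max_samples < 0:
        raise ValueError("max_samples must be non-negative")
    return islice(samples, max_samples)
```

For example, `for batch in limited(dataloader, 300): ...` processes only the first 300 items. If the kernel survives with a small cap and dies with a large one, memory use in the step after the loop is a likely suspect.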
