New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Observing significant (up to 30x) __get_item__ performance drop between 0.19.2 and 0.20.x #16644

Comments

|

Unfortunately it is very hard to debug such a large example -- a lot of different things could be doing wrong. Could please make a Minimal, Complete, and Verifiable example? Thanks |

|

Thanks for the link! I will post something shortly. |

|

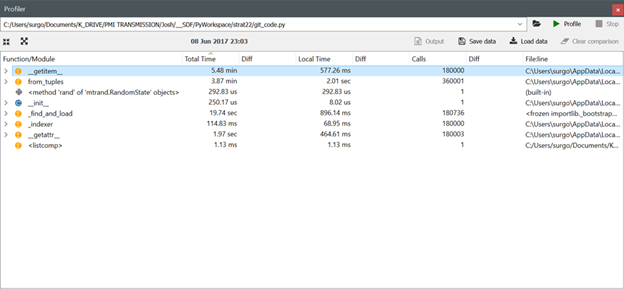

Okay, I can replicate the 0.19.2 to 0.20.1 performance degradation with the following code, but the 0.20.2 performance is roughly the same as the 0.19.2 performance (i.e., the get_item issues appears to have been corrected in version 0.20.2 for my verifiable code example). I will look at what else is in my original code and revise the verifiable example tomorrow to try to replicate the 3x performance degradation I saw in my original script. I have also included images for the new code from the Spyder profiler after running Pandas versions 0.19.2, 0.20.1 and 0.20.2 respectively. |

|

@jupiterjosh The related issue with the MultiIndex indexing performance regression: #16319 (and PRs #16324 and #16346) |

|

If you can get the remaining slowdown between 0.19.2 and 0.20.2 in a smaller reproducible example, that would be nice! |

|

@jorisvandenbossche Thanks. I will track down the remaining slowdown. @jreback I would have preferred if this was closed with some sort of comment since I did observe a slowdown between 0.19.2 and 0.20.2. I will repost when I have figured out where the remaining slowdown is occurring and I can create a better verifiable example. It is somewhere buried in get_loc, but I can't tease out where as of yet. Btw, I don't know if this helps for your performance benchmarking, but as a performance use-case I routinely pull in dataframes with north of 1 million records. |

|

The remaining slowdown is due to whether get_loc from pandas_libs.index.MultiIndexObjectEngine is preferred by the dataframe (this method returns quickly) or whether get_loc from pandas_libs.index.MultiIndexHashEngine is used (much much slower). The MultIndexHashEngine uses a function called "_combine_hash_arrays" which appears to be the code causing the slowdown. I am not familiar with the pandas codebase enough to alter the code below to trigger MultiIndexHashEngine to be preferred over MultiIndexObjectEngine. Any ideas? I tried mixing the types on my index, but that didn't trigger MultIndexHashEngine, and yet the script that I run in my original code causes this to be used. I also tried loading from a file (my original script calls read_csv) thinking that might change the type of index engine, but that also did not work. ` #%% #%% |

|

@jupiterjosh I am happy to keep around an issue that demonstrates a clear example of a performance regression, meaning it is a minimal reproducible example. pls format the code and show timing differences with a version on each. further you in fact stated this

|

|

@jreback -- perhaps you could comment on this:

Pandas is doing some sort of type inferencing to determine when the MultiIndexHashEngine is used over the MultiIndexObjectEngine. If you could give me a pointer on how to set up a multiindex that triggers the use of the MultiIndexHashEngine then I will post code and profiler results. |

|

Yes, there are indeed two mechanisms to index MultiIndexes, and which is used depends on the size of the index: pandas/pandas/core/indexes/multi.py Lines 635 to 646 in 1564a6e

So the current cut-off is a size of 10000. Note that a "minimal reproducible example" does not need to use a small dataframe. I mean you can create with minimal code a large frame, and so trigger the use the hash-based engine. |

I can demonstrate the 3x slowdown in the code below. There are two files attached to this comment with my timeit results (I used IPython's "timeit" magic command). I don't know if one needs to reopen the issue or start a new one. After playing with the indexing for awhile, I could see that the memory management in the new version is really good, I just think the cutoff for triggering it may be too low given the 3x performance penalty (assuming that can't be fixed). The attached timeit results show a roughly 40us execution time on my machine for up to 100,000,000 indices using version 0.19.2 and roughly 120us on my machine for indices greater than 10k and up to 10MM indices using 0.20.2. As I said before, the memory management in the new version is very good, but the 10k cutoff feels low. Multiindices by their very nature get large (i.e, curse of dimensionality), and 10,000 indices corresponds to a really small 100 by 100 multiindex. The difference in memory footprint for 10,000,000 indices was roughly 500Mb between the two versions. I personally would consider anything under 1GB a small price to pay for some additional breathing room and a 66% reduction in execution time. Also, this is just fyi, but I don't know if the memory performance is working exactly how you want it to. I have a decent amount of RAM on my box and I was able to see both engines in action on a large dataset. In version 0.19.2, the dataframe with 100MM indices ate up around 24GB of ram and didn't let it go. The new version peaked at 18GB before settling down to 11GB. At first I thought it was a garbage collection issue because the code is building dataframes in a loop and so I cleaned out everything, ran just the 10BB scenario and got the same result. So I'm wondering if the idea is to make the memory footprint smaller, is the initial ballooning in the engine a problem? It certainly would be for the 1BB case if you had 16GB of ram, since you'd be paging the hdd (and hanging the box) as the footprint approaches 18GB before settling down to 11GB. #%%

import pandas as pd

import numpy as np

#%%

# Construct a dummy dataframe with a 2d multindex of size m-by-n

def dummy_df(m,n):

_M = m

_N = n

tuples = [(i,j) for i in range(_M) for j in range(_N)]

index = pd.MultiIndex.from_tuples(tuples, names=['first', 'second'])

df = pd.DataFrame(np.random.rand(_N*_M,4),columns=['A','B','C','D'],index=index)

return df

#%%

# Test data accessing

n = 1000

for m in [5,9,20,100,500,1000,10000,100000]:

df = dummy_df(m,n)

print(str(m*n) + ' indices =>')

# Time a random value with IPython's timeit magic command

%timeit df.A.loc[3,25] See attached files for timeit results (I decided to inline them after the initial post): Using Pandas version 0.19.2

Using Pandas 0.20.2:

|

|

@jupiterjosh the cutoff is 100k elements ATM. I suppose going to 1M wouldn't hurt. But you would have to show conclusive gains like this: #16346 (comment). You can add an asv for 1M I suppose. @jorisvandenbossche also had a nice graph of this. Which if we can add memory usage would be nice. If you are actually querying 1M times itself then you are doing things in an inefficient manner as well. You can easily use list / boolean indexing. |

|

Thanks @jreback. I will look at adding an asv. Is the 100k cutoff in the 0.20.2 release or a dev build? I hit it at 10k elements in 0.20.2. You're right btw, I will change my indexing strategy. I don't know the pandas code-base, but I was more concerned with the indexing slowdown potentially impacting other pandas functions (joins or groupbys maybe?) since indexing seems to be something that you'd want to be as fast (and atomic) as possible. |

groupbys and joins are running vectorized code. indexing is by definition initiated by the user. you should not be doing repeated single indexing. |

|

Cutoff is currently indeed only 10k as @jupiterjosh finds (code) Figure for timings is here: pandas/pandas/core/indexes/multi.py Lines 635 to 646 in 1564a6e

I am certainly in favor of increasing the cutoff to at least 100k. At the time, I tried to make similar plots for memory, but this was a bit more complicated and didn't get consistent results. |

Code Sample, a copy-pastable example if possible

Problem description

This is my first ever git post, and so please let me know if I can do anything else to make the issue more clear.

This is the gist of a script that I execute in Spyder. It is ugly and could be written to be made faster, but since the "for" loop (which is calling get_item) only took around 10 seconds to run, I didn't worry about cleaning it up. The 3x performance degradation between 0.19.2 to 0.20.2 (and a 30x degradation in 0.20.1) is very disconcerting since get_item is such a low level operation.

[this should explain why the current behaviour is a problem and why the expected output is a better solution.]

Expected Output

The code sample above took around 10 seconds to execute on pandas version 0.19.2. It took over 5 minutes to run in version 0.20.1 and around 30 seconds to run in version 0.20.2. The runtime difference between 0.19.2 and 0.20.2 is still a 3x degradation.

Note the profiler output for get_item using pandas versions 0.19.2, 0.20.1 and 0.20.2 respectively:

1 of 4 -- Output of

pd.show_versions()-- VERSION 0.19.2pandas: 0.19.2

nose: 1.3.7

pip: 9.0.1

setuptools: 27.2.0

Cython: 0.25.2

numpy: 1.12.1

scipy: 0.19.0

statsmodels: 0.8.0

xarray: None

IPython: 5.3.0

sphinx: 1.5.6

patsy: 0.4.1

dateutil: 2.6.0

pytz: 2017.2

blosc: None

bottleneck: 1.2.1

tables: 3.2.2

numexpr: 2.6.2

matplotlib: 2.0.2

openpyxl: 2.4.7

xlrd: 1.0.0

xlwt: 1.2.0

xlsxwriter: 0.9.6

lxml: 3.7.3

bs4: 4.6.0

html5lib: 0.999

httplib2: None

apiclient: None

sqlalchemy: 1.1.9

pymysql: 0.7.9.None

psycopg2: None

jinja2: 2.9.6

boto: 2.46.1

pandas_datareader: None

2 of 3 -- Output of

pd.show_versions()-- VERSION 0.20.1pandas: 0.20.1

pytest: 3.0.7

pip: 9.0.1

setuptools: 27.2.0

Cython: 0.25.2

numpy: 1.12.1

scipy: 0.19.0

xarray: None

IPython: 5.3.0

sphinx: 1.5.6

patsy: 0.4.1

dateutil: 2.6.0

pytz: 2017.2

blosc: None

bottleneck: 1.2.1

tables: 3.2.2

numexpr: 2.6.2

feather: None

matplotlib: 2.0.2

openpyxl: 2.4.7

xlrd: 1.0.0

xlwt: 1.2.0

xlsxwriter: 0.9.6

lxml: 3.7.3

bs4: 4.6.0

html5lib: 0.999

sqlalchemy: 1.1.9

pymysql: 0.7.9.None

psycopg2: None

jinja2: 2.9.6

s3fs: None

pandas_gbq: None

pandas_datareader: None

3 of 3-- Output of

pd.show_versions()-- VERSION 0.20.2pandas: 0.20.2

pytest: 3.0.7

pip: 9.0.1

setuptools: 27.2.0

Cython: 0.25.2

numpy: 1.12.1

scipy: 0.19.0

xarray: None

IPython: 5.3.0

sphinx: 1.5.6

patsy: 0.4.1

dateutil: 2.6.0

pytz: 2017.2

blosc: None

bottleneck: 1.2.1

tables: 3.2.2

numexpr: 2.6.2

feather: None

matplotlib: 2.0.2

openpyxl: 2.4.7

xlrd: 1.0.0

xlwt: 1.2.0

xlsxwriter: 0.9.6

lxml: 3.7.3

bs4: 4.6.0

html5lib: 0.999

sqlalchemy: 1.1.9

pymysql: 0.7.9.None

psycopg2: None

jinja2: 2.9.6

s3fs: None

pandas_gbq: None

pandas_datareader: None

The text was updated successfully, but these errors were encountered: