
Do you know of any packages or frameworks similar to promptfoo? #49

Comments

I also would like to know.

Unfortunately, not really. I built this because there wasn't anything else out there that did what I needed. OpenAI does have an Evals framework you could take a look at. It focuses on testing OpenAI models with heavier test cases, and some of the more advanced test cases require a Python implementation.

What I mainly need is something like this, but in Python and compatible with LangChain. It might be worth cloning and converting it.

As far as I know,

@typpo Thanks for the reference.

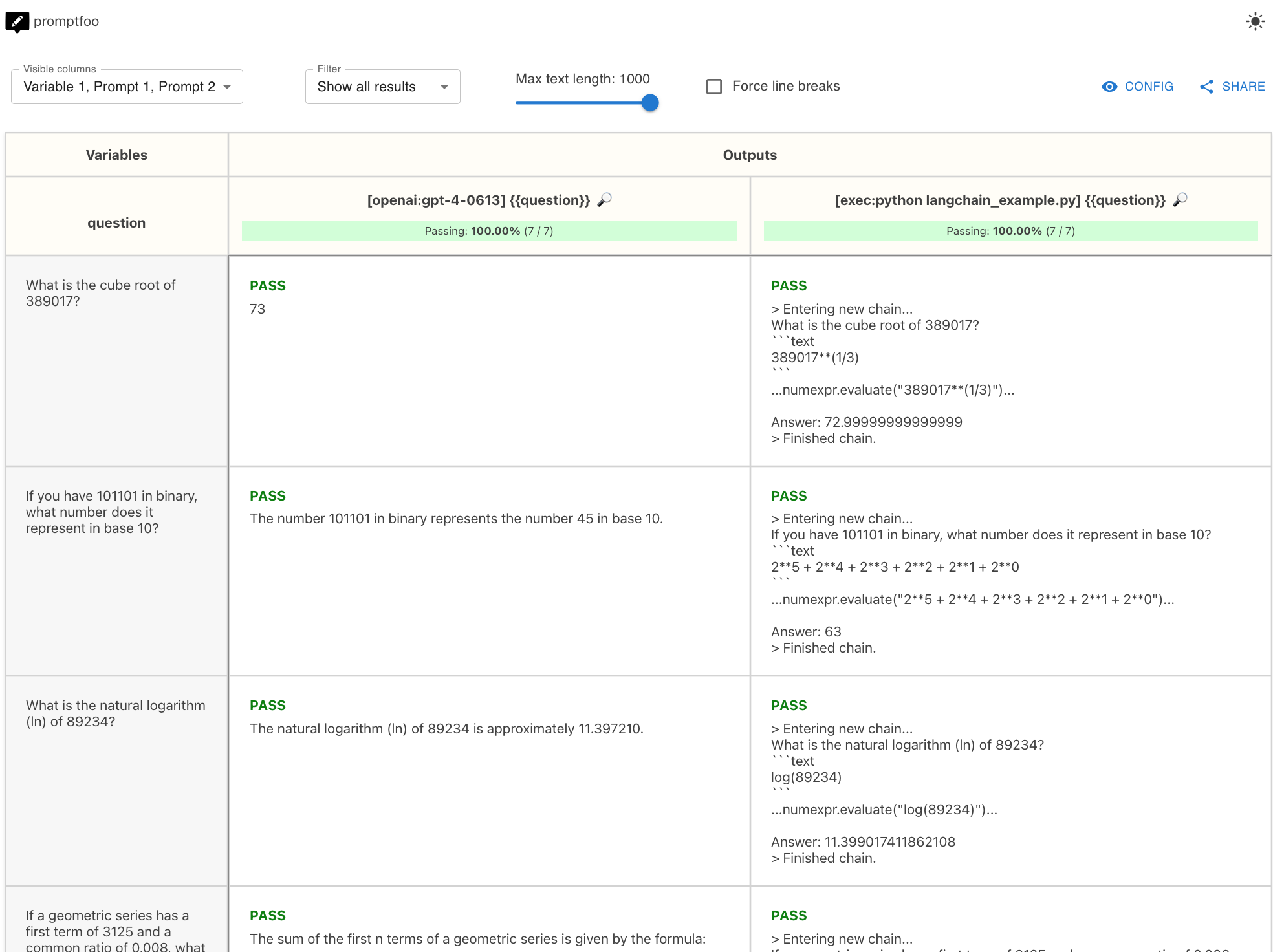

For those of you working in Python, have a look at the end-to-end LLM chain testing documentation. Specifically, I've created an example that shows how to evaluate a Python LangChain implementation. The example compares raw GPT-4 with LangChain's LLM-Math plugin by using the `exec` provider to run the LangChain script:

```yaml
# promptfooconfig.yaml
# ...
providers:
  - openai:chat:gpt-4-0613
  - exec:python langchain_example.py
# ...
```

The result is a side-by-side comparison of GPT-4 and LangChain doing math. Hope this helps with your use cases. If not, I'm interested in learning more. Side note -
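The `exec:python langchain_example.py` provider line above shells out to a Python script. As a rough sketch of what such a script might look like, the following assumes promptfoo passes the rendered prompt as the first command-line argument and takes whatever the script prints to stdout as the model's answer; check the exec provider docs for your promptfoo version. To keep the sketch self-contained, the hypothetical `solve_math` function stands in for LangChain's LLM-Math chain: it only evaluates the first arithmetic expression it finds in the prompt using Python's `ast` module, and it is not LangChain's API.

```python
import ast
import operator
import re
import sys

# Arithmetic operators the evaluator accepts; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _eval(node: ast.AST) -> float:
    """Recursively evaluate a parsed arithmetic expression without eval()."""
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def solve_math(prompt: str) -> str:
    """Hypothetical stand-in for a LangChain math chain (not LangChain's API).

    Picks the longest run of digits/operators in the prompt and evaluates it.
    """
    candidates = [
        c for c in re.findall(r"[0-9.+\-*/()\s]+", prompt) if re.search(r"\d", c)
    ]
    if not candidates:
        return "Could not find an arithmetic expression in the prompt."
    # Drop surrounding whitespace and a sentence-ending period, if any.
    expr = max(candidates, key=len).strip().rstrip(".")
    try:
        return str(_eval(ast.parse(expr, mode="eval")))
    except (SyntaxError, ValueError, ZeroDivisionError) as exc:
        return f"Error evaluating {expr!r}: {exc}"


def main(argv: list[str]) -> None:
    # Assumption: the exec provider passes the prompt as argv[1] and
    # uses whatever this script prints to stdout as the model output.
    if len(argv) < 2:
        print("usage: python langchain_example.py '<prompt>'", file=sys.stderr)
        return
    print(solve_math(argv[1]))


if __name__ == "__main__":
    main(sys.argv)
```

Running `python langchain_example.py "What is 12 * (3 + 4)?"` prints `84`. In a real setup you would replace `solve_math` with a call into your own LangChain chain; the stdin/stdout contract is the only part promptfoo needs to see.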

It looks like it was released recently.

@typpo: First of all, congratulations on the great work building this library. It would be great if we could have some way to directly compare and contrast

I was looking for something just like promptfoo. Do you know of any packages or frameworks similar to this? I would like to consider other options for comparison.
