distributed data parallel, gloo backend works, but nccl deadlock #17745

Comments

I have encountered this problem. Mine was caused by a different CUDA version.

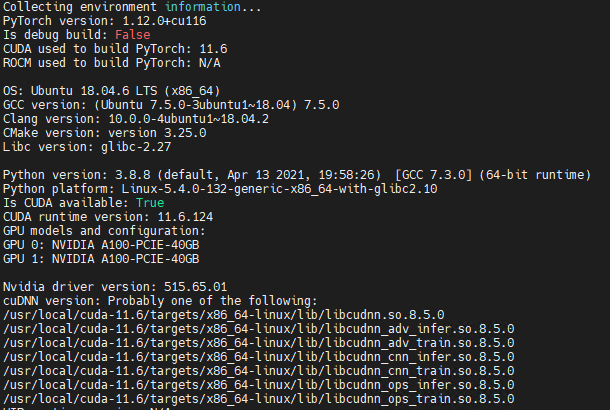

Can you please use https://github.com/pytorch/pytorch/blob/master/torch/utils/collect_env.py to report information about your system?
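The same report can also be produced from Python. This is a minimal sketch, assuming a PyTorch install whose `torch.utils.collect_env` module provides `get_pretty_env_info()`; the wrapper name `environment_report` is hypothetical. From a shell, `python -m torch.utils.collect_env` prints the same information.

```python
def environment_report() -> str:
    """Return the system report generated by torch/utils/collect_env.py."""
    # Imported lazily so this helper can be defined even where PyTorch is absent;
    # PyTorch must be installed when the function is actually called.
    from torch.utils.collect_env import get_pretty_env_info

    # Includes the PyTorch version, the CUDA version PyTorch was built with,
    # the GPU models, driver and cuDNN versions, among other details.
    return get_pretty_env_info()
```

Pasting the output of `print(environment_report())` into the issue makes CUDA version mismatches easy to spot.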

Had a similar issue. Mine was due to not issuing

I have run into the same problem as #14870. Because I cannot reopen that issue, I opened a new one. The GPUs are all idle, though: only 11 MB of memory is in use and no process is running.

The problem is easy to reproduce with this code:

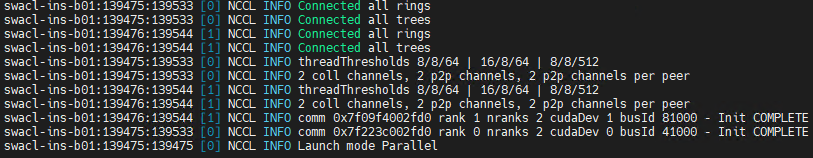

After printing

it hangs.

But it works fine with gloo.
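To compare the two backends without the model and training loop from the screenshot, a stripped-down check along these lines may help. It is a sketch, not the reporter's code: it assumes a single machine with at least `world_size` GPUs for the NCCL run, the helper names (`ddp_env`, `worker`, `main`) and the port 29500 are hypothetical, and it sets `NCCL_DEBUG=INFO` so NCCL logs its initialization steps.

```python
import os


def ddp_env(rank: int, world_size: int, master_addr: str = "127.0.0.1",
            master_port: int = 29500, nccl_debug: bool = True) -> dict:
    """Environment variables read by init_process_group(init_method="env://")."""
    env = {
        "MASTER_ADDR": master_addr,
        "MASTER_PORT": str(master_port),
        "RANK": str(rank),
        "WORLD_SIZE": str(world_size),
    }
    if nccl_debug:
        # Ask NCCL to log its setup; this variable only affects the NCCL backend.
        env["NCCL_DEBUG"] = "INFO"
    return env


def worker(rank: int, world_size: int, backend: str) -> None:
    # torch is imported lazily so ddp_env() can be used without it.
    import torch
    import torch.distributed as dist

    os.environ.update(ddp_env(rank, world_size))
    if backend == "nccl":
        # Bind this process to its own GPU before NCCL communicators are created.
        torch.cuda.set_device(rank)
        device = torch.device("cuda", rank)
    else:
        device = torch.device("cpu")

    dist.init_process_group(backend=backend, init_method="env://")
    t = torch.ones(1, device=device) * (rank + 1)
    dist.all_reduce(t)  # blocks until every rank has contributed its tensor
    print(f"[{backend}] rank {rank}: all_reduce -> {t.item()}", flush=True)
    dist.destroy_process_group()


def main(backend: str, world_size: int = 2) -> None:
    import torch.multiprocessing as mp

    # spawn calls worker(i, world_size, backend) for i in range(nprocs).
    mp.spawn(worker, args=(world_size, backend), nprocs=world_size, join=True)
```

Calling `main("gloo")` and then `main("nccl")` from an `if __name__ == "__main__":` block should make each of the two ranks report 3.0. If only the NCCL run hangs, the `NCCL_DEBUG=INFO` output can help show where initialization stalls.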