
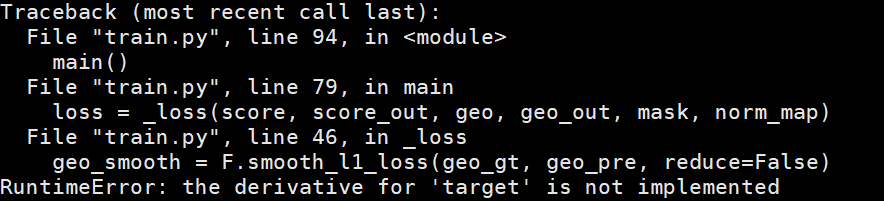

RuntimeError: the derivative for 'target' is not implemented when I use F.smooth_l1_loss(x, y, reduce=False) #3933

Comments

But F.smooth_l1_loss works fine when I use it with two random tensors. I am really confused.

That sounds like a bug. The Variable you pass in for

Are you sure that the target is the second argument and not the first? You feed (GT, PRED), but it should be (PRED, GT).
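A minimal sketch of the call order described above, assuming a current PyTorch release. The signature is smooth_l1_loss(input, target, ...), so the prediction goes first and the ground truth second. In the PyTorch version used in this thread, the gradient with respect to target was apparently not implemented, which is consistent with the error appearing once a tensor that requires grad (the prediction) was passed in the target slot. The names pred and gt are illustrative only, and reduction="none" is used as the current replacement for the deprecated reduce=False.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Illustrative stand-ins: a prediction that is part of the autograd graph,
# and a ground-truth tensor that is plain data.
pred = torch.randn(4, 3, requires_grad=True)
gt = torch.randn(4, 3)

# Order is (input, target): prediction first, ground truth second.
# detach() is a no-op here, but guards against a target that came out of a graph.
# reduction="none" returns one loss value per element (old spelling: reduce=False).
loss = F.smooth_l1_loss(pred, gt.detach(), reduction="none")

# A per-element loss must be reduced to a scalar before calling backward().
loss.sum().backward()

print(loss.shape)       # torch.Size([4, 3])
print(pred.grad.shape)  # torch.Size([4, 3])
```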

@zou3519 You are right; I passed the parameters in the wrong order.

@wadimkehl Thx, man. You are totally right! My bad. I will close this issue.

/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py:2016: UserWarning: Using a target size (torch.Size([32, 784])) that is different to the input size (torch.Size([32, 1, 28, 28])) is deprecated. Please ensure they have the same size.
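The warning above means the target was flattened to 32 x 784 while the input kept its 32 x 1 x 28 x 28 image shape (784 = 28 * 28). A minimal sketch of the usual fix, assuming a current PyTorch release: reshape one side so both shapes match exactly. It uses smooth_l1_loss for consistency with this thread, although the warning itself may have been raised by a different loss function; the tensors are random placeholders, not real data.

```python
import warnings

import torch
import torch.nn.functional as F

# Placeholder tensors with the shapes from the warning.
pred = torch.rand(32, 1, 28, 28, requires_grad=True)
target = torch.rand(32, 784)

# Reshape the target to the input's shape so no broadcasting is involved.
# (Flattening the input with pred.view(pred.size(0), -1) would also work.)
target = target.view_as(pred)

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    loss = F.smooth_l1_loss(pred, target, reduction="none")

# Collect any size-mismatch warnings; none are expected after reshaping.
size_warnings = [w for w in caught if "target size" in str(w.message)]

print(loss.shape)          # torch.Size([32, 1, 28, 28])
print(len(size_warnings))  # 0
```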


When I calculate the smooth L1 loss between two Variables with F.smooth_l1_loss(x, y, reduce=False), I get the following error: RuntimeError: the derivative for 'target' is not implemented

However, when I switch to nn.SmoothL1Loss(reduce=False)(x, y), I have no problem. It's weird, man.
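For reference, the module and functional forms compute the same thing; the module just takes its options in the constructor and is then called with (input, target). A minimal sketch, assuming a current PyTorch release, where reduction="none" replaces the deprecated reduce=False. The manual check assumes the default beta=1.0, for which the per-element loss is 0.5 * d**2 when |d| < 1 and |d| - 0.5 otherwise, with d = input - target.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(8, 5, requires_grad=True)  # prediction (input)
y = torch.randn(8, 5)                      # ground truth (target)

# Module form: options go to the constructor, tensors go to the call.
criterion = nn.SmoothL1Loss(reduction="none")
loss_module = criterion(x, y)

# Functional form with the same options.
loss_functional = F.smooth_l1_loss(x, y, reduction="none")

# Element-wise smooth L1 with the default beta=1.0, written out by hand.
d = x - y
manual = torch.where(d.abs() < 1, 0.5 * d ** 2, d.abs() - 0.5)

print(torch.equal(loss_module, loss_functional))  # True
print(torch.allclose(loss_module, manual))        # True
```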
