max_pool2d CPU forward performance is poor #51393

Labels: module: performance, module: pooling, triaged

NCHW

NHWC

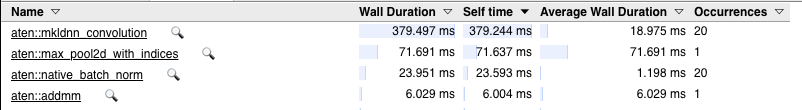

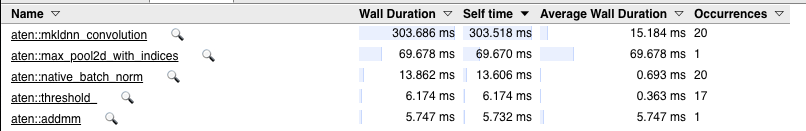

Results from running on my machine (lscpu)

These results are well below both the peak memory/cache bandwidth and the peak GFLOPs of the machine.

`perf` indicates that we're hitting `max_pool2d_with_indices_single_out_frame`. A few things pop out here:

The kernel is not vectorized (`ucomiss` on scalar single-precision floats): `NCHW` is understandably hard, but `NHWC` should be relatively trivial. The quantized op kernel does so: pytorch/aten/src/ATen/native/quantized/cpu/kernels/QuantizedOpKernels.cpp

Line 1096 in cedfa4c
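To illustrate why `NHWC` should be the easy case, here is a minimal sketch of a channels-last max pool. It is not the ATen or quantized kernel. The function name `max_pool2d_nhwc` and its parameters are hypothetical, and it assumes a single image, a square window, no padding or dilation, and `H`, `W` at least as large as `kernel`. It also skips the indices output and does not propagate NaN the way PyTorch does. The point is only that the innermost loop walks contiguous channels, which makes it a natural candidate for auto-vectorization or explicit SIMD.

```cpp
#include <algorithm>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper (not a PyTorch API): max pooling over one image stored
// as NHWC, i.e. element (h, w, c) lives at input[(h * W + w) * C + c].
// Assumes H >= kernel, W >= kernel, stride >= 1, no padding, no dilation.
std::vector<float> max_pool2d_nhwc(const std::vector<float>& input,
                                   std::size_t H, std::size_t W, std::size_t C,
                                   std::size_t kernel, std::size_t stride) {
  const std::size_t out_h = (H - kernel) / stride + 1;
  const std::size_t out_w = (W - kernel) / stride + 1;

  // Start every output element at -inf so any input value replaces it.
  std::vector<float> output(out_h * out_w * C,
                            -std::numeric_limits<float>::infinity());

  for (std::size_t oh = 0; oh < out_h; ++oh) {
    for (std::size_t ow = 0; ow < out_w; ++ow) {
      float* out = output.data() + (oh * out_w + ow) * C;
      for (std::size_t kh = 0; kh < kernel; ++kh) {
        for (std::size_t kw = 0; kw < kernel; ++kw) {
          const std::size_t ih = oh * stride + kh;
          const std::size_t iw = ow * stride + kw;
          const float* in = input.data() + (ih * W + iw) * C;
          // Contiguous, dependency-free loop over channels: each iteration
          // is an independent elementwise max, so it maps onto SIMD lanes.
          for (std::size_t c = 0; c < C; ++c) {
            out[c] = std::max(out[c], in[c]);
          }
        }
      }
    }
  }
  return output;
}
```

In `NCHW` the same window is spread over rows that are `W` elements apart and overlapping windows share inputs, so the reduction does not map onto contiguous vector loads as directly.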

cc @VitalyFedyunin @ngimel @heitorschueroff
