[INSTANCENORM] Instance Normalization ignores track_running_stats=True when exporting to ONNX. #72057

Labels

module: onnx

Related to torch.onnx

onnx-needs-info

needs information from the author / reporter before the ONNX team can take action

triaged

This issue has been looked at by a team member, and triaged and prioritized into an appropriate module

🐛 Describe the bug

Hi,

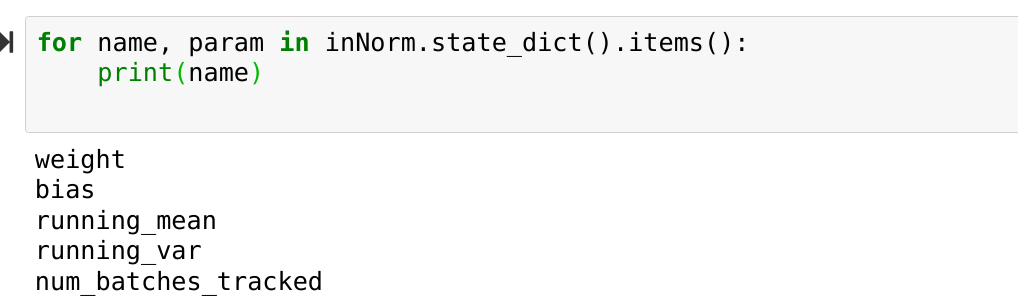

I was exporting https://github.com/yunjey/stargan to ONNX. The model uses Instance Normalization layers with `track_running_stats=True`. In this case each layer keeps four tensors: the running mean and running variance (stored as buffers), plus the weight and bias (learnable parameters).
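A quick way to see those tensors is to build one such layer and list its parameters and buffers. This is a minimal sketch that assumes `affine=True` as well (weight and bias only exist in that case); the channel count of 64 is arbitrary.

```python
import torch.nn as nn

# One layer configured like the ones described above; 64 channels is an arbitrary choice.
norm = nn.InstanceNorm2d(64, affine=True, track_running_stats=True)

# Learnable parameters: the tensors that survive the ONNX export.
params = sorted(name for name, _ in norm.named_parameters())
# Buffers: the running statistics (plus a bookkeeping counter) that do not survive it.
buffers = sorted(name for name, _ in norm.named_buffers())

print(params)   # ['bias', 'weight']
print(buffers)  # ['num_batches_tracked', 'running_mean', 'running_var']
```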

Here is a minimal illustration.

However, when I export it to ONNX, only the weight and bias are kept; the running mean and variance are dropped. Can this be fixed?
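The mismatch can be written out as follows. This is a sketch assuming the layer is in eval mode and the input $x$ has shape $(N, C, H, W)$; $\gamma_c, \beta_c$ denote weight and bias, and $\hat{\mu}_c, \hat{\sigma}_c^2$ the running mean and variance. The ONNX `InstanceNormalization` operator only takes `input`, `scale` and `B`, so it has no slot for running statistics and always normalizes with per-sample statistics $\mu_{n,c}, \sigma_{n,c}^2$:

```latex
% Eval-mode nn.InstanceNorm2d(track_running_stats=True): stored running statistics
y_{n,c,h,w} = \gamma_c \, \frac{x_{n,c,h,w} - \hat{\mu}_c}{\sqrt{\hat{\sigma}_c^2 + \epsilon}} + \beta_c

% ONNX InstanceNormalization: statistics recomputed for every sample n and channel c
y_{n,c,h,w} = \gamma_c \, \frac{x_{n,c,h,w} - \mu_{n,c}}{\sqrt{\sigma_{n,c}^2 + \epsilon}} + \beta_c,
\qquad
\mu_{n,c} = \frac{1}{HW} \sum_{h,w} x_{n,c,h,w},
\qquad
\sigma_{n,c}^2 = \frac{1}{HW} \sum_{h,w} \left( x_{n,c,h,w} - \mu_{n,c} \right)^2
```

The two agree only when the running statistics happen to match each sample's own statistics, which is consistent with the large errors observed in ONNX Runtime.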

I also tested the layer with ONNX Runtime, and both the absolute and relative errors against the PyTorch output are large.
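The size of that error can be reproduced without the exporter at all. Below is a NumPy sketch: one function mimics eval-mode PyTorch with running statistics, the other the per-sample statistics of ONNX `InstanceNormalization`. The function names, input shape and running-statistic values are hypothetical and chosen only for illustration.

```python
import numpy as np

EPS = 1e-5  # PyTorch's default eps for InstanceNorm2d


def _per_channel(v):
    # Reshape a (C,) vector so it broadcasts over an (N, C, H, W) input.
    return v[None, :, None, None]


def norm_with_input_stats(x, weight, bias, eps=EPS):
    """Per-sample, per-channel statistics over (H, W), as in ONNX InstanceNormalization."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)  # biased variance (ddof=0)
    return (x - mean) / np.sqrt(var + eps) * _per_channel(weight) + _per_channel(bias)


def norm_with_running_stats(x, weight, bias, running_mean, running_var, eps=EPS):
    """Eval-mode nn.InstanceNorm2d(track_running_stats=True): stored stats replace per-sample ones."""
    x_hat = (x - _per_channel(running_mean)) / np.sqrt(_per_channel(running_var) + eps)
    return x_hat * _per_channel(weight) + _per_channel(bias)


rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=3.0, size=(2, 3, 8, 8))
weight, bias = np.ones(3), np.zeros(3)
# Hypothetical running statistics, chosen only for illustration.
running_mean, running_var = np.zeros(3), np.ones(3)

reference = norm_with_running_stats(x, weight, bias, running_mean, running_var)
per_sample = norm_with_input_stats(x, weight, bias)
max_abs_err = np.abs(reference - per_sample).max()
print(f"max abs error: {max_abs_err:.3f}")
```

With running statistics that differ from the per-sample ones, the maximum absolute error is well above 1. Conversely, feeding a single sample and using that sample's own statistics as the running statistics makes both functions agree.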

Here is the ONNX Runtime output for the instance normalization layer:

Versions

Versions of relevant libraries:

[pip3] numpy==1.19.5

[pip3] torch==1.8.1+cpu

[pip3] torchaudio==0.8.1

[pip3] torchsummary==1.5.1

[pip3] torchvision==0.9.1+cpu

[pip3] torchviz==0.0.1

[conda] Could not collect
