
ResourceExhaustedError after segmentation models update! #167


Hi, I have added one more skip connection (a small modification, but it now requires more memory).

Maybe that was not a good decision, but I received feedback that it helps to improve quality.

It may be a good idea to make this modification optional and restore the original architecture.

Newbies like me usually try libraries like this on Google Colab first, and I don't think we can easily understand and solve this kind of model-vs-GPU-memory problem. As you said, it would be better if it were optional. Could you please let me know when you update it?

```python
model = sm.FPN(backbone_name='resnext101',
               classes=NUM_CLASSES,
               input_shape=INPUT_SHAPE,
               encoder_weights="imagenet",
               activation="softmax",
               encoder_freeze=True,
               pyramid_aggregation='sum')
```

Thank you very much! :-)

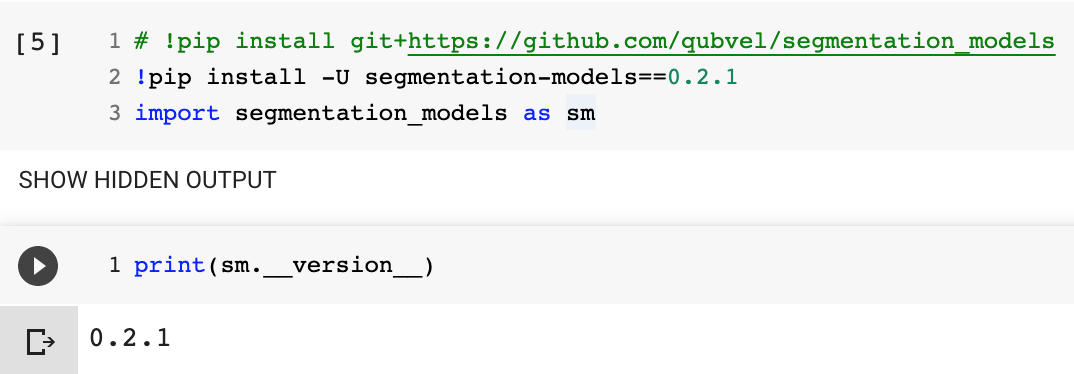

I have mentioned this in the README: you need version 0.2.1.

```bash
pip install -U segmentation-models==0.2.1
```

Still the same problem.

```python
# !pip install -U segmentation-models==0.2.1
from keras import optimizers  # needed for optimizers.rmsprop below
import segmentation_models as sm

# NUM_CLASSES, INPUT_SHAPE, categorical_focal_loss, zipped_train_generator,
# X_train, X_validation, y_validation and callbacks_list come from earlier notebook cells.

# Load model
model = sm.FPN(backbone_name='resnext101',
               classes=NUM_CLASSES,
               input_shape=INPUT_SHAPE,
               encoder_weights="imagenet",
               activation="softmax",
               encoder_freeze=True)

model.compile(optimizer=optimizers.rmsprop(lr=0.00032),
              loss=categorical_focal_loss(),
              metrics=["accuracy", sm.metrics.iou_score])

NUM_BATCH = 32
NUM_EPOCH = 100

history = model.fit_generator(
    generator=zipped_train_generator,
    validation_data=(X_validation, y_validation),
    steps_per_epoch=len(X_train) // NUM_BATCH,
    callbacks=callbacks_list,
    verbose=1,
    epochs=NUM_EPOCH)
```

```
Epoch 1/100
---------------------------------------------------------------------------
ResourceExhaustedError Traceback (most recent call last)
<ipython-input-17-d397d21e8395> in <module>()
----> 1 get_ipython().run_cell_magic('time', '', 'history = model.fit_generator(\n generator = zipped_train_generator,\n validation_data=(X_validation, y_validation),\n steps_per_epoch=len(X_train) // NUM_BATCH,\n callbacks= callbacks_list,\n verbose = 1,\n epochs = NUM_EPOCH)')

9 frames
/usr/local/lib/python3.6/dist-packages/IPython/core/interactiveshell.py in run_cell_magic(self, magic_name, line, cell)
2115 magic_arg_s = self.var_expand(line, stack_depth)
2116 with self.builtin_trap:
-> 2117 result = fn(magic_arg_s, cell)
2118 return result
2119

</usr/local/lib/python3.6/dist-packages/decorator.py:decorator-gen-60> in time(self, line, cell, local_ns)

/usr/local/lib/python3.6/dist-packages/IPython/core/magic.py in <lambda>(f, *a, **k)
186 # but it's overkill for just that one bit of state.
187 def magic_deco(arg):
--> 188 call = lambda f, *a, **k: f(*a, **k)
189
190 if callable(arg):

/usr/local/lib/python3.6/dist-packages/IPython/core/magics/execution.py in time(self, line, cell, local_ns)
1191 else:
1192 st = clock2()
-> 1193 exec(code, glob, local_ns)
1194 end = clock2()
1195 out = None

<timed exec> in <module>()

/usr/local/lib/python3.6/dist-packages/keras/legacy/interfaces.py in wrapper(*args, **kwargs)
89 warnings.warn('Update your `' + object_name + '` call to the ' +
90 'Keras 2 API: ' + signature, stacklevel=2)
---> 91 return func(*args, **kwargs)
92 wrapper._original_function = func
93 return wrapper

/usr/local/lib/python3.6/dist-packages/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
1416 use_multiprocessing=use_multiprocessing,
1417 shuffle=shuffle,
-> 1418 initial_epoch=initial_epoch)
1419
1420 @interfaces.legacy_generator_methods_support

/usr/local/lib/python3.6/dist-packages/keras/engine/training_generator.py in fit_generator(model, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
215 outs = model.train_on_batch(x, y,
216 sample_weight=sample_weight,
--> 217 class_weight=class_weight)
218
219 outs = to_list(outs)

/usr/local/lib/python3.6/dist-packages/keras/engine/training.py in train_on_batch(self, x, y, sample_weight, class_weight)
1215 ins = x + y + sample_weights
1216 self._make_train_function()
-> 1217 outputs = self.train_function(ins)
1218 return unpack_singleton(outputs)
1219

/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py in __call__(self, inputs)
2713 return self._legacy_call(inputs)
2714
-> 2715 return self._call(inputs)
2716 else:
2717 if py_any(is_tensor(x) for x in inputs):

/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py in _call(self, inputs)
2673 fetched = self._callable_fn(*array_vals, run_metadata=self.run_metadata)
2674 else:
-> 2675 fetched = self._callable_fn(*array_vals)
2676 return fetched[:len(self.outputs)]
2677

/usr/local/lib/python3.6/dist-packages/tensorflow/python/client/session.py in __call__(self, *args, **kwargs)
1456 ret = tf_session.TF_SessionRunCallable(self._session._session,
1457 self._handle, args,
-> 1458 run_metadata_ptr)
1459 if run_metadata:
1460 proto_data = tf_session.TF_GetBuffer(run_metadata_ptr)

ResourceExhaustedError: OOM when allocating tensor with shape[32,512,56,56] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc
[[{{node training/RMSprop/gradients/conv2d_1057/convolution_grad/Conv2DBackpropInput}}]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
```
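The shape in the OOM message can be turned into a size directly, which helps when judging how far over budget a step is. This is a minimal sketch of plain arithmetic, assuming `type float` means 32-bit floats (4 bytes per element); `tensor_mib` is a hypothetical helper, not part of Keras or TensorFlow. Note that the leading 32 in the shape matches `NUM_BATCH`.

```python
def tensor_mib(shape, bytes_per_element=4):
    """Size in MiB of a dense tensor with the given shape (float32 by default)."""
    n_elements = 1
    for dim in shape:
        n_elements *= dim
    return n_elements * bytes_per_element / 2**20

# Shape from the OOM message: batch 32, 512 channels, 56 x 56 feature map
print(tensor_mib((32, 512, 56, 56)))  # 196.0
# The same tensor with a batch of 8
print(tensor_mib((8, 512, 56, 56)))   # 49.0
```

This is only the single allocation that failed; a training step keeps many tensors of this kind alive at once (forward activations saved for backprop, their gradients, the weights and the optimizer state), so the total footprint is much larger than this one number.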


Check the version.
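One way to confirm which version actually got installed is a minimal sketch with the standard library's `importlib.metadata` (Python 3.8+; the Python 3.6 runtime in the traceback above does not have it, and there `!pip show segmentation-models` reports the same information). `installed_version` is a hypothetical helper; the distribution name `segmentation-models` is taken from the pip command in this thread.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


REQUIRED = "0.2.1"  # the version the README asks for, per this thread
current = installed_version("segmentation-models")
if current != REQUIRED:
    print(f"segmentation-models {current} is installed, expected {REQUIRED}")
```

Since packages have to be reinstalled in every new Colab session, it is worth running a check like this after each reinstall.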


You can also try a smaller batch_size, or reduce the image spatial size so the model fits into memory.
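Both suggestions work because, in a fully convolutional model such as FPN or Unet, activation memory grows linearly with the batch size and with the number of pixels, i.e. quadratically with the side of a square input. A minimal sketch of that scaling rule; `relative_activation_memory` is a hypothetical helper and the 448 to 224 resize is an illustrative example, not a size from this thread.

```python
def relative_activation_memory(batch_ratio, side_ratio):
    """Factor by which activation memory changes in a fully convolutional net.

    batch_ratio: new_batch / old_batch (linear effect)
    side_ratio:  new_side / old_side for square inputs (quadratic effect,
                 because every feature map shrinks in both height and width)
    """
    return batch_ratio * side_ratio ** 2


# Batch 32 -> 16 at the same image size halves activation memory
print(relative_activation_memory(16 / 32, 1.0))    # 0.5
# Same batch, input side halved (hypothetical 448 -> 224) quarters it
print(relative_activation_memory(1.0, 224 / 448))  # 0.25
```

Weights and optimizer state do not depend on batch or image size, so total GPU memory shrinks somewhat less than this factor.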


Actually, it does not look like a CUDA out-of-memory error; usually that says something like "not enough memory for tensor with shape ...".

The first traceback is correct, but the second one looks different.

Oh, it was just the mobile version displaying it incorrectly; they are the same. :)

Yeah, that's strange.

Hello, with version 1.0.0, FPN(resnet34), FPN(resnet18) and FPN(inception v3) all run out of memory (OOM). They worked with 0.2.1, so maybe something else is going on, don't you think? Thanks for your work,

Hi, there is an option that controls how the feature pyramid is aggregated:
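The snippet for this option is not preserved here, but the `pyramid_aggregation` argument used earlier in the thread is the one that controls it. As an assumption based on our reading of the 1.0 FPN (not confirmed in this thread): each of the four pyramid levels yields a 128-channel map (half of the default `pyramid_block_filters=256`) upsampled to a common resolution, `'concat'` stacks them along the channel axis and `'sum'` adds them element-wise. If that holds, it would be consistent with the 512 channels in the OOM shape `[32,512,56,56]`. The sketch only counts channels; `aggregated_channels` is a hypothetical helper.

```python
def aggregated_channels(aggregation, levels=4, filters_per_level=128):
    """Channels after merging FPN pyramid levels (assumed 4 levels x 128 filters)."""
    if aggregation == "concat":
        return levels * filters_per_level  # maps stacked along the channel axis
    if aggregation == "sum":
        return filters_per_level           # maps added element-wise
    raise ValueError(f"unknown aggregation: {aggregation!r}")


for mode in ("concat", "sum"):
    print(mode, aggregated_channels(mode))
# concat 512
# sum 128
```

Under these assumptions, switching to `'sum'` makes the merged feature map (and the convolution that follows it) four times smaller in the channel dimension, which is why it is the first thing to try when FPN no longer fits.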


I tried,

Hi, does your model use multiple GPUs in training (e.g. `keras.utils.multi_gpu_model`)? I have the same problem when using Unet(efficientnetb4/b5/b6) with a batch_size > 12. My images are all 320 × 320. It works if I use a batch_size smaller than 10. Thank you, Chen

Hi, no, my model doesn't use `keras.utils.multi_gpu_model`. Mathieu.

Hi! I was working with FPN and a 'resnext101' backbone on Google Colab. I trained the model, ran lots of experiments, and the results were very good. Today, after I updated segmentation models (actually, I have to reinstall it every time I use Google Colab), I got a ResourceExhaustedError (out of memory). By the way, I tried Unet with a 'vgg16' backbone and everything went well. I wonder why FPN with the resnext101 backbone no longer fits in GPU memory when it did two days ago.

Thank you very much, @qubvel.

Edit1:

- FPN with `vgg16` backbone: OK
- FPN with `vgg19` backbone: OK
- FPN with `resnet34` backbone: OK
- FPN with `resnet50` backbone: NOT OK (same ResourceExhaustedError)
- FPN with `resnet101` backbone: NOT OK (same ResourceExhaustedError)
- FPN with `resnext50` backbone: NOT OK (same ResourceExhaustedError)

Edit2:

The related StackOverflow question.
