Upgrading from k8s 1.23.14 to 1.23.15 fails in Rancher 2.6.9 #40280

Comments

|

Seems worker nodes are stuck at 1.23.14 but control-plane and etcd nodes are upgraded to 1.23.15... |

|

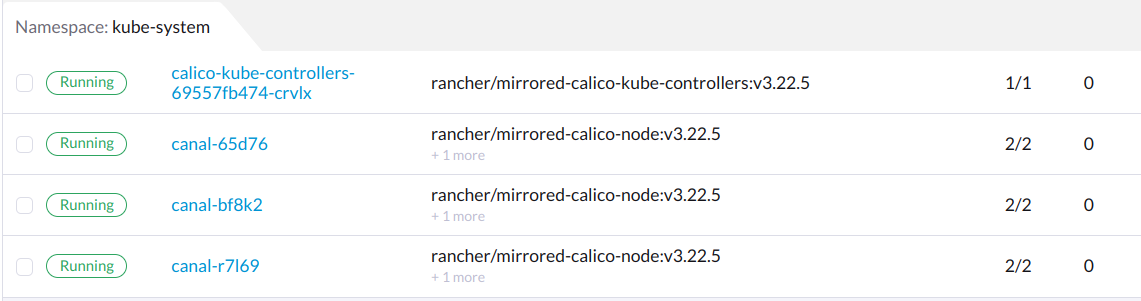

I can confirm that I have the same issue. I tried several times with both Rancher 2.6.9 and 2.7.0 to upgrade to 1.23.15 as well as 1.24.9. Under "Workload" --> "Jobs" in the kube-system namespace, you can see that the Job rke-network-plugin-deploy-job keeps failing with the messages mentioned in projectcalico/calico#6258. However, not all of our clusters have this issue: all but one upgraded fine. |
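For anyone who prefers to check this from the command line rather than the UI, here is a minimal sketch. It assumes you have saved the output of `kubectl get jobs -n kube-system -o json` and parsed it into a dict; the helper name `failed_jobs` and the sample data are hypothetical and only illustrate the shape of a Job list.

```python
def failed_jobs(job_list):
    """Return names of Jobs that report failed pods or a Failed condition.

    `job_list` is assumed to be the parsed JSON of a Kubernetes Job list,
    e.g. from `kubectl get jobs -n kube-system -o json`.
    """
    names = []
    for item in job_list.get("items", []):
        status = item.get("status") or {}
        # status.failed counts pods of the Job that ended in phase Failed.
        has_failed_pods = (status.get("failed") or 0) > 0
        # A Job that has given up carries a condition of type "Failed".
        has_failed_condition = any(
            cond.get("type") == "Failed" and cond.get("status") == "True"
            for cond in (status.get("conditions") or [])
        )
        if has_failed_pods or has_failed_condition:
            names.append(item["metadata"]["name"])
    return names


# Hypothetical sample data, not taken from a real cluster.
sample_jobs = {
    "items": [
        {
            "metadata": {"name": "rke-network-plugin-deploy-job"},
            "status": {"failed": 3},
        },
        {
            "metadata": {"name": "rke-coredns-addon-deploy-job"},
            "status": {"succeeded": 1},
        },
    ]
}

print(failed_jobs(sample_jobs))
```

If rke-network-plugin-deploy-job shows up in the result, the pod logs of that Job should contain the error messages referenced above.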

|

We can confirm the behaviour while upgrading from 1.24.4 to 1.24.9 with Rancher 2.7.1. |

|

Confirmed: editing these CRDs allowed the update to complete successfully with RKE 1.4.2 and k8s 1.21.5 => 1.24.9. |

|

I am trying to figure out this problem. Considering that it may be caused by the upgrade of CNI components, I simulated the version upgrade by adjusting KDM (https://github.com/rancher/kontainer-driver-metadata). Rancher: v2.6.10

I haven't found a way to reproduce it yet. Is the CNI using the default configuration, or has it been changed since the initial installation? Maybe this is a clue? |

|

I tried to follow these steps to test but did not reproduce the issue. The cluster can be upgraded. Rancher: v2.6.9 KDM1: https://raw.githubusercontent.com/rancher/kontainer-driver-metadata/11-28-2022/data/data.json Steps:

|

|

There are RKE1 clusters set up 2 years ago that used v1beta1 CRDs, and their spec.preserveUnknownFields value is set to true (v1beta1 defaults to true). workaround: P.S. upstream already added a backwards-compatible fix in projectcalico/calico#6242, but only in 3.24. |

|

Using the v1beta1 CRD as a clue, I did this test. I would check the setting of preserveUnknownFields. Calico uses v1 CRDs since 3.15. Rancher: v2.6.10
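To make the check concrete, here is a minimal sketch of how one might list the Calico CRDs that still have preserveUnknownFields set to true. It assumes the input is the parsed output of `kubectl get crd -o json`; the helper name `crds_preserving_unknown_fields` and the sample data are hypothetical. It only reports the CRDs and does not change anything on the cluster.

```python
CALICO_GROUP = "crd.projectcalico.org"


def crds_preserving_unknown_fields(crd_list, group=CALICO_GROUP):
    """Return sorted names of CRDs in `group` with spec.preserveUnknownFields true.

    `crd_list` is assumed to be the parsed JSON of a CustomResourceDefinition
    list, e.g. from `kubectl get crd -o json`.
    """
    names = []
    for item in crd_list.get("items", []):
        spec = item.get("spec") or {}
        # Only look at CRDs in the requested API group (Calico by default).
        if spec.get("group") != group:
            continue
        # The field is omitted when false, so only an explicit true counts.
        if spec.get("preserveUnknownFields") is True:
            names.append(item["metadata"]["name"])
    return sorted(names)


# Hypothetical sample data, not taken from a real cluster.
sample_crds = {
    "items": [
        {
            "metadata": {"name": "ippools.crd.projectcalico.org"},
            "spec": {"group": "crd.projectcalico.org", "preserveUnknownFields": True},
        },
        {
            "metadata": {"name": "felixconfigurations.crd.projectcalico.org"},
            "spec": {"group": "crd.projectcalico.org"},
        },
        {
            "metadata": {"name": "widgets.example.com"},
            "spec": {"group": "example.com", "preserveUnknownFields": True},
        },
    ]
}

print(crds_preserving_unknown_fields(sample_crds))
```

On a cluster originally created with v1beta1 CRDs, any names this returns are the CRDs worth inspecting before retrying the upgrade.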

When the upgrade fails, I can see that the I run the following command, edit the cluster on the UI, and click the save button to upgrade the cluster successfully. The Calico v3.22.5 version may have some special changes. From the information provided, all RKEs that fail to upgrade use Calico v3.22.5. Never mind, there should be a solution here. If anyone finds other errors in |

|

Calico 3.22 CRD introduced new fields with default values, so have to set |

I can confirm this workaround worked in our Rancher environment, thanks. |

|

We used the workaround provided by @niusmallnan to go from K8s v1.22.9 and Rancher v2.6.5 -> K8s v1.24.9 Rancher v2.7.1. |

|

I can confirm that this worked as well. Thank you so much! |

|

/forwardport v2.7.2 |

|

Same issue here, upgrading a cluster from v1.23.14 to v1.23.15 on Rancher 2.6.10. Can confirm we have an RKE1 cluster from 3 years ago. |

|

Steps to validate:

The job cc: @rishabhmsra |

|

Validated using below steps:

Result:

|

|

Validated again using below steps:

Result :

|

In Cluster Manager I tried to upgrade Kubernetes from 1.23.14 to 1.23.15, but the process ends with "upgrade failed" and an error message (see screenshots). However, when I edit the cluster again after the failure, to my surprise 1.23.14 is still a selectable option. If I choose that and hit upgrade (downgrade) again, the cluster becomes green again. However, the cluster info now says 1.23.15 and all nodes show 1.23.15. Is this a known bug?
