Train a categorical model #39


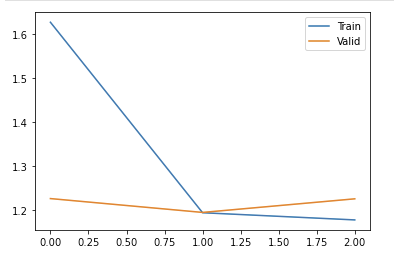

I got the first working end-to-end version going: training a categorical net, then sampling from it with a 2D correlated random field. So far I have not trained it on the full data or with enough bins, but this is approximately what the output looks like. Next steps are: 1) Copy changes into src; 2) Train on full data with more bins; 3) Evaluate it; 4) Improve the net by adding more variables, etc.


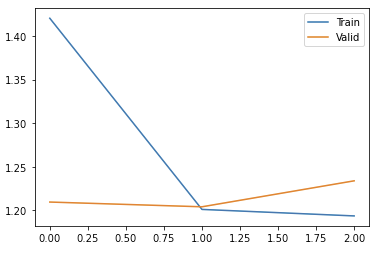

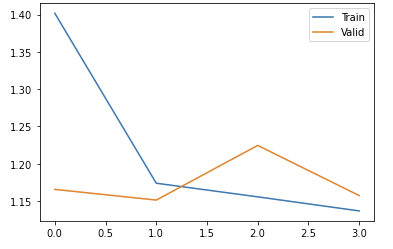

Adding orography and the LSM (3 + 4) on their own doesn't change the skill at all yet. Maybe, without additional input variables, this just isn't enough information. I would still have expected orography to help a little. Hmm. But maybe the uncertainty is also just way too large for this to have any impact? Let's add both and then add some more variables, one by one.


Also, try the better loss!!!

It might make sense to also look into a MetNet-style categorical model. I am pretty sure that this would work. It would be a) a good baseline and b) a good fallback option if we never get a GAN to train.
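For reference, a minimal sketch of what the categorical setup could look like: the continuous target is binned into classes and the net is trained with a per-pixel cross-entropy. The bin edges and the function names `to_class_index` and `cross_entropy` are assumptions for illustration only. The actual bins are not fixed in this issue, and the plain NumPy loss just stands in for whatever the training framework provides.

```python
import numpy as np

# Hypothetical bin edges (e.g. in mm/h); the real bins are not specified here.
BIN_EDGES = np.array([0.1, 1.0, 5.0, 20.0])


def to_class_index(precip):
    """Map continuous values to integer classes 0..len(BIN_EDGES)."""
    # np.digitize returns i such that BIN_EDGES[i-1] <= x < BIN_EDGES[i].
    return np.digitize(precip, BIN_EDGES)


def cross_entropy(logits, target):
    """Mean cross-entropy; logits has shape (..., n_bins), target is (...) ints."""
    # Numerically stable log-softmax over the last (bin) axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick the log-probability of the true class at every pixel.
    picked = np.take_along_axis(log_probs, target[..., None], axis=-1)
    return -picked.mean()
```

With four edges this gives five classes, so the net would need five output channels per pixel.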

I would like to try sampling from the probability output with a correlated random field as well. This might actually end up looking quite realistic and, I suspect, hard to beat score-wise with a GAN.
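A rough sketch of how this sampling could work, not necessarily how it ends up in src: draw a smooth Gaussian random field, map it to values in (0, 1), and use those values for per-pixel inverse-CDF sampling from the predicted bin probabilities. Neighbouring pixels then pick similar quantiles instead of independent ones. The names `correlated_uniform_field`, `sample_bins` and the `length_scale` parameter are made up for this example, and the FFT-based Gaussian smoothing (with periodic boundaries) is just one simple way to get a correlated field.

```python
import math

import numpy as np


def correlated_uniform_field(shape, length_scale, rng):
    """Spatially correlated 2D field with values in (0, 1)."""
    noise = rng.standard_normal(shape)
    ky = np.fft.fftfreq(shape[0])[:, None]
    kx = np.fft.fftfreq(shape[1])[None, :]
    # Gaussian low-pass filter in Fourier space (implies periodic boundaries).
    filt = np.exp(-2.0 * (np.pi * length_scale) ** 2 * (kx ** 2 + ky ** 2))
    field = np.fft.ifft2(np.fft.fft2(noise) * filt).real
    # Empirically standardise so the values are roughly standard normal.
    field = (field - field.mean()) / field.std()
    # Map to (0, 1) with the standard normal CDF.
    cdf = np.vectorize(lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))))
    return cdf(field)


def sample_bins(probs, u):
    """Inverse-CDF sampling; probs is (H, W, n_bins), u is (H, W) in (0, 1)."""
    cum = np.cumsum(probs, axis=-1)
    # Index of the first bin whose cumulative probability reaches u.
    idx = (u[..., None] > cum).sum(axis=-1)
    # Guard against round-off in the last cumulative value.
    return np.minimum(idx, probs.shape[-1] - 1)
```

Usage would be something like `sample_bins(probs, correlated_uniform_field(probs.shape[:2], 4.0, np.random.default_rng(0)))`, where `probs` is the softmax output of the net. A larger `length_scale` gives smoother, more coherent samples.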
