
What you observe is the effect of backpressure. The cache operator has a default prefetch of 256. The next (replenishing) request is `256 - (256 >> 2)`, which is 192. So two requests happen, first 256 and then 192, for a total of 448.
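The arithmetic can be checked directly. The sketch below uses a hypothetical helper (`ReplenishMath` is not a Reactor class). It assumes the replenish size follows the `prefetch - (prefetch >> 2)` rule described above.

The parentheses matter. In Java, `>>` binds more loosely than `-`, so writing `256 - 256 >> 2` without them evaluates to `(256 - 256) >> 2`, which is `0`.

```java
// Hypothetical helper, not part of Reactor: reproduces the prefetch arithmetic above.
final class ReplenishMath {
    private ReplenishMath() {}

    /** Size of each replenishing request: prefetch minus a quarter of prefetch. */
    static int replenishSize(int prefetch) {
        // Parentheses are required: '-' binds tighter than '>>' in Java.
        return prefetch - (prefetch >> 2);
    }

    /** Total demand after the initial request plus `replenishRounds` replenishing requests. */
    static long totalRequested(int prefetch, int replenishRounds) {
        return prefetch + (long) replenishRounds * replenishSize(prefetch);
    }

    public static void main(String[] args) {
        System.out.println(replenishSize(256));     // 192
        System.out.println(totalRequested(256, 1)); // 448
        System.out.println(256 - 256 >> 2);         // 0, parsed as (256 - 256) >> 2
    }
}
```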

You may wonder why you don't see more elements. The reason is the way you use the shared (cached) flux: it blocks itself inside flatMap. The flatMap operator also has built-in backpressure. Once flatMap stops requesting, the cache operator stops receiving demand as well, and both operators stop requesting from upstream.

Why might flatMap stop requesting more? The flatMap operator has a default concurrency of 256. This means up to 256 inner streams can run at the same time and be merged within that operator. Whenever an inner publisher completes, flatMap may request one more element from upstream. If no inner source completes, flatMap does not request more. You can easily check this behavior with the following example:
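As a rough stand-in (not the original snippet), here is a toy model in plain Java with no Reactor dependency. `FlatMapDemandModel` and `elementsReceived` are hypothetical names. The model counts how many upstream elements reach flatMap under the rule just described: flatMap first requests `concurrency` elements, then asks for one more only when an inner publisher completes. It is a simplification for illustration, not Reactor's implementation.

```java
// Toy model of flatMap's demand, not Reactor's implementation.
final class FlatMapDemandModel {
    private FlatMapDemandModel() {}

    /**
     * Returns how many upstream elements flatMap receives (and maps to inner publishers).
     *
     * @param upstreamSize   number of elements the upstream can emit
     * @param concurrency    flatMap's concurrency (Reactor's default is 256)
     * @param innersComplete whether each inner publisher completes after emitting
     */
    static int elementsReceived(int upstreamSize, int concurrency, boolean innersComplete) {
        // Initial request(concurrency), capped by what upstream can deliver.
        int requested = Math.min(concurrency, upstreamSize);
        int received = 0;
        while (received < requested) {
            received++; // upstream delivers one element; flatMap subscribes to its inner
            if (innersComplete) {
                // The inner completes and frees a slot, so flatMap requests one more element.
                requested = Math.min(requested + 1, upstreamSize);
            }
        }
        return received;
    }

    public static void main(String[] args) {
        // 257 elements (indices 0..256), inners never complete: index 256 never arrives.
        System.out.println(elementsReceived(257, 256, false)); // 256
        // Raising concurrency to 257 lets the last element through.
        System.out.println(elementsReceived(257, 257, false)); // 257
    }
}
```

In Reactor itself, the concurrency is the second argument of the `flatMap(mapper, concurrency)` overload.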

You will see that onNext(256) never happens. That is expected: it is the backpressure control implemented in flatMap. To change that, you can adjust the concurrency value:

Now you should see the last element and onComplete after that.

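So what is wrong in your case? You are flatMapping the same flux into itself. Each inner subscription to that flux completes only when the flux itself completes. However, the flux can only complete once flatMap requests the remaining elements, and flatMap requests more only when an inner subscription completes. Neither can happen first, so all the flattened variants run forever: you have a deadlock.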
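To see why the boundary falls exactly between 448 and 449, the toy model below combines the two mechanisms. It is plain Java with hypothetical names (`SelfFlatMapModel`, `completes`).

It rests on an assumption made for illustration only. The assumption is that `cache` asks its source for another `limit` elements only after the outer flatMap subscriber (the slowest consumer) has taken another `limit` elements. Under that assumption, the model completes for 448 elements and stalls for 449, matching what the issue reports. It is a sketch, not a description of Reactor's internals.

```java
// Toy model of `cached.flatMap(i -> cached)`; not Reactor's implementation.
// ASSUMPTION (for illustration): cache replenishes by `limit` only after the outer
// flatMap subscriber has consumed another `limit` elements.
final class SelfFlatMapModel {
    private SelfFlatMapModel() {}

    /** Returns true if the pipeline completes, false if it stalls (deadlock). */
    static boolean completes(int sourceSize, int prefetch, int concurrency) {
        int limit = prefetch - (prefetch >> 2); // replenish size, 192 for prefetch 256
        long requested = prefetch;              // cache's initial request to the source
        long nextReplenishAt = limit;           // outer consumption that triggers a replenish
        while (true) {
            if (requested >= sourceSize) {
                // The source has emitted everything and completed, so the cached flux
                // completes and every inner subscription (the same flux) completes too.
                return true;
            }
            // Inner subscriptions cannot complete before the source does, so the outer
            // flatMap never takes more than `concurrency` elements.
            long outerConsumed = Math.min(requested, concurrency);
            if (outerConsumed < nextReplenishAt) {
                return false; // no further demand reaches the source: deadlock
            }
            requested += limit;
            nextReplenishAt += limit;
        }
    }

    public static void main(String[] args) {
        System.out.println(completes(448, 256, 256)); // true
        System.out.println(completes(449, 256, 256)); // false
    }
}
```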

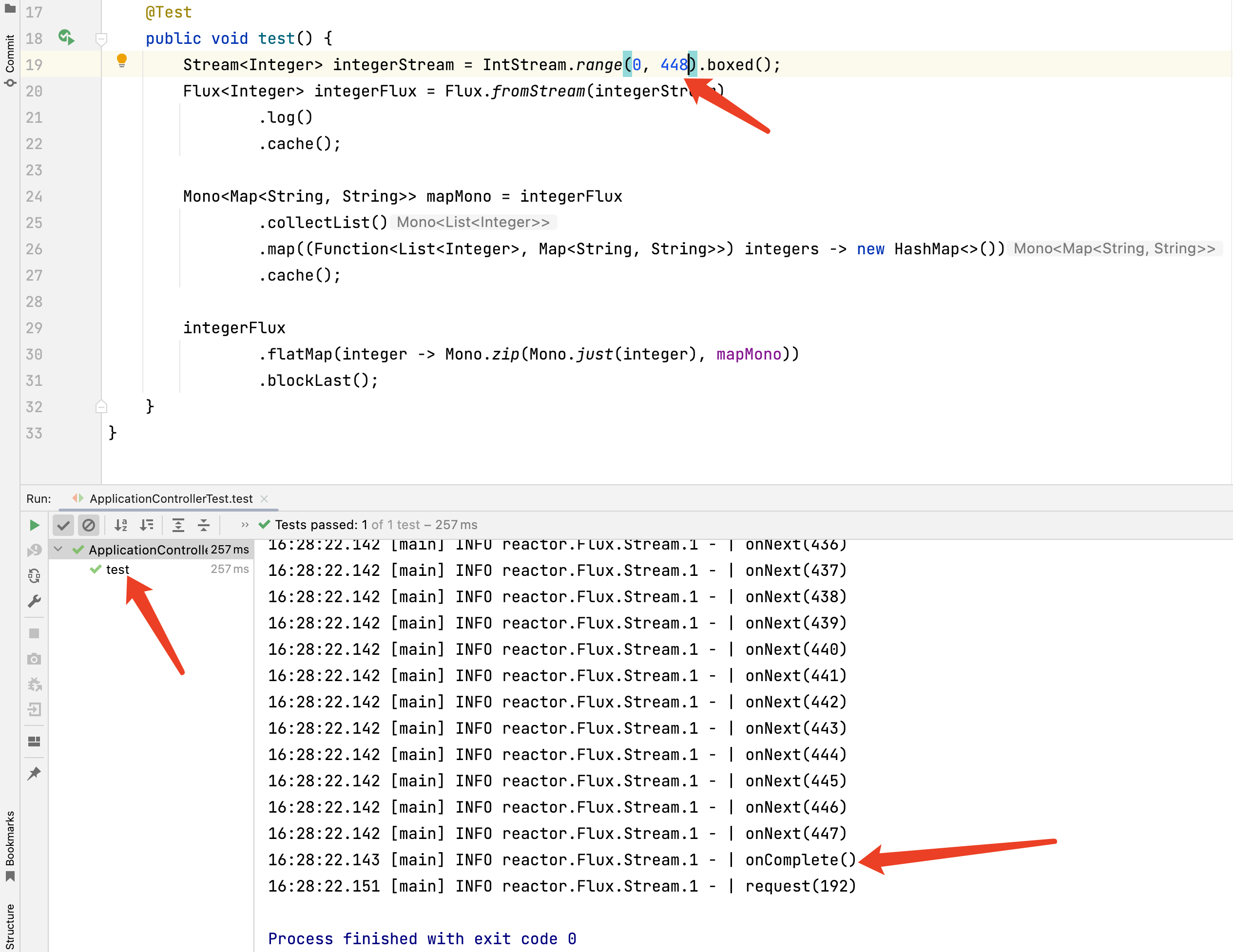

I found a strange issue when I use Flux `cache`. Here is the code:

It blocks, but if I change 449 to 448, it completes successfully. Here are the result images for these two situations:

449:

448:

My reactor-core version is 3.4.19.

Please help me, thanks.
