
Exercise 5. Fill in the missing code in mem_init() after the call to check_page().

Your code should now pass the check_kern_pgdir() and check_page_installed_pgdir() checks.

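Continuing the implementation of mem_init(), the first task is to map the pages array (the npages struct PageInfo entries) at virtual address UPAGES so that user programs can read it. Per its spec, boot_map_region uses perm | PTE_P for the entries, so passing PTE_U alone makes the region read-only for both user and kernel:

boot_map_region(kern_pgdir, UPAGES, PTSIZE, PADDR(pages), PTE_U);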

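The next step is to map the kernel stack to its physical memory.

bootstack is the kernel virtual address of the bottom of the boot stack (bootstacktop is its top). It is reserved in kern/entry.S and placed by the linker, so PADDR(bootstack) gives the stack's physical address.

The region [KSTACKTOP - PTSIZE, KSTACKTOP) is set aside for the stack (KSTACKTOP equals KERNBASE), but only its top KSTKSIZE bytes are mapped. The rest stays unmapped as a guard, so a stack overflow faults instead of silently overwriting memory:

boot_map_region(kern_pgdir, KSTACKTOP - KSTKSIZE, KSTKSIZE, PADDR(bootstack), PTE_W);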

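Lastly, map all of physical memory at KERNBASE, i.e. VA [KERNBASE, 2^32) to PA [0, 2^32 - KERNBASE) (256MB), writable by the kernel only. The size 0xffffffff - KERNBASE is one byte short of 2^32 - KERNBASE, which works as long as boot_map_region also maps a partial last page:

boot_map_region(kern_pgdir, KERNBASE, 0xffffffff - KERNBASE, 0, PTE_W);

The resulting mappings (virtual page number, VA >> 12, -> physical target):

0xef000 -> pages[]

0xefff8 -> bootstack

0xf0000 -> 0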
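The three boot_map_region calls can be sanity-checked outside the kernel with a small host-side model. This is a sketch, not JOS code: sim_walk, sim_map_region, sim_translate and sim_mem_init are hypothetical names, the page directory is modeled as an array of calloc'd tables, the constants are copied from the lab 2 inc/memlayout.h and inc/mmu.h, and the two physical addresses passed to sim_mem_init are placeholders for PADDR(pages) and PADDR(bootstack).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

/* Constants copied from JOS's inc/mmu.h and inc/memlayout.h (lab 2 layout). */
#define PGSIZE    4096u
#define PTSIZE    (1024u * PGSIZE)   /* bytes mapped by one page table */
#define PTE_P     0x001u
#define PTE_W     0x002u
#define PTE_U     0x004u
#define KERNBASE  0xF0000000u
#define KSTACKTOP KERNBASE
#define KSTKSIZE  (8u * PGSIZE)
#define UPAGES    0xEF000000u

/* Hypothetical host-side model: a page directory of pointers to page tables. */
static uint32_t *sim_pgdir[1024];

/* Simplified stand-in for pgdir_walk(pgdir, va, 1): allocate the table on demand. */
static uint32_t *sim_walk(uint32_t va)
{
	uint32_t **pde = &sim_pgdir[va >> 22];
	if (*pde == NULL) {
		*pde = calloc(1024, sizeof(uint32_t));
		assert(*pde != NULL);
	}
	return &(*pde)[(va >> 12) & 0x3FF];
}

/* Same contract as boot_map_region: map [va, va+size) to [pa, pa+size) page by
 * page with permissions perm | PTE_P. A partial last page is also mapped. */
static void sim_map_region(uint32_t va, uint32_t size, uint32_t pa, uint32_t perm)
{
	for (uint32_t off = 0; off < size; off += PGSIZE)
		*sim_walk(va + off) = ((pa + off) & ~(PGSIZE - 1)) | perm | PTE_P;
}

/* Translate va through the model; returns false if the page is not present. */
static bool sim_translate(uint32_t va, uint32_t *pa)
{
	uint32_t *pt = sim_pgdir[va >> 22];
	if (pt == NULL || !(pt[(va >> 12) & 0x3FF] & PTE_P))
		return false;
	*pa = (pt[(va >> 12) & 0x3FF] & ~(PGSIZE - 1)) | (va & (PGSIZE - 1));
	return true;
}

/* The three mappings from the text; pages_pa and bootstack_pa stand in for
 * PADDR(pages) and PADDR(bootstack), which depend on the real kernel image. */
static void sim_mem_init(uint32_t pages_pa, uint32_t bootstack_pa)
{
	sim_map_region(UPAGES, PTSIZE, pages_pa, PTE_U);
	sim_map_region(KSTACKTOP - KSTKSIZE, KSTKSIZE, bootstack_pa, PTE_W);
	sim_map_region(KERNBASE, 0xFFFFFFFFu - KERNBASE, 0, PTE_W);
}
```

For example, after sim_mem_init(0x00118000, 0x0010D000), sim_translate(0xF0100000, &pa) yields pa == 0x00100000, while an address just below KSTACKTOP - KSTKSIZE is reported as unmapped: that is the guard region.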

3. (From Lecture 3) We have placed the kernel and user environment in the same address space. Why will user programs not be able to read or write the kernel's memory? What specific mechanisms protect the kernel memory?

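Because the page table entries that map kernel memory (everything above ULIM) do not have PTE_U set. When the CPU runs user code (CPL 3), the paging hardware requires the U/S bit in both the page directory entry and the page table entry, and writes additionally require PTE_W in both; any access that fails this check raises a page fault. The same mechanism makes [UTOP, ULIM), including UPAGES, read-only for users: PTE_U is set there but PTE_W is not.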
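A minimal sketch of that check, written as a pure function so it can be tried on a host. It models only user-mode accesses on 4KB pages and ignores supervisor accesses, CR0.WP and large pages; user_access_ok is a hypothetical name. Since the hardware ANDs the PDE and PTE bits, JOS can leave page directory entries more permissive and let the PTE decide.

```c
#include <stdbool.h>
#include <stdint.h>

#define PTE_P 0x001u
#define PTE_W 0x002u
#define PTE_U 0x004u

/* Simplified model of the x86 two-level permission check for an access made
 * at CPL 3: the PDE and the PTE must both be present and have PTE_U set, and
 * a write also needs PTE_W in both. Otherwise the MMU raises a page fault. */
static bool user_access_ok(uint32_t pde, uint32_t pte, bool write)
{
	uint32_t need = PTE_P | PTE_U | (write ? PTE_W : 0);
	return (pde & need) == need && (pte & need) == need;
}
```

For a kernel page (PTE_P | PTE_W without PTE_U) the function returns false even for reads; for a UPAGES page (PTE_P | PTE_U) reads succeed and writes fail.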

4. What is the maximum amount of physical memory that this operating system can support? Why?

This system uses the 4096KB (PTSIZE) RO PAGES region at UPAGES to store page information, and a struct PageInfo is 8 bytes (a 4-byte pp_link pointer plus a 2-byte pp_ref, padded to 8), so there can be at most 4096 * 1024 / 8 = 524,288 pages, which cover 524,288 * 4096 bytes = 2048MB = 2GB of physical memory.

5. How much space overhead is there for managing memory, if we actually had the maximum amount of physical memory? How is this overhead broken down?

Page directory = 1024 * 4 bytes = 4KB;

Page tables = 1024 * 1024 * 4 bytes = 4MB to cover the full 4GB, but with 2GB of physical memory only 2GB / 4MB = 512 second-level tables are used, thus 2MB;

PageInfo array (RO PAGES) = PTSIZE = 4MB;

Total = 4KB + 2MB + 4MB = 6MB + 4KB.
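The same breakdown as a small function, so it can be evaluated for other memory sizes. This is a sketch under the answer's assumptions: every physical page is mapped once through 4KB page tables, and sizeof(struct PageInfo) is taken to be 8 bytes as on 32-bit x86 (PAGEINFO_SIZE and mgmt_overhead are hypothetical names). The pages array is computed from npages, so it fills the whole 4MB PTSIZE region only at the 2GB maximum.

```c
#include <stdint.h>

#define PGSIZE        4096ull
#define PTSIZE        (1024ull * PGSIZE)  /* memory covered by one page table */
#define PAGEINFO_SIZE 8ull                /* assumed sizeof(struct PageInfo) on 32-bit x86 */

struct overhead {
	uint64_t pgdir;        /* one page directory */
	uint64_t page_tables;  /* tables needed to map all physical memory */
	uint64_t pages_array;  /* one struct PageInfo per physical page */
};

/* Bookkeeping cost for phys_bytes of physical memory. */
static struct overhead mgmt_overhead(uint64_t phys_bytes)
{
	struct overhead o;
	uint64_t npages = phys_bytes / PGSIZE;

	o.pgdir = PGSIZE;
	o.page_tables = (phys_bytes + PTSIZE - 1) / PTSIZE * PGSIZE;  /* round up */
	o.pages_array = npages * PAGEINFO_SIZE;
	return o;
}
```

For 2GB (2ull << 30) this gives 4KB + 2MB + 4MB, matching the total above.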

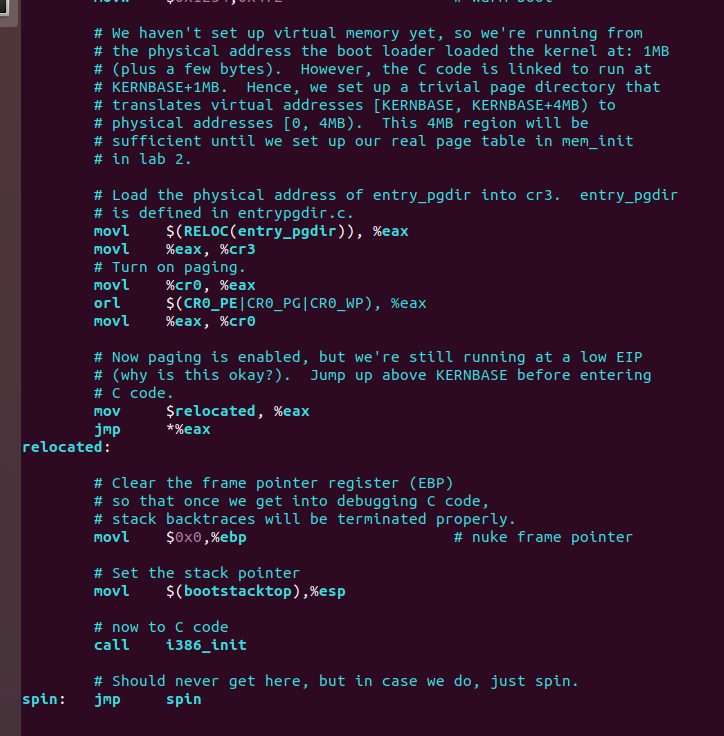

6. Revisit the page table setup in kern/entry.S and kern/entrypgdir.c. Immediately after we turn on paging, EIP is still a low number (a little over 1MB). At what point do we transition to running at an EIP above KERNBASE? What makes it possible for us to continue executing at a low EIP between when we enable paging and when we begin running at an EIP above KERNBASE? Why is this transition necessary?

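See kern/entry.S:

(1) The transition happens at jmp *%eax. Just before it, %eax is loaded with the address of the label relocated, which is linked above KERNBASE, so the jump sets EIP to a high address.

(2) entry_pgdir (kern/entrypgdir.c) maps VA [0, 4MB) to PA [0, 4MB) in addition to mapping VA [KERNBASE, KERNBASE + 4MB) to PA [0, 4MB), so the low EIP still translates to the same physical instructions after paging is turned on.

(3) The kernel is linked to run above KERNBASE, and kern_pgdir, which mem_init() loads into CR3, does not keep the mapping of the low virtual addresses [0, 4MB) (that range belongs to user environments). Code still running at a low EIP would fault once kern_pgdir is installed.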
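A host-side sketch of why point (2) works: it models entry_pgdir as two page directory entries that share one page table covering the first 4MB, as described above. entry_translate is a hypothetical helper, not part of JOS.

```c
#include <stdbool.h>
#include <stdint.h>

#define PDXSHIFT 22
#define KERNBASE 0xF0000000u

/* Model of entry_pgdir: PDE 0 and PDE (KERNBASE >> 22) point at the same page
 * table, which maps page i of the first 4MB to physical page i. Every other
 * PDE is not present, so any other address would page-fault. */
static bool entry_translate(uint32_t va, uint32_t *pa)
{
	uint32_t pdx = va >> PDXSHIFT;

	if (pdx != 0 && pdx != (KERNBASE >> PDXSHIFT))
		return false;
	*pa = va & ((1u << PDXSHIFT) - 1);  /* offset within the 4MB region */
	return true;
}
```

Both 0x00100020 (the low EIP right after paging is enabled) and 0xF0100020 (after the jump to relocated) translate to physical 0x00100020; kern_pgdir keeps only the second kind of mapping.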

Challenge! We consumed many physical pages to hold the page tables for the KERNBASE mapping. Do a more space-efficient job using the PTE_PS ("Page Size") bit in the page directory entries. This bit was not supported in the original 80386, but is supported on more recent x86 processors. You will therefore have to refer to Volume 3 of the current Intel manuals. Make sure you design the kernel to use this optimization only on processors that support it!

Too difficult for me now....
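For whoever picks this up later, a minimal sketch of the two pieces involved: detecting support and building a 4MB page directory entry. Assumptions: the bit values below follow the Intel manuals (CPUID leaf 1 reports PSE in EDX bit 3; CR4 bit 4 enables it) and JOS's inc/mmu.h (PTE_PS = 0x080); cpu_has_pse and make_large_pde are hypothetical helpers. Inside the kernel, the EDX value would come from the cpuid() helper and CR4 would be updated with rcr4()/lcr4(), if your inc/x86.h provides them, before loading a page directory that uses such entries.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bit values as defined in JOS's inc/mmu.h and the Intel manuals. */
#define PTE_P     0x001u
#define PTE_W     0x002u
#define PTE_PS    0x080u        /* PDE maps a 4MB page directly */
#define CR4_PSE   0x00000010u   /* CR4 bit that enables 4MB pages */
#define CPUID_PSE (1u << 3)     /* CPUID.01H:EDX bit 3: PSE supported */
#define PTSIZE    0x00400000u   /* 4MB */

/* edx is the EDX value returned by CPUID with EAX = 1. */
static bool cpu_has_pse(uint32_t edx)
{
	return (edx & CPUID_PSE) != 0;
}

/* Build a PDE that maps one 4MB page starting at pa (rounded down to a 4MB
 * boundary). Only meaningful once CR4_PSE has been set in %cr4. */
static uint32_t make_large_pde(uint32_t pa, uint32_t perm)
{
	return (pa & ~(PTSIZE - 1)) | perm | PTE_P | PTE_PS;
}
```

Mapping the 256MB at KERNBASE this way takes 64 such PDEs and no page tables, instead of 64 page tables (256KB).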

Challenge! Extend the JOS kernel monitor with commands to:

Display in a useful and easy-to-read format all of the physical page mappings (or lack thereof) that apply to a particular range of virtual/linear addresses in the currently active address space. For example, you might enter 'showmappings 0x3000 0x5000' to display the physical page mappings and corresponding permission bits that apply to the pages at virtual addresses 0x3000, 0x4000, and 0x5000.

Explicitly set, clear, or change the permissions of any mapping in the current address space.

Dump the contents of a range of memory given either a virtual or physical address range. Be sure the dump code behaves correctly when the range extends across page boundaries!

Do anything else that you think might be useful later for debugging the kernel. (There's a good chance it will be!)

int setm(int argc, char **argv, struct Trapframe *tf);
int showmappings(int argc, char **argv, struct Trapframe *tf);
int showvm(int argc, char **argv, struct Trapframe *tf);

struct Command {
	const char *name;
	const char *desc;
	// return -1 to force monitor to exit
	int (*func)(int argc, char** argv, struct Trapframe* tf);
};

static struct Command commands[] = {
	{ "help", "Display this list of commands", mon_help },
	{ "kerninfo", "Display information about the kernel", mon_kerninfo },
	{ "bt", "Display the back trace info about the call stack", mon_backtrace },
	{ "sv", "Show virtual mapping", showmappings },
	{ "setm", "Set perm of virtual memory", setm },
	{ "showvm", "Show the value of virtual memory", showvm },
};

// Print the P/W/U bits of a PTE as 0 or 1.
void pprint(pte_t *pte){
	cprintf("PTE_P: %d, PTE_W: %d, PTE_U: %d\n",
		!!(*pte & PTE_P), !!(*pte & PTE_W), !!(*pte & PTE_U));
}

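// Parse a hexadecimal string with an optional "0x" prefix.
// Unlike the first version, it does not modify its argument and accepts A-F.
uint32_t xtoi(const char *buf){
	uint32_t res = 0;
	if (buf[0] == '0' && (buf[1] == 'x' || buf[1] == 'X'))
		buf += 2;
	for (; *buf; ++buf) {
		char c = *buf;
		if (c >= '0' && c <= '9')
			res = res * 16 + (c - '0');
		else if (c >= 'a' && c <= 'f')
			res = res * 16 + (c - 'a' + 10);
		else if (c >= 'A' && c <= 'F')
			res = res * 16 + (c - 'A' + 10);
		else
			break;  // stop at the first non-hex character
	}
	return res;
}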

int showmappings(int argc, char **argv, struct Trapframe *tf){
	if (argc < 3) {
		cprintf("Usage: showmappings 0xbegin_addr 0xend_addr\n");
		return 0;
	}
	uint32_t begin = ROUNDDOWN(xtoi(argv[1]), PGSIZE);
	uint32_t end = xtoi(argv[2]);
	cprintf("begin: %x, end: %x\n", begin, end);
	for (; begin <= end; begin += PGSIZE) {
		// Look up only: create = 0, so inspecting a range never allocates page tables.
		pte_t *pte = pgdir_walk(kern_pgdir, (void *) begin, 0);
		if (pte && (*pte & PTE_P)) {
			cprintf("page %x -> pa %x with ", begin, PTE_ADDR(*pte));
			pprint(pte);
		} else {
			cprintf("page not mapped: %x\n", begin);
		}
		if (begin + PGSIZE < begin)
			break;  // stop instead of wrapping around past 0xffffffff
	}
	return 0;
}

int setm(int argc, char **argv, struct Trapframe *tf)
{
	if (argc < 4) {
		cprintf("Usage: setm 0xaddr 0|1 P|W|U  (0 = clear, 1 = set)\n");
		return 0;
	}
	uint32_t addr = xtoi(argv[1]);
	// Look up only: there is no mapping to change if the page table is missing.
	pte_t *pte = pgdir_walk(kern_pgdir, (void *) addr, 0);
	if (!pte) {
		cprintf("%x has no page table\n", addr);
		return 0;
	}
	cprintf("%x before setm: ", addr);
	pprint(pte);
	uint32_t perm = 0;
	if (argv[3][0] == 'P') perm = PTE_P;
	if (argv[3][0] == 'W') perm = PTE_W;
	if (argv[3][0] == 'U') perm = PTE_U;
	if (argv[2][0] == '0')  // argv[2] selects clear (0) or set (1)
		*pte &= ~perm;
	else
		*pte |= perm;
	tlb_invalidate(kern_pgdir, (void *) addr);  // drop the stale TLB entry
	cprintf("%x after setm: ", addr);
	pprint(pte);
	return 0;
}

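int showvm(int argc, char **argv, struct Trapframe *tf){
	if (argc < 3) {
		cprintf("Usage: showvm 0xaddr 0xn\n");
		return 0;
	}
	uint32_t *addr = (uint32_t *) xtoi(argv[1]);
	uint32_t n = xtoi(argv[2]);  // number of 4-byte words to dump
	uint32_t i;
	for (i = 0; i < n; ++i) {
		// Check each word's page first, so a range that runs into an
		// unmapped page stops cleanly instead of faulting.
		pte_t *pte = pgdir_walk(kern_pgdir, addr + i, 0);
		if (!pte || !(*pte & PTE_P)) {
			cprintf("VM at %x is not mapped\n", (uint32_t) (addr + i));
			break;
		}
		cprintf("VM at %x is %x\n", (uint32_t) (addr + i), addr[i]);
	}
	return 0;
}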
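The page-boundary requirement of the dump command boils down to never treating a range as if it lay in one page. A minimal sketch of the splitting logic in plain C, so it can be tested on a host; chunk_len and count_chunks are hypothetical helpers, not part of JOS. A kernel version would check each chunk's page once (for example with pgdir_walk(kern_pgdir, va, 0)) before reading it, and a physical range could be handled the same way after converting it with KADDR().

```c
#include <stdint.h>

#define PGSIZE 4096u

/* Bytes that can be dumped starting at va before reaching either end or the
 * next page boundary, whichever comes first. Assumes va < end. */
static uint32_t chunk_len(uint32_t va, uint32_t end)
{
	uint32_t to_boundary = PGSIZE - (va % PGSIZE);
	uint32_t remaining = end - va;
	return remaining < to_boundary ? remaining : to_boundary;
}

/* Split [va, end) into per-page pieces and return how many there are. */
static int count_chunks(uint32_t va, uint32_t end)
{
	int n = 0;
	while (va < end) {
		va += chunk_len(va, end);
		n++;
	}
	return n;
}
```

For example, the range [0x3ff0, 0x4010) splits into two 16-byte pieces, one on each side of the 0x4000 boundary.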

Challenge! Write up an outline of how a kernel could be designed to allow user environments unrestricted use of the full 4GB virtual and linear address space. Hint: the technique is sometimes known as "follow the bouncing kernel." In your design, be sure to address exactly what has to happen when the processor transitions between kernel and user modes, and how the kernel would accomplish such transitions. Also describe how the kernel would access physical memory and I/O devices in this scheme, and how the kernel would access a user environment's virtual address space during system calls and the like. Finally, think about and describe the advantages and disadvantages of such a scheme in terms of flexibility, performance, kernel complexity, and other factors you can think of.

Challenge! Since our JOS kernel's memory management system only allocates and frees memory on page granularity, we do not have anything comparable to a general-purpose malloc/free facility that we can use within the kernel. This could be a problem if we want to support certain types of I/O devices that require physically contiguous buffers larger than 4KB in size, or if we want user-level environments, and not just the kernel, to be able to allocate and map 4MB superpages for maximum processor efficiency. (See the earlier challenge problem about PTE_PS.)
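One building block for the contiguous-buffer case is finding n physically adjacent free pages. JOS tracks free pages with page_free_list, which does not make adjacency easy to see, so this sketch assumes a hypothetical free-page bitmap instead (free_map and alloc_contig are illustrative names) and does a first-fit scan. A 4MB superpage would additionally need the run to start at a multiple of 1024 pages, which this sketch does not handle.

```c
#include <stdbool.h>
#include <stddef.h>

/* First-fit search over a free-page map: free_map[i] is true when physical
 * page i is free. Returns the index of the first run of n consecutive free
 * pages and marks those pages used, or -1 if no such run exists. */
static long alloc_contig(bool *free_map, size_t npages, size_t n)
{
	size_t run = 0;

	for (size_t i = 0; i < npages; i++) {
		run = free_map[i] ? run + 1 : 0;
		if (n > 0 && run == n) {
			size_t start = i + 1 - n;
			for (size_t j = start; j <= i; j++)
				free_map[j] = false;
			return (long) start;
		}
	}
	return -1;
}
```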
