
Process Killed without any Error #36

Comments

|

Hey @BATspock - could you check that JAX is correctly installed? See comment #30 (comment) |

|

Hi @sanchit-gandhi, I do not believe this is a problem with the installation. I executed the script referred to in the comment above and got the following output: I am trying to run the basic starter code but am still getting the same error. Starter code: |

|

The error message looks like it's a And then re-running the code? |

|

Any luck @BATspock? Happy to help make this work here! |

|

I was facing the same issue even after uninstalling and reinstalling TensorFlow. I tried playing around with my Nvidia drivers, but now whisper-jax is not detecting my GPU. However, I still see the same problem: the process gets killed without any error. |

|

Update. I decided to ask GPT-4 what's going on, and it told me the cause is insufficient memory. I checked, and it turns out GPT-4 is right! I see the memory fill up aaaaaaaall the way to 16 GB and then the app gets killed. In your case, the problem to solve is that it somehow does not detect your GPU, because obviously you wouldn't need as much RAM if you used your 3050. Because it does not cooperate with your GPU, it falls back on the CPU and tries to load the model into your system's RAM instead of your GPU's VRAM, and that RAM is apparently also insufficient, like in my case. I don't have a GPU so I'm now shit out of luck. You'll be in luck if you figure out how to get the GPU working with it! :) But at least now you know the reason why the app keeps getting killed! :) |
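To confirm whether JAX is really falling back to the CPU, a quick check along these lines may help. This is only a sketch: `describe_jax_backend` is a hypothetical helper name, and it relies on `jax.default_backend()` and `jax.devices()` from the public JAX API.

```python
def describe_jax_backend():
    """Report which backend JAX would use (hypothetical helper, not part of whisper-jax)."""
    try:
        import jax
    except ImportError:
        # JAX is not installed in this environment at all.
        return {"installed": False, "backend": None, "devices": []}
    return {
        "installed": True,
        # "cpu" here means JAX did not pick up a GPU/TPU.
        "backend": jax.default_backend(),
        "devices": [str(device) for device in jax.devices()],
    }


if __name__ == "__main__":
    print(describe_jax_backend())
```

If `backend` comes back as `cpu` on a machine with an Nvidia card, the model weights will be loaded into system RAM, which matches the behaviour described above.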

Still, I would suggest using CUDA greater than 12! I wasted a lot of time on this; it comes down to CUDA & JAX incompatibility issues. If you have a fresh instance with only Ubuntu, try these steps:

1. Install CUDA & drivers
2. Install virtualenv
3. Restart the instance after this!
4. Make a venv & install dependencies

|

What exactly do you mean by fresh instance of only ubuntu? Are you working with a cloud spawned image? |

|

Yeah, on cloud, but when running larger files it gets killed. I am also trying out different things. If I find anything, I will surely share. One thing I noticed from htop is that the CPU is at 100% even for 1 file, while memory is at 4.7 GB / 8 GB. |
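Besides watching htop, the peak memory of the Python process itself can be logged from inside the script. A minimal sketch, assuming a Unix-like system (the standard-library `resource` module is not available on Windows); `peak_rss_mib` is a hypothetical helper name.

```python
import resource
import sys


def peak_rss_mib():
    """Return the peak resident set size of this process in MiB."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


if __name__ == "__main__":
    print(f"peak RSS so far: {peak_rss_mib():.1f} MiB")
```

Calling this right after loading the model and again after each transcription could show whether memory keeps growing between runs or spikes once at load time.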

|

Okay, so the issue here is that the "pool" created using multiprocessing is not getting closed. So every time we run the code in a Flask or Flask + Celery server, the program gets killed.

I have tried with But yeah this was the issue i have checked the RAM & CPU usage on the tiny model. Temp Solution
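The exact workaround is not preserved in this thread. As a generic sketch of how a multiprocessing pool can be closed reliably after each request, something like the following could be used. `managed_pool` and its `pool_factory` parameter are hypothetical names introduced for illustration, not whisper-jax API.

```python
import multiprocessing.pool  # also makes multiprocessing.Pool available
from contextlib import contextmanager


@contextmanager
def managed_pool(processes=2, pool_factory=multiprocessing.Pool):
    """Yield a worker pool and always shut it down afterwards.

    Example (run under an `if __name__ == "__main__":` guard):
        with managed_pool(4) as pool:
            lengths = pool.map(len, ["a", "bb"])
    """
    pool = pool_factory(processes)
    try:
        yield pool
        pool.close()  # normal exit: accept no new tasks, let queued ones finish
    except BaseException:
        pool.terminate()  # error path: stop the workers immediately
        raise
    finally:
        pool.join()  # wait for the workers to exit so nothing lingers
```

In a long-running Flask or Celery worker, wrapping each use of the pool this way should keep worker processes from piling up across requests, assuming the leak really is the unclosed pool described above.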

|

I have the following specs:

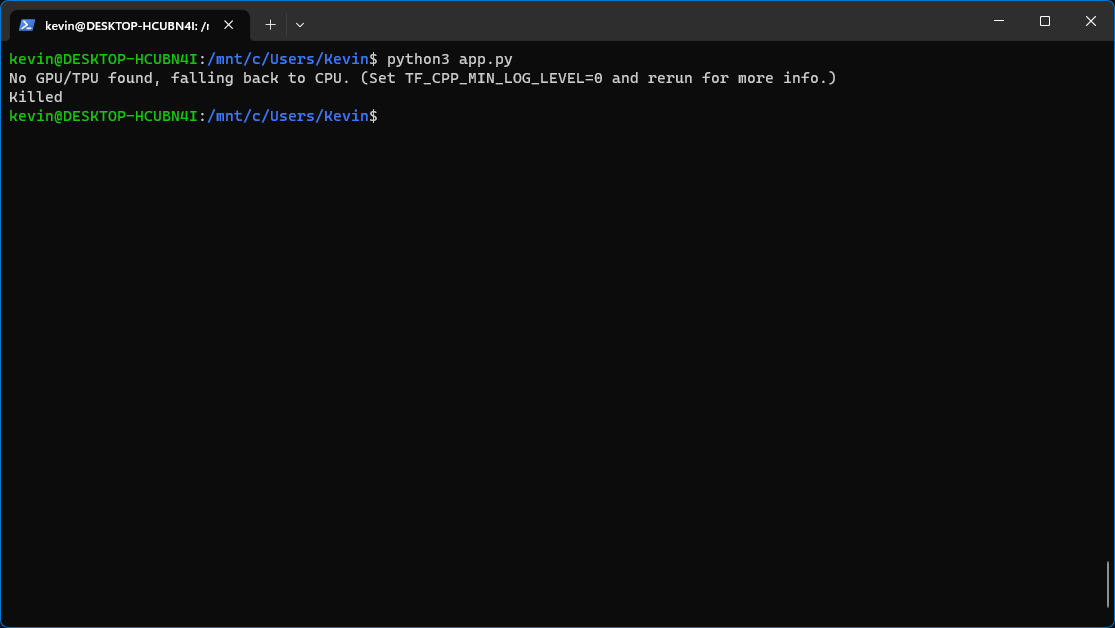

I see the following warning before the program is killed:

W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

I do not see other errors:

How can I resolve this issue? Please let me know if I need to share any more details.
