Kaskade is a text user interface (TUI) for Apache Kafka, built with Textual. It includes features like:

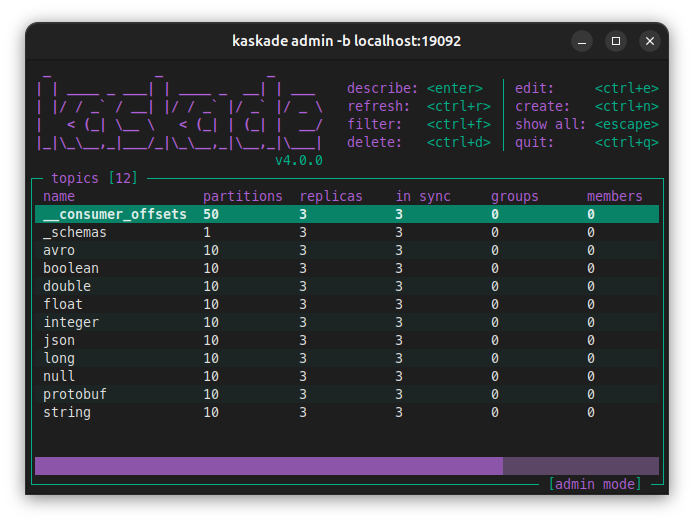

- Admin:

- List topics, partitions, groups and group members

- Topic information like lag, replicas and records count

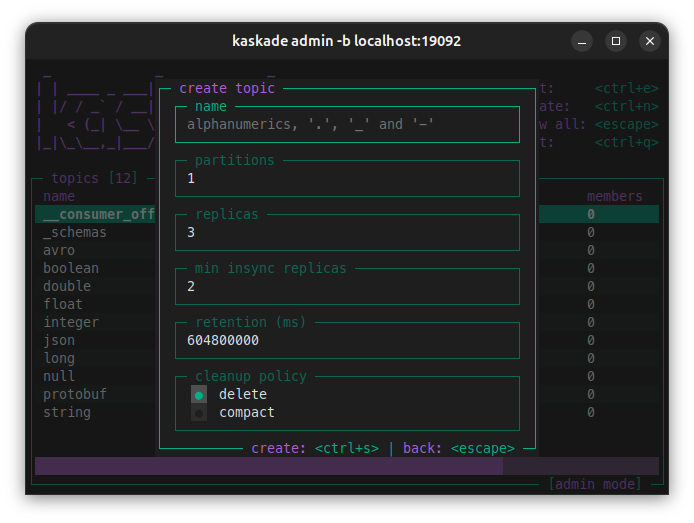

- Create, edit and delete topics

- Filter topics by name

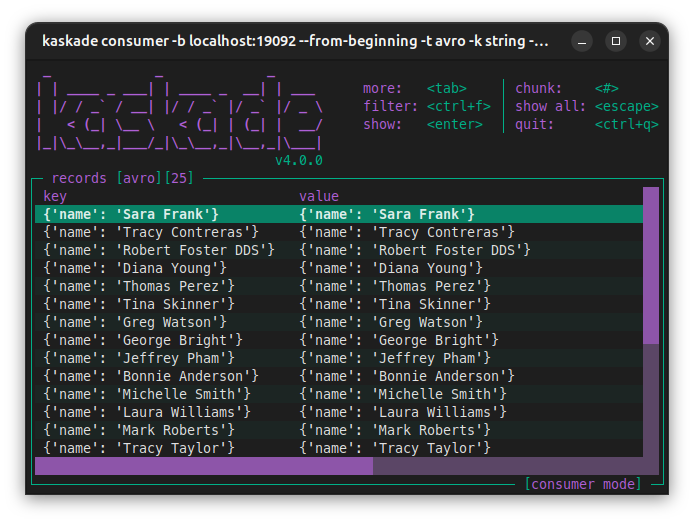

- Consumer:

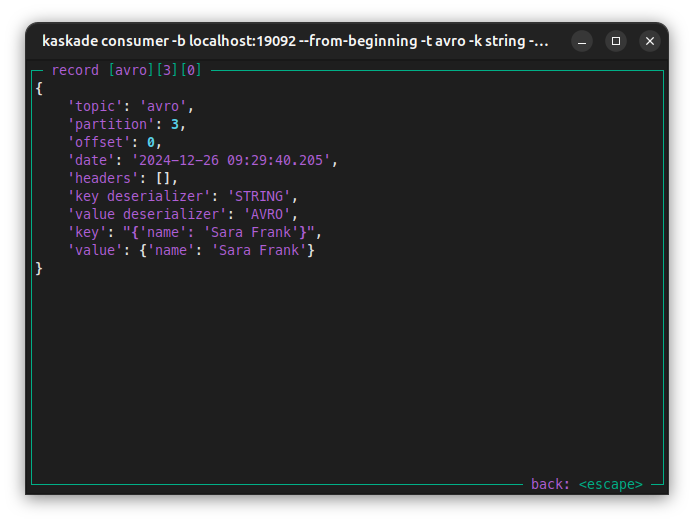

- Json, string, integer, long, float, boolean and double deserialization

- Filter by key, value, header and/or partition

- Schema Registry support with avro


```shell
pipx install kaskade
```

`pipx` will install the `kaskade` and `kskd` aliases.

Upgrade:

```shell
pipx upgrade kaskade
```

Instructions for installing pipx on your OS: pipx Installation.

Help:

```shell
kaskade --help
kaskade admin --help
kaskade consumer --help
```

Admin:

```shell
kaskade admin -b localhost:9092
```

Consumer:

```shell
kaskade consumer -b localhost:9092 -t my-topic
```

Running with Docker:

```shell
docker run --rm -it --network my-network sauljabin/kaskade:latest admin -b my-kafka:9092
docker run --rm -it --network my-network sauljabin/kaskade:latest consumer -b my-kafka:9092 -t my-topic
```

Multiple bootstrap servers:

```shell
kaskade admin -b broker1:9092,broker2:9092
```

JSON keys and values:

```shell
kaskade consumer -b localhost:9092 -t my-topic -k json -v json
```

Starting from the earliest offset:

```shell
kaskade consumer -b localhost:9092 -t my-topic -x auto.offset.reset=earliest
```

Schema Registry with Avro:

```shell
kaskade consumer -b localhost:9092 -s url=http://localhost:8081 -t my-topic -k avro -v avro
```

More Schema Registry configurations at: SchemaRegistryClient.

librdkafka clients do not currently support Avro unions in (de)serialization; more at: Limitations for librdkafka clients.

SSL encryption:

```shell
kaskade admin -b ${BOOTSTRAP_SERVERS} -x security.protocol=SSL
```

For more information about SSL encryption and SSL authentication, see the official librdkafka page: Configure librdkafka client.
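Each librdkafka property is passed with its own `-x` option, so a configuration with several properties repeats `-x` once per property. As a minimal sketch, assuming the properties are kept in a local file (the name `client.properties` is hypothetical) with one `key=value` per line and no spaces around `=`, a small bash function, `props_to_x_args` (hypothetical, not part of kaskade), can turn that file into the repeated `-x` arguments:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kaskade): read librdkafka properties from a
# file and collect them in the global X_ARGS array as repeated "-x key=value".
# Assumes one key=value per line with no spaces around "="; blank lines and
# lines starting with "#" are skipped.
props_to_x_args() {
  local file="$1" line
  X_ARGS=()
  # "|| [[ -n $line ]]" also keeps a last line that has no trailing newline
  while IFS= read -r line || [[ -n "$line" ]]; do
    line="${line%$'\r'}"                      # drop a Windows line ending
    line="${line#"${line%%[![:space:]]*}"}"   # trim leading whitespace
    [[ -z "$line" || "$line" == \#* ]] && continue
    X_ARGS+=(-x "$line")
  done < "$file"
}
```

For example, `props_to_x_args client.properties` followed by `kaskade admin -b "${BOOTSTRAP_SERVERS}" "${X_ARGS[@]}"`. Expanding `"${X_ARGS[@]}"` in double quotes passes each `key=value` pair as a single argument, even when a value contains spaces or further `=` characters.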

Confluent Cloud admin:

```shell
kaskade admin -b ${BOOTSTRAP_SERVERS} \
    -x security.protocol=SASL_SSL \
    -x sasl.mechanism=PLAIN \
    -x sasl.username=${CLUSTER_API_KEY} \
    -x sasl.password=${CLUSTER_API_SECRET}
```

Confluent Cloud consumer:

```shell
kaskade consumer -b ${BOOTSTRAP_SERVERS} \
    -x security.protocol=SASL_SSL \
    -x sasl.mechanism=PLAIN \
    -x sasl.username=${CLUSTER_API_KEY} \
    -x sasl.password=${CLUSTER_API_SECRET} \
    -s url=${SCHEMA_REGISTRY_URL} \
    -s basic.auth.user.info=${SR_API_KEY}:${SR_API_SECRET} \
    -t my-topic \
    -k string \
    -v avro
```

More about Confluent Cloud configuration at: Kafka Client Quick Start for Confluent Cloud.
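The Confluent Cloud examples read their settings from environment variables. If one of them is unset, the unquoted `${...}` expands to nothing, so kaskade receives incomplete options and the problem only shows up when it tries to connect. A minimal sketch, using a hypothetical `require_vars` bash function (not part of kaskade), that checks the variables before the command runs:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kaskade): fail early, listing the names,
# when any of the given environment variables is unset or empty.
require_vars() {
  local name
  local missing=()
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable called $name
    [[ -n "${!name:-}" ]] || missing+=("$name")
  done
  if ((${#missing[@]} > 0)); then
    echo "missing environment variables: ${missing[*]}" >&2
    return 1
  fi
}
```

For example, a script could run `require_vars BOOTSTRAP_SERVERS CLUSTER_API_KEY CLUSTER_API_SECRET SCHEMA_REGISTRY_URL SR_API_KEY SR_API_SECRET || exit 1` before the consumer command. Indirect expansion (`${!name}`) is bash-specific, so the sketch needs bash rather than a plain POSIX `sh`.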

For development instructions see DEVELOPMENT.md.