
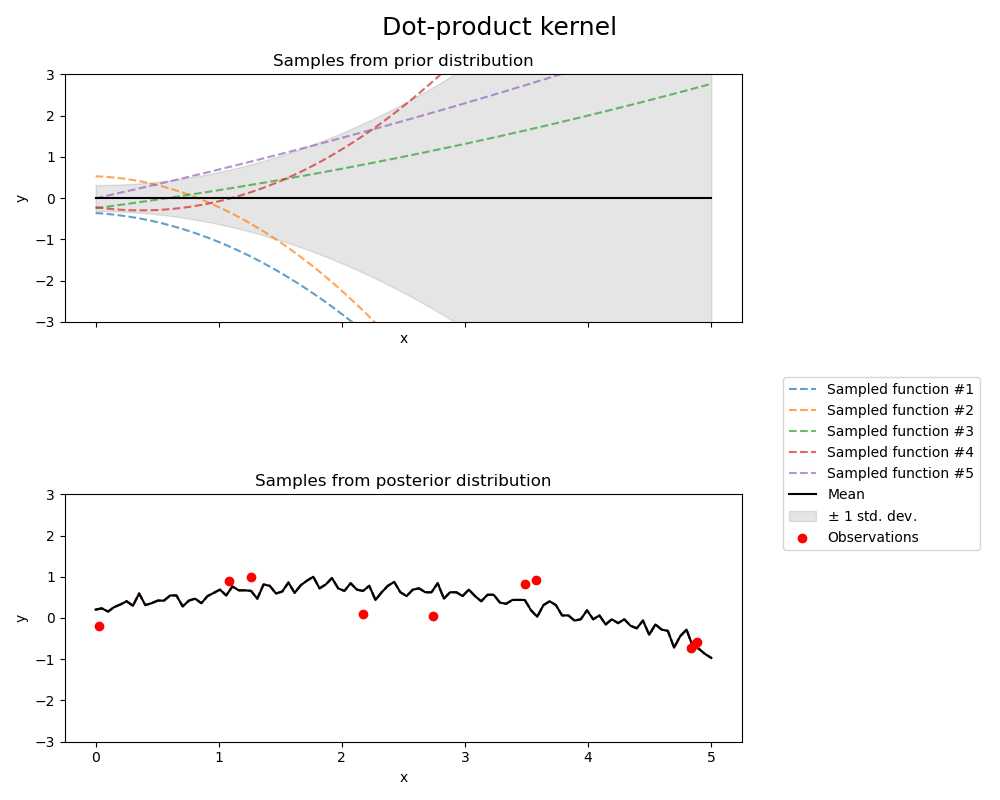

Figure is shown incorrectly #25755

Comments


This is not an issue with the figure itself. However, it is linked to the … We need to investigate whether this is normal, or how we could remove this warning to get a better figure.
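One way to check whether the warning is expected is to fit the dot-product GP directly and record the warnings it emits. The sketch below assumes training data loosely modelled on the gallery example (ten random points, `y = sin((x - 2.5)**2)`); the data and the helper `fit_and_collect_warnings` are hypothetical and not taken from this thread.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct

# Hypothetical training data, loosely modelled on the gallery example.
rng = np.random.RandomState(4)
X_train = rng.uniform(0, 5, 10).reshape(-1, 1)
y_train = np.sin((X_train[:, 0] - 2.5) ** 2)

kernel = ConstantKernel(0.1, (0.01, 10.0)) * (
    DotProduct(sigma_0=1.0, sigma_0_bounds=(0.1, 10.0)) ** 2
)


def fit_and_collect_warnings(gpr, X, y):
    """Hypothetical helper: fit `gpr` and return only the ConvergenceWarnings."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # make sure repeated warnings are recorded
        gpr.fit(X, y)
    return [w for w in caught if issubclass(w.category, ConvergenceWarning)]


# Default optimizer ("fmin_l_bfgs_b"), as in the example under discussion.
gpr = GaussianProcessRegressor(kernel=kernel, random_state=0)
convergence_warnings = fit_and_collect_warnings(gpr, X_train, y_train)
print(f"{len(convergence_warnings)} ConvergenceWarning(s) raised")
for w in convergence_warnings:
    print("  ", w.message)
print("Fitted kernel:", gpr.kernel_)
```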


My understanding of Gaussian Processes is very limited, but I'll write down what I found in case it helps someone solve this. It seems that the convergence problem has been present from the beginning and appears in every release (the rendered notebooks for v0.18, v0.19, and v0.21 don't show the warning, but I ran the examples with versions …). To avoid the warning, I added two parameters to the …

Thus, we could edit the code for the GP with the dot-product kernel like this:

```python
import scipy.optimize
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct
from sklearn.utils.optimize import _check_optimize_result


def my_optimizer(obj_func, initial_theta, bounds):
    # Same L-BFGS-B call as scikit-learn's default optimizer, but allowing
    # more line-search steps per iteration (scipy's default maxls is 20).
    opt_res = scipy.optimize.minimize(
        obj_func,
        initial_theta,
        method="L-BFGS-B",
        jac=True,
        bounds=bounds,
        options={"maxls": 40},
        # bounds=None,  # The other option: drop the bounds instead of increasing maxls.
    )
    _check_optimize_result("lbfgs", opt_res)
    return opt_res.x, opt_res.fun


kernel = ConstantKernel(0.1, (0.01, 10.0)) * (
    DotProduct(sigma_0=1.0, sigma_0_bounds=(0.1, 10.0)) ** 2
)
gpr = GaussianProcessRegressor(kernel=kernel, random_state=0, optimizer=my_optimizer)
```

...

Nevertheless, the GP fit does not appear to be good. In fact, it is fairly similar to the GP that raises the warning.

With the warning, the GP has:

Without the warning, the GP has:

On the other hand, the posterior mean function rendered in the notebook is different from what I get when I run it myself. The rendered notebook shows:

Is this behavior expected?
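To compare the two fits numerically rather than by eye, a sketch along these lines fits the same kernel with scikit-learn's default optimizer and with a `maxls=40` variant, then reports the warnings, the learned kernel, the log marginal likelihood, and how far apart the two posterior means are. The training data, the test grid, and the name `optimizer_more_line_search` are assumptions for illustration; `_check_optimize_result` is a private scikit-learn helper (used in the snippet above), so it may change between releases.

```python
import warnings

import numpy as np
import scipy.optimize
from sklearn.exceptions import ConvergenceWarning
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct
from sklearn.utils.optimize import _check_optimize_result  # private helper

# Hypothetical training data and test grid.
rng = np.random.RandomState(4)
X_train = rng.uniform(0, 5, 10).reshape(-1, 1)
y_train = np.sin((X_train[:, 0] - 2.5) ** 2)
X_test = np.linspace(0, 5, 100).reshape(-1, 1)

kernel = ConstantKernel(0.1, (0.01, 10.0)) * (
    DotProduct(sigma_0=1.0, sigma_0_bounds=(0.1, 10.0)) ** 2
)


def optimizer_more_line_search(obj_func, initial_theta, bounds):
    """Hypothetical name: L-BFGS-B with maxls raised from 20 to 40."""
    opt_res = scipy.optimize.minimize(
        obj_func, initial_theta, method="L-BFGS-B", jac=True,
        bounds=bounds, options={"maxls": 40},
    )
    _check_optimize_result("lbfgs", opt_res)
    return opt_res.x, opt_res.fun


results = {}
for name, optimizer in [
    ("default", "fmin_l_bfgs_b"),  # scikit-learn's default optimizer
    ("maxls=40", optimizer_more_line_search),
]:
    gpr = GaussianProcessRegressor(kernel=kernel, random_state=0, optimizer=optimizer)
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")
        gpr.fit(X_train, y_train)  # kernel is cloned into gpr.kernel_, so reuse is safe
    n_conv = sum(issubclass(w.category, ConvergenceWarning) for w in caught)
    results[name] = gpr
    print(
        f"{name}: {n_conv} ConvergenceWarning(s), kernel_={gpr.kernel_}, "
        f"log marginal likelihood={gpr.log_marginal_likelihood_value_:.3f}"
    )

# How different are the two posterior means on the test grid?
mean_default = results["default"].predict(X_test)
mean_custom = results["maxls=40"].predict(X_test)
print("max |difference in posterior mean|:", np.abs(mean_default - mean_custom).max())
```

A small difference in the posterior means together with similar log marginal likelihoods would be consistent with the observation above that both fits look alike.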

Describe the issue linked to the documentation

The second part of the figure doesn't show the sampled functions.
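To check whether posterior samples are actually being drawn, a sketch like the following calls `GaussianProcessRegressor.sample_y` on a test grid and measures how far the samples stray from the posterior mean. The data and grid are hypothetical, and the idea that a near-zero posterior standard deviation makes every sample overlap the mean curve is only a hypothesis to test, not a confirmed cause.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct

# Hypothetical training data and test grid.
rng = np.random.RandomState(4)
X_train = rng.uniform(0, 5, 10).reshape(-1, 1)
y_train = np.sin((X_train[:, 0] - 2.5) ** 2)
X_test = np.linspace(0, 5, 100).reshape(-1, 1)

kernel = ConstantKernel(0.1, (0.01, 10.0)) * (
    DotProduct(sigma_0=1.0, sigma_0_bounds=(0.1, 10.0)) ** 2
)
gpr = GaussianProcessRegressor(kernel=kernel, random_state=0)
with warnings.catch_warnings():
    # The ConvergenceWarning is discussed above; silence it here.
    warnings.simplefilter("ignore", ConvergenceWarning)
    gpr.fit(X_train, y_train)

y_mean, y_std = gpr.predict(X_test, return_std=True)
# Five functions drawn from the posterior: shape (n_points, n_samples) for a 1-D target.
y_samples = gpr.sample_y(X_test, n_samples=5, random_state=0)

# If this gap is tiny, the sampled curves are drawn but hidden under the mean.
max_sample_gap = np.abs(y_samples - y_mean[:, np.newaxis]).max()
print("largest posterior std:", y_std.max())
print("largest |sample - mean|:", max_sample_gap)
```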

Suggest a potential alternative/fix

No response
